1 Introduction

Recently, there has been some interest in the literature in the convergence of the empirical means of a sequence of realizations of the solution of a stochastic differential equation (SDE) with random coefficients, as a means to construct new classes of stochastic processes. For instance, K. El-Sebaiy and co-authors, in a sequence of papers [10, 11, 8], have constructed several classes of Ornstein–Uhlenbeck type processes driven by a fractional Brownian motion ${B^{H}}(t)$ or a Hermite process ${Z_{q}^{H}}(t)$ of order $q\ge 1$.

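The construction of this kind of processes was introduced in [13] in the analysis of a stationary Ornstein–Uhlenbeck process

\[ \mathrm{d}{X_{k}}(t)=-{\alpha _{k}}{X_{k}}(t)\hspace{0.1667em}\mathrm{d}t+\mathrm{d}W(t),\hspace{2em}k\ge 1,\hspace{1em}t>0,\tag{1}\]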

with initial condition ${X_{k}}(0)=0$, where $W=\{W(t),\hspace{2.5pt}t\ge 0\}$ is a real standard Brownian motion defined on a stochastic basis $(\Omega ,\mathcal{F},\{{\mathcal{F}_{t}},\hspace{2.5pt}t\in \mathbb{R}\},\mathbb{P})$ and $\{{\alpha _{k}},\hspace{2.5pt}k\ge 1\}$ is a sequence of independent, identically distributed random variables, independent of W. With no loss of generality, we may assume that the sequence of ${\alpha _{k}}$ is defined on a different probability space $(\bar{\Omega },\bar{\mathcal{F}},\bar{\mathbb{P}})$.

The empirical means are defined by

\[ {Y_{n}}(t)=\frac{1}{n}{\sum \limits_{k=1}^{n}}{X_{k}}(t),\hspace{2em}n\ge 1.\tag{2}\]

Under the assumption that ${\alpha _{k}}$ are independent, identically distributed, with a Gamma distribution $\Gamma (\mu ,\lambda )$, with shape parameter $\mu >\frac{1}{2}$ and scale parameter $\lambda >0$, in [13] the authors prove that $\bar{\mathbb{P}}$-a.s. the sequence ${({Y_{n}}(t))_{n}}$ converges to a process $Y(t)$ for any $t\in \mathbb{R}$ and in $C([a,b])$ for arbitrary $a < b$, where the process $Y(t)$ is defined in law by

In order to extend the above construction, we recall the notion of stochastic Volterra processes defined as convolution processes between a given kernel and a Brownian motion W

Such processes are related to (and to some extent coincide with a subclass of) ambit processes, as introduced by Barndorff-Nielsen [3, 4], and in particular to the so-called Brownian semistationary processes, see [2].

Because of the memory term, these processes cannot be expressed in the Itô differential form (1), except in the case of an exponential kernel $g(t,s)={e^{\lambda (t-s)}}$. It is, however, possible to describe their behaviour through a stochastic integral (Volterra) equation. Although these processes are not Markovian, several of their properties can be studied; see for instance [6] for a survey of known results and of the relation with the fractional Brownian motion.

In analogy with the existing literature, we aim to construct a class of stochastic Volterra processes via an aggregation procedure (i.e. by taking the limit of the empirical means). This approach also allows us to prove some properties of the resulting processes. We shall refer to them as fractional Ornstein–Uhlenbeck processes, since they are given as solutions of the integral equation

\[ {X_{k}}(t)=-{\alpha _{k}}\left({g_{\rho }}\ast {X_{k}}\right)(t)+W(t),\hspace{2em}k\ge 1,\hspace{1em}t>0,\tag{3}\]

with initial condition ${X_{k}}(0)=0$, where ∗ denotes the convolution product and

\[ {g_{\rho }}(t)=\frac{{t^{\rho -1}}}{\Gamma (\rho )},\hspace{2em}t>0,\]

denotes the fractional integration kernel for any $\rho >0$, cf. [18]. It is well known [6, 7] that the solution of (3) is a Gaussian process defined in terms of the scalar resolvent equation

\[ {s_{\alpha }}(t)+\alpha {\int _{0}^{t}}{g_{\rho }}(t-\tau ){s_{\alpha }}(\tau )\hspace{0.1667em}d\tau =1,\hspace{2em}t\ge 0,\hspace{1em}\alpha \ge 0,\tag{4}\]

by means of the formula

\[ {X_{k}}(t)={\int _{0}^{t}}{s_{{\alpha _{k}}}}(t-s)\hspace{0.1667em}\mathrm{d}W(s).\tag{5}\]

The scalar resolvent function ${s_{\alpha }}(t)$ can be expressed in terms of the well-known Mittag-Leffler function ${E_{\rho }}(t)$ [15]; this link will be exploited in order to prove finer properties of the function ${s_{\alpha }}(t)$, see Section 2. Notice further that the choice $\rho =1$ reduces the problem to the evolution equation studied in [13]. In this case, ${s_{\alpha }}(t)={e^{-\alpha t}}$ satisfies the semigroup property, ${X_{k}}$ is a Markov process and its behaviour at infinity is much easier to study than in the case $1<\rho \le 2$.

1.1 Presentation of the results and outline of the paper

In this paper, we assume that

and that the fractional integration parameter ρ satisfies

Notice that condition (6) is quite standard in the literature, compare, e.g., [13]. In our setting, we prove that the sequence of the empirical mean processes ${Y_{n}}$ (given in (2)) converges in ${L^{2}}(\mathbb{P})$, for each fixed time, $\bar{\mathbb{P}}$-a.s., to a stochastic process $Y(t)$ given by the convolution Wiener integral

\[ Y(t)={\int _{0}^{t}}\bar{\mathbb{E}}[{s_{\alpha }}(t-s)]\hspace{0.1667em}\mathrm{d}W(s),\tag{7}\]

where the expectation $\bar{\mathbb{E}}$ with respect to $\bar{\mathbb{P}}$ (that is, with respect to the random variable α) of the scalar resolvent kernel can be computed explicitly and expressed through a generalized Wright–Fox function ${G_{\rho }^{\mu }}(z)$, see Section 2.2 below. In Section 3, we prove that ${Y_{n}}(t)$ converges pathwise on $[0,T]$, $\bar{\mathbb{P}}$-a.s., for arbitrary $T>0$. First, in Theorem 2, we prove weak convergence of the laws in the space of continuous trajectories on $[0,T]$, by proving a suitable tightness result. Then, in Theorem 3, we prove that the convergence holds almost surely, by using a particular application of the integration by parts formula.

Finally, in Section 4 we consider the asymptotic behaviour of the limit process $Y(t)$ as $t\to \infty $. Notice that in the previous section no condition was imposed on the values of the parameters μ and λ defining the α-distribution. In this section, we shall impose the bound

notice that this condition is consistent with the condition $\mu >\frac{1}{2}$ imposed in [13] for the evolution problem ($\rho =1$).
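For ρ = 1 the role of this threshold can be seen in a short worked computation. We assume here, as in the computation of $\bar{\mathbb{E}}[{\alpha ^{1/\rho }}]$ in Section 3.3, that α has the Gamma density $\lambda {(\lambda x)^{\mu -1}}{e^{-\lambda x}}/\Gamma (\mu )$, so that λ acts as a rate. Then ${s_{\alpha }}(u)={e^{-\alpha u}}$, its $\bar{\mathbb{P}}$-mean is the Laplace transform of the Gamma law, and the variance of the limit process stays bounded in time exactly when $\mu >\frac{1}{2}$:

\[ \bar{\mathbb{E}}[{e^{-\alpha u}}]={\int _{0}^{\infty }}{e^{-ux}}\frac{\lambda {(\lambda x)^{\mu -1}}{e^{-\lambda x}}}{\Gamma (\mu )}\hspace{0.1667em}\mathrm{d}x={\left(1+\frac{u}{\lambda }\right)^{-\mu }},\]

\[ \underset{t\to \infty }{\lim }{\int _{0}^{t}}{\left(1+\frac{u}{\lambda }\right)^{-2\mu }}\hspace{0.1667em}\mathrm{d}u=\frac{\lambda }{2\mu -1}\hspace{1em}\text{if}\hspace{2.5pt}\mu >\frac{1}{2},\hspace{2em}\text{and}\hspace{2.5pt}=+\infty \hspace{2.5pt}\text{otherwise}.\]

This is the case ρ = 1 of the condition $\mu >\frac{1}{2\rho }$ assumed in Theorem 4.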

We show that the process $Y(t)$ converges in distribution, as $t\to \infty $, to some Gaussian random variable η, and we characterize the limit as a “stationary” solution of the problem. The quotation marks are due to the fact that we cannot use the notion of invariant measure for SDEs, since our system is not Markovian. However, if we consider the solution of a modified problem with initial time equal to $-\infty $, this process has the same law as η at all times.

2 Some special functions

2.1 Mittag-Leffler function

We introduce the Mittag-Leffler function ${E_{\rho }}(z)$ defined by the series

for any $\rho >0$. Notice that this is a generalization of the exponential function, obtained as a special case when $\rho =1$. This function was introduced by Mittag-Leffler in [15], and since then studied by many authors. Basic references for the next results are [9, 12].

(9)

\[ {E_{\rho }}(z)={\sum \limits_{k=0}^{\infty }}\frac{{z^{k}}}{\Gamma (\rho k+1)},\hspace{2em}z\in \mathbb{C},\]The series in (9) converges in the whole convex plane for $\rho >0$. For $x\in \mathbb{R}$, $x\to \infty $, we get the following asymptotic expansion [12, (3.4.15)]: for any $m\ge 1$ we have

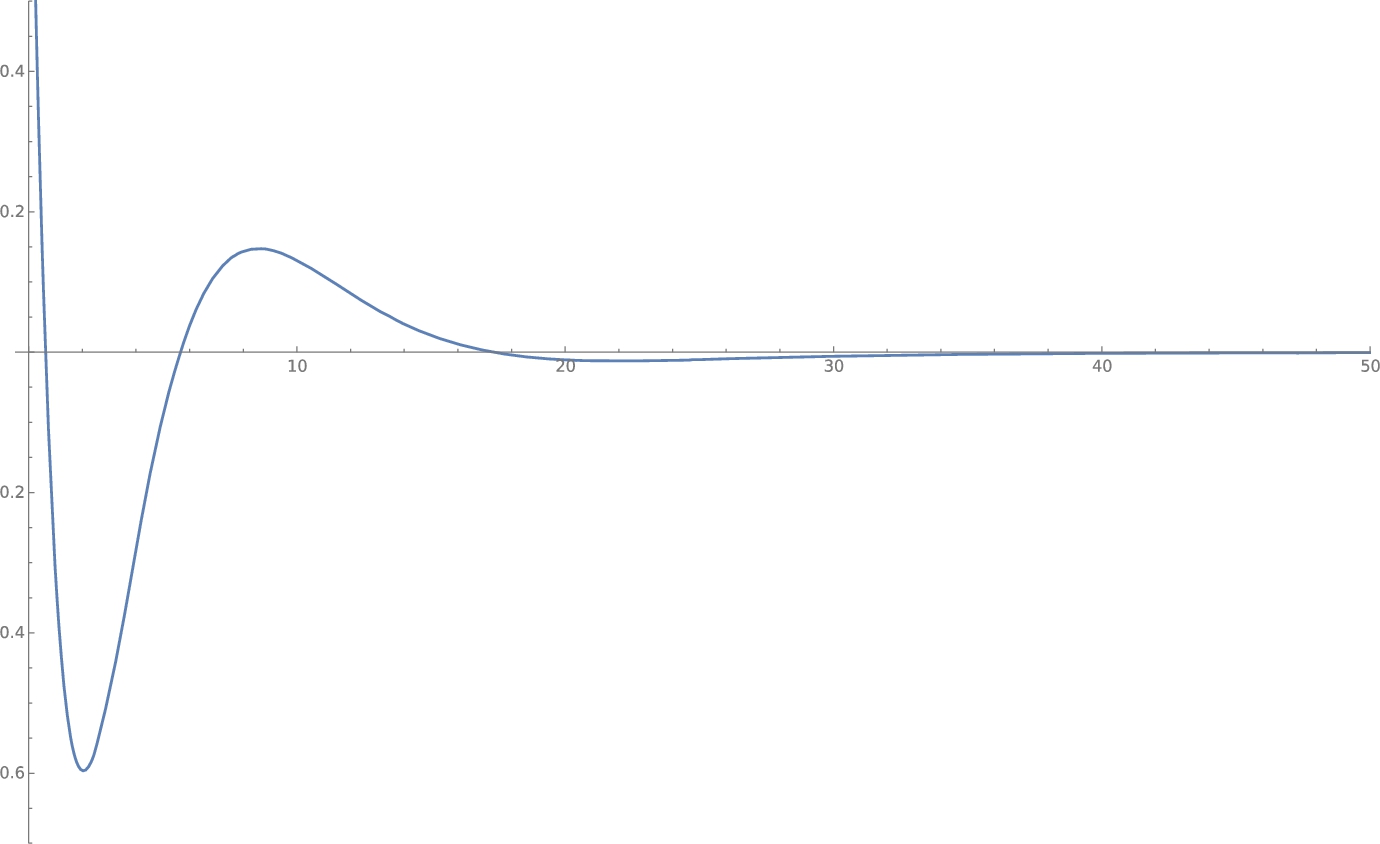

The case $0<\rho <1$ is somewhat special because the Mittag-Leffler function ${E_{\rho }}(-x)$ is completely monotonic, that is, for all $n\in \mathbb{N}$ and all $x\in {\mathbb{R}_{+}}$, ${(-1)^{n}}\frac{{\mathrm{d}^{n}}}{\mathrm{d}{x^{n}}}{E_{\rho }}(-x)\ge 0$. In particular, ${E_{\rho }}(-x)$ has no real zeros for $x\in {\mathbb{R}_{+}}$ when $0<\rho <1$. In contrast, for $1<\rho <2$, the Mittag-Leffler function ${E_{\rho }}(-x)$ shows a damped oscillation converging to zero as $x\to \infty $, as can be seen in Figure 1.

Fig. 1.

The graph of the Mittag-Leffler function ${E_{\rho }}(-x)$ with parameter $\rho =1.9$. Plotted with Mathematica [20]
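For moderate arguments the series (9) can be summed directly. The following Python sketch is only an illustration (the name `mittag_leffler` and the stopping rule are our own choices, not taken from [9, 12]): it forms the terms from log-Gamma values to avoid overflow and is intended for real arguments of moderate size, since for large |z| the alternating series loses accuracy through cancellation. It recovers the special cases ${E_{1}}(-x)={e^{-x}}$, ${E_{2}}(-{x^{2}})=\cos x$ and ${E_{1/2}}(-x)={e^{{x^{2}}}}\mathrm{erfc}(x)$, and the sign changes of ${E_{1.9}}(-x)$ visible in Figure 1.

```python
import math


def mittag_leffler(rho: float, z: float, tol: float = 1e-17, max_terms: int = 2000) -> float:
    """Truncated series E_rho(z) = sum_k z^k / Gamma(rho*k + 1) for real z (sketch only).

    Terms are formed as exp(k*log|z| - lgamma(rho*k + 1)) so that Gamma(rho*k + 1)
    never overflows. Accuracy degrades for large |z| because of cancellation.
    """
    if z == 0.0:
        return 1.0
    log_abs_z = math.log(abs(z))
    sign = -1.0 if z < 0 else 1.0
    # Rough index beyond which |term| decreases (terms grow while (rho*k)^rho < |z|).
    k_peak = abs(z) ** (1.0 / rho) / rho
    total = 0.0
    for k in range(max_terms):
        term = sign ** k * math.exp(k * log_abs_z - math.lgamma(rho * k + 1.0))
        total += term
        if k > k_peak and abs(term) < tol:
            break
    return total


if __name__ == "__main__":
    print(mittag_leffler(1.0, -1.0), math.exp(-1.0))            # rho = 1: exponential
    print(mittag_leffler(2.0, -4.0), math.cos(2.0))             # rho = 2: E_2(-x^2) = cos(x)
    print(mittag_leffler(0.5, -1.0), math.e * math.erfc(1.0))   # rho = 1/2: e^{x^2} erfc(x)
    # rho = 1.9: damped oscillation, so E_rho(-x) changes sign (compare Figure 1)
    print([round(mittag_leffler(1.9, -float(x)), 4) for x in range(0, 21, 2)])
```

For large arguments a dedicated algorithm (for instance one based on an integral representation) would be needed; on a moderate range the plain series is enough to reproduce the qualitative picture of Figure 1.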

The global behaviour of the Mittag-Leffler function ${E_{\rho }}(-x)$ is established in [12, Corollary 3.1]:

\[ |{E_{\rho }}(-x)|\le \frac{M}{1+x},\hspace{2em}x\ge 0,\tag{11}\]

for some constant M depending only on ρ.

Recall that, by definition, the two-parametric Mittag-Leffler function is (see, for example, [12]),

\[ {E_{\rho ,\tau }}(z)={\sum \limits_{k=0}^{\infty }}\frac{{z^{k}}}{\Gamma (\rho k+\tau )},\hspace{2em}z\in \mathbb{C},\mathrm{\Re }(\rho )>0,\tau \in \mathbb{C}.\]
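The two-parametric function can be summed in the same way. As a sanity check of the derivative formula used in Section 3, ${s^{\prime }_{1}}(x)=-{x^{\rho -1}}{E_{\rho ,\rho }}(-{x^{\rho }})$, the Python sketch below (the helper names `mittag_leffler2`, `s1` and `s1_prime` are hypothetical) compares it with a central finite difference of $x\mapsto {E_{\rho }}(-{x^{\rho }})$ for ρ = 1.5, and evaluates the ratio $\frac{{x^{\rho -1}}}{1+{x^{\rho }}}$ whose supremum over $x>0$ is bounded by 1 in Section 3.3. The same caveat on large arguments applies.

```python
import math


def mittag_leffler2(rho: float, tau: float, z: float, tol: float = 1e-17, max_terms: int = 2000) -> float:
    """Truncated series E_{rho,tau}(z) = sum_k z^k / Gamma(rho*k + tau), real z, tau > 0 (sketch)."""
    if z == 0.0:
        return 1.0 / math.gamma(tau)
    log_abs_z = math.log(abs(z))
    sign = -1.0 if z < 0 else 1.0
    k_peak = abs(z) ** (1.0 / rho) / rho  # terms shrink roughly beyond this index
    total = 0.0
    for k in range(max_terms):
        term = sign ** k * math.exp(k * log_abs_z - math.lgamma(rho * k + tau))
        total += term
        if k > k_peak and abs(term) < tol:
            break
    return total


def s1(x: float, rho: float) -> float:
    """Scalar resolvent with alpha = 1: s_1(x) = E_rho(-x^rho) = E_{rho,1}(-x^rho)."""
    return mittag_leffler2(rho, 1.0, -x ** rho)


def s1_prime(x: float, rho: float) -> float:
    """Closed-form derivative -x^(rho-1) * E_{rho,rho}(-x^rho), for x > 0."""
    return -x ** (rho - 1.0) * mittag_leffler2(rho, rho, -x ** rho)


if __name__ == "__main__":
    rho, h = 1.5, 1e-5
    for x in (0.5, 1.0, 2.0, 4.0):
        central = (s1(x + h, rho) - s1(x - h, rho)) / (2.0 * h)  # central difference
        print(x, central, s1_prime(x, rho))
    grid = [0.01 * i for i in range(1, 2001)]
    print(max(x ** (rho - 1.0) / (1.0 + x ** rho) for x in grid))  # stays below 1
```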

Like the Mittag-Leffler function ${E_{\rho }}(z)$, the function ${E_{\rho ,\rho }}(z)$ is an entire function of order $\omega =\frac{1}{\rho }$. Moreover, it is proved in [17, (1.2.5)] that an asymptotic expansion similar to the one given in (10) for the Mittag-Leffler function holds for the two-parametric Mittag-Leffler function:

\[ {E_{\rho ,\rho }}(-x)=-{\sum \limits_{k=1}^{m}}\frac{{(-x)^{-k}}}{\Gamma [\rho -k\rho ]}+O(|x{|^{-m-1}}).\]

However, since $\frac{1}{\Gamma (-n)}=0$ for every integer $n\ge 0$, the term with $k=1$ (for which $\rho -k\rho =0$) vanishes, and the previous estimate becomes, for any $m\ge 1$,

We are thus led to the following global estimate for the behaviour of the two-parametric Mittag-Leffler function ${E_{\rho ,\rho }}(-x)$:

for some constant ${M_{2}}$ depending only on ρ.

2.2 A generalized Wright–Fox type function

In this paper, we will encounter the following special function,

\[ {G_{\rho }^{\mu }}(z)={\sum \limits_{k=0}^{\infty }}{a_{k}}{z^{k}}={\sum \limits_{k=0}^{\infty }}\frac{{\left(\mu \right)_{k}}}{\Gamma (k\rho +1)}{z^{k}},\]

where ${(\mu )_{n}}=\frac{\Gamma (\mu +n)}{\Gamma (\mu )}$ is the Pochhammer symbol. This function has some similarities to the Mittag-Leffler function, and in fact both are special cases of the generalized Wright–Fox type function, defined as

\[ {_{p}^{}}{\Psi _{q}}\left[\begin{array}{c}({a_{1}},{A_{1}}),\dots ,({a_{p}},{A_{p}})\\ {} ({b_{1}},{B_{1}}),\dots ,({b_{q}},{B_{q}})\end{array};z\right]={\sum \limits_{k=0}^{\infty }}\frac{{\textstyle\textstyle\prod _{i=1}^{p}}\Gamma ({a_{i}}+{A_{i}}k)}{{\textstyle\textstyle\prod _{j=1}^{q}}\Gamma ({b_{j}}+{B_{j}}k)}\frac{{z^{k}}}{k!},\]

where the series converges, with ${a_{i}},{b_{j}}\in \mathbb{C}$ and ${A_{i}},{B_{j}}\in \mathbb{R}$, see [14]. We recover both the two-parametric Mittag-Leffler function ${E_{\rho ,\tau }}(z)$ and ${G_{\rho }^{\mu }}(z)$ in ${_{p}^{}}{\Psi _{q}}$ with the following choices:

In the next lemma, we prove that ${G_{\rho }^{\mu }}(z)$ is well defined and that it is an entire function, as was ${E_{\rho }}$.

Lemma 2.

Assume that $\rho >1$. Then the function ${G_{\rho }^{\mu }}(z)$ is an entire function of the variable $z\in \mathbb{C}$ of order $\omega =\frac{1}{\rho -1}$.

Proof.

Since ${G_{\rho }^{\mu }}$ is defined in terms of a power series, to prove that it is an entire function it is sufficient to study the radius of convergence. By the ratio test, it is sufficient to study the behaviour of

\[\begin{aligned}{}R& =\underset{n\to \infty }{\lim }\frac{{a_{n}}}{{a_{n+1}}}\\ {} & =\underset{n\to \infty }{\lim }\frac{\Gamma (\mu )\Gamma (\rho n+\rho +1)}{\Gamma (\mu +n+1)}\frac{\Gamma (\mu +n)}{\Gamma (\mu )\Gamma (\rho n+1)}=\underset{n\to \infty }{\lim }\frac{1}{\mu +n}\frac{\Gamma (\rho n+\rho +1)}{\Gamma (\rho n+1)}.\end{aligned}\]

Stirling’s series can be used to find the asymptotic behaviour of the last ratio [19]:

\[ \frac{\Gamma (\rho n+1)}{\Gamma (\rho n+\rho +1)}={(\rho n)^{-\rho }}\left(1+O({n^{-1}})\right),\]

therefore, by taking the limit

\[ R=\underset{n\to \infty }{\lim }\frac{{a_{n}}}{{a_{n+1}}}=\underset{n\to \infty }{\lim }\frac{{n^{\rho }}}{\mu +n},\]

we see that R is infinite provided $\rho >1$.

By using Stirling’s approximation again, it is possible to compute the order ω of the entire function ${G_{\rho }^{\mu }}(z)$. Recall that ω is the infimum of all κ such that

\[ \underset{|z|=r}{\max }|{G_{\rho }^{\mu }}(z)|<\exp ({r^{\kappa }})\]

eventually for $r\to \infty $. For the entire function ${G_{\rho }^{\mu }}(z)=\textstyle\sum {a_{k}}{z^{k}}$, the order is given by the formula

\[ \omega =\underset{k\to \infty }{\limsup }\frac{k\log k}{\log (1/|{a_{k}}|)};\]

for large k, we have

\[\begin{aligned}{}\frac{1}{{a_{k}}}\approx & \frac{{e^{-k\rho }}{(k\rho )^{k\rho +1/2}}}{{e^{-k}}{(k)^{k+\frac{1}{2}+\mu }}},\\ {} \log (1/{a_{k}})\approx & (\rho -1)k\log (k)+(1-\rho )k+(k\rho +1/2)\log (\rho )-\mu \log (k),\end{aligned}\]

which implies $\omega =\frac{1}{\rho -1}$. □

Remark 1.

Further properties of the function ${G_{\rho }^{\mu }}$ will be proved in the sequel. In particular, as a corollary of the computation in Lemma 7, we obtain the following asymptotic behaviour for large x:

\[ \left|{G_{\rho }^{\mu }}(-x)\right|\le C\left(\frac{1}{x}+\frac{1}{{x^{\mu }}}\right),\hspace{2em}x\to \infty ,\tag{14}\]

for $\mu \ne 1$. A plot of the restriction of ${G_{\rho }^{\mu }}$ to the negative real numbers can be seen in Figure 2.

Fig. 2.

The graph of the function ${G_{\rho }^{\mu }}(-x)$ with parameters $\mu =4$ and $\rho =1.9$. Plotted with Mathematica [20]
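The power series of ${G_{\rho }^{\mu }}$ can be evaluated in the same spirit. The Python sketch below (helper names are hypothetical) computes $\log {a_{k}}$ through log-Gamma values, checks that for ρ = 1 the series reduces to the binomial series ${(1-z)^{-\mu }}$, which converges only for $|z|<1$ (so ρ = 1 does not give an entire function), and prints the ratio ${a_{n}}/{a_{n+1}}$ from the proof of Lemma 2, which tends to 1 for ρ = 1 and grows without bound for ρ = 1.9. As for the Mittag-Leffler series, the truncated sum is only reliable for moderate |z|.

```python
import math


def log_coeff(k: int, rho: float, mu: float) -> float:
    """log a_k, where a_k = (mu)_k / Gamma(k*rho + 1) and (mu)_k = Gamma(mu + k) / Gamma(mu)."""
    return math.lgamma(mu + k) - math.lgamma(mu) - math.lgamma(k * rho + 1.0)


def wright_fox_g(rho: float, mu: float, z: float, n_terms: int = 400) -> float:
    """Truncated power series G_rho^mu(z) = sum_{k < n_terms} a_k z^k for real z (sketch)."""
    if z == 0.0:
        return 1.0
    log_abs_z = math.log(abs(z))
    sign = -1.0 if z < 0 else 1.0
    return math.fsum(sign ** k * math.exp(log_coeff(k, rho, mu) + k * log_abs_z)
                     for k in range(n_terms))


def coeff_ratio(n: int, rho: float, mu: float) -> float:
    """a_n / a_{n+1}, the quantity studied with the ratio test in Lemma 2."""
    return math.exp(log_coeff(n, rho, mu) - log_coeff(n + 1, rho, mu))


if __name__ == "__main__":
    # rho = 1: binomial series, equal to (1 - z)^(-mu) for |z| < 1
    print(wright_fox_g(1.0, 2.0, -0.5), 1.5 ** -2.0)
    # the ratio tends to 1 for rho = 1 and grows like (rho*n)^rho / n for rho = 1.9
    for n in (10, 100, 1000):
        print(n, coeff_ratio(n, 1.0, 2.0), coeff_ratio(n, 1.9, 2.0))
    print(wright_fox_g(1.9, 4.0, -5.0))  # G_{1.9}^4(-5), compare Figure 2
```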

3 The empirical mean process

We consider in this section the solution of the fractional evolution equation (3) and the properties of the empirical mean process ${Y_{n}}(t)$ defined in (2).

The relation between the Mittag-Leffler function and the Riemann–Liouville fractional derivative is specified through the following identity [12, (3.7.44)]

\[ {E_{\rho }}(\lambda {t^{\rho }})-\lambda {\int _{0}^{t}}{E_{\rho }}(\lambda {s^{\rho }}){g_{\rho }}(t-s)\hspace{0.1667em}\mathrm{d}s=1.\]

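By comparing it, with $\lambda =-\alpha $, with the definition of the scalar resolvent function ${s_{\alpha }}(t)$ in (4), we obtain the relation

\[ {s_{\alpha }}(t)={E_{\rho }}(-\alpha {t^{\rho }}),\hspace{2em}t\ge 0.\tag{15}\]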

For the sake of completeness, let us recall the moments of the Gamma distribution.

Proposition 1.

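Assume that α is a random variable on the probability space $(\bar{\Omega },\bar{\mathcal{F}},\bar{\mathbb{P}})$ with a Gamma distribution of parameters μ and λ, i.e. α has the density

\[ {f_{\alpha }}(x)=\frac{\lambda {(\lambda x)^{\mu -1}}{e^{-\lambda x}}}{\Gamma (\mu )},\hspace{2em}x>0.\]

Then the moments of α are given by

\[ \bar{\mathbb{E}}[{\alpha ^{k}}]=\frac{\Gamma (\mu +k)}{{\lambda ^{k}}\Gamma (\mu )}=\frac{{(\mu )_{k}}}{{\lambda ^{k}}},\hspace{2em}k\in \mathbb{N}.\tag{16}\]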
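The moment formula can be checked numerically, also for the non-integer exponents $p=1/\rho $ and $p=2/\rho $ that appear in Section 3. In the Python sketch below (function names and parameter values are illustrative choices), the closed form $\Gamma (\mu +p)/({\lambda ^{p}}\Gamma (\mu ))$, valid for every real $p>-\mu $, is compared with a midpoint-rule quadrature of ${\int _{0}^{\infty }}{x^{p}}\lambda {(\lambda x)^{\mu -1}}{e^{-\lambda x}}/\Gamma (\mu )\hspace{0.1667em}\mathrm{d}x$, truncated at a large upper limit. Here λ acts as a rate, so the mean of α is μ/λ.

```python
import math


def gamma_density(x: float, mu: float, lam: float) -> float:
    """Gamma density lam * (lam*x)^(mu-1) * exp(-lam*x) / Gamma(mu), x > 0 (lam is a rate)."""
    return lam * (lam * x) ** (mu - 1.0) * math.exp(-lam * x) / math.gamma(mu)


def gamma_moment(p: float, mu: float, lam: float) -> float:
    """Closed form E[alpha^p] = Gamma(mu + p) / (lam^p * Gamma(mu)), valid for real p > -mu."""
    return math.exp(math.lgamma(mu + p) - math.lgamma(mu) - p * math.log(lam))


def moment_by_quadrature(p: float, mu: float, lam: float,
                         upper: float = 60.0, n: int = 100_000) -> float:
    """Midpoint rule for int_0^upper x^p * density(x) dx; upper must be large relative to mu/lam."""
    h = upper / n
    return h * math.fsum(((i + 0.5) * h) ** p * gamma_density((i + 0.5) * h, mu, lam)
                         for i in range(n))


if __name__ == "__main__":
    mu, lam, rho = 2.5, 1.5, 1.5
    for p in (1.0, 2.0, 1.0 / rho, 2.0 / rho):
        print(p, gamma_moment(p, mu, lam), moment_by_quadrature(p, mu, lam))
```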

We apply this proposition to compute the expected value (with respect to the measure $\bar{\mathbb{P}}$) of the random process ${s_{\alpha }}(t)$.

Lemma 3.

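Assume that α has a Gamma distribution of parameters μ and λ on the probability space $(\bar{\Omega },\bar{\mathcal{F}},\bar{\mathbb{P}})$. Then the expected value of ${s_{\alpha }}(t)$ satisfies

\[ \bar{\mathbb{E}}[{s_{\alpha }}(t)]={G_{\rho }^{\mu }}\left(-\frac{{t^{\rho }}}{\lambda }\right),\hspace{2em}t\ge 0.\]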

Proof.

Let us consider the representation of the scalar resolvent kernel ${s_{\alpha }}(t)$ in terms of the Mittag-Leffler function ${E_{\rho }}(x)$ given in (15) and the definition of the Mittag-Leffler function as an entire function given in (9):

\[ \bar{\mathbb{E}}[{s_{\alpha }}(t)]=\bar{\mathbb{E}}\left[{\sum \limits_{k=0}^{\infty }}\frac{{(-1)^{k}}}{\Gamma (k\rho +1)}{\alpha ^{k}}{t^{k\rho }}\right].\]

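Since the series of absolute values has finite expectation, namely ${\textstyle\sum _{k}}\frac{\bar{\mathbb{E}}[{\alpha ^{k}}]{t^{k\rho }}}{\Gamma (k\rho +1)}={G_{\rho }^{\mu }}({t^{\rho }}/\lambda )<\infty $ by (16) and Lemma 2, an application of Fubini’s theorem gives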

\[ \bar{\mathbb{E}}[{s_{\alpha }}(t)]={\sum \limits_{k=0}^{\infty }}\frac{{(-1)^{k}}}{\Gamma (k\rho +1)}\bar{\mathbb{E}}\left[{\alpha ^{k}}\right]{t^{k\rho }},\]

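and by (16) we obtain

\[ \bar{\mathbb{E}}[{s_{\alpha }}(t)]={\sum \limits_{k=0}^{\infty }}\frac{{(\mu )_{k}}}{\Gamma (k\rho +1)}{\left(-\frac{{t^{\rho }}}{\lambda }\right)^{k}}={G_{\rho }^{\mu }}\left(-\frac{{t^{\rho }}}{\lambda }\right).\]

□

3.1 The limit of the empirical mean process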

In this section, we will study the asymptotic behaviour of the empirical mean process

\[ {Y_{n}}(t)={\int _{0}^{t}}\frac{1}{n}{\sum \limits_{k=1}^{n}}{s_{{\alpha _{k}}}}(t-\tau )\hspace{0.1667em}\mathrm{d}W(\tau ).\]

By the law of large numbers

\[ \frac{1}{n}{\sum \limits_{k=1}^{n}}{s_{{\alpha _{k}}}}(t)\longrightarrow \bar{\mathbb{E}}[{s_{\alpha }}(t)],\hspace{2em}t>0,\]

hence a natural candidate for the limit process of ${Y_{n}}(t)$ is given by

\[ Y(t)={\int _{0}^{t}}{G_{\rho }^{\mu }}\left(-\frac{{(t-s)^{\rho }}}{\lambda }\right)\hspace{0.1667em}\mathrm{d}W(s).\]
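The law-of-large-numbers heuristic, and the quantity controlled in Theorem 1, can be illustrated numerically. By the Itô isometry, $\mathbb{E}|{Y_{n}}(t)-Y(t){|^{2}}={\int _{0}^{t}}{({f_{n}}(u)-f(u))^{2}}\hspace{0.1667em}\mathrm{d}u$ with ${f_{n}}=\frac{1}{n}{\textstyle\sum _{k=1}^{n}}{s_{{\alpha _{k}}}}$ and $f(u)={G_{\rho }^{\mu }}(-{u^{\rho }}/\lambda )$. The Python sketch below is an illustration under stated assumptions: the parameter values are arbitrary, the ${\alpha _{k}}$ are drawn with `random.gammavariate(mu, 1/lam)` (shape μ, scale 1/λ, i.e. rate λ), both special functions are summed from their series truncated after 60 terms (adequate here only because $t\le T=1$ keeps the arguments moderate), and the integral is a midpoint rule.

```python
import math
import random


def series(log_coeff, z: float, n_terms: int = 60) -> float:
    """sum_{k < n_terms} exp(log_coeff(k)) * z^k for real z, with coefficients given in log form."""
    if z == 0.0:
        return math.exp(log_coeff(0))
    sign, log_abs_z = (-1.0 if z < 0 else 1.0), math.log(abs(z))
    return math.fsum(sign ** k * math.exp(log_coeff(k) + k * log_abs_z) for k in range(n_terms))


def s_alpha(t: float, alpha: float, rho: float) -> float:
    """Scalar resolvent s_alpha(t) = E_rho(-alpha * t^rho)."""
    return series(lambda k: -math.lgamma(rho * k + 1.0), -alpha * t ** rho)


def g_limit(t: float, rho: float, mu: float, lam: float) -> float:
    """Candidate limit kernel f(t) = G_rho^mu(-t^rho / lam)."""
    return series(lambda k: math.lgamma(mu + k) - math.lgamma(mu) - math.lgamma(rho * k + 1.0),
                  -t ** rho / lam)


def l2_gap(n: int, rho: float, mu: float, lam: float,
           T: float = 1.0, grid: int = 100, seed: int = 0):
    """For one draw of alpha_1..alpha_n, return (max |f_n - f| on the grid, int_0^T (f_n - f)^2 du)."""
    rng = random.Random(seed)
    # random.gammavariate(shape, scale): scale 1/lam gives a Gamma law with rate lam
    alphas = [rng.gammavariate(mu, 1.0 / lam) for _ in range(n)]
    h = T / grid
    sup_gap, sq_int = 0.0, 0.0
    for i in range(grid):
        u = (i + 0.5) * h  # midpoint rule in u
        f_n = math.fsum(s_alpha(u, a, rho) for a in alphas) / n
        diff = f_n - g_limit(u, rho, mu, lam)
        sup_gap = max(sup_gap, abs(diff))
        sq_int += diff * diff * h
    return sup_gap, sq_int


if __name__ == "__main__":
    for n in (10, 50, 200):
        print(n, l2_gap(n, rho=1.5, mu=2.0, lam=1.0))
```

Since $|{s_{\alpha }}|$ is bounded, the expected value of the squared gap is of order 1/n for a single draw, which is what the printed values should roughly reflect.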

Theorem 1.

The sequence ${Y_{n}}(t)$ converges in ${L^{2}}(\Omega ,\mathbb{P})$, uniformly in $[0,T]$, to $Y(t)$, $\bar{\mathbb{P}}$-a.s.

Proof.

In order to prove the convergence of ${Y_{n}}$ to Y, we first notice that

satisfies, by estimate (11),

therefore, the same applies to the function ${G_{\rho }^{\mu }}(-{t^{\rho }}/\lambda )$, and $Y(t)$ is a Gaussian process.

Moreover, since the function ${s_{\alpha }}(t)$ is continuous and bounded for $\alpha \in {\mathbb{R}_{+}}$ and $t\in [0,T]$, for arbitrary $T>0$, the uniform law of large numbers [16, Lemma 2.4] implies that ${G_{\rho }^{\mu }}(-{t^{\rho }}/\lambda )$ is continuous and bounded in $t\in [0,T]$ and

It follows from the dominated convergence theorem that

□

3.2 Weak convergence of the empirical mean process in $C([0,T])$

We have proved before that ${Y_{n}}(t)$ and $Y(t)$ are, $\bar{\mathbb{P}}$-a.s., Gaussian, continuous stochastic processes on $(\Omega ,\mathcal{F},\mathbb{P})$.

In the remaining part of this section, the whole construction is understood to hold $\bar{\mathbb{P}}$-a.s.; that is, we regard the sequence $({\alpha _{k}})$ as given and work in the space $(\Omega ,\mathcal{F},\mathbb{P})$. We consider the $\mathbb{P}$-weak convergence of ${Y_{n}}$ to Y in the space $C([0,T])$:

\[\begin{array}{c}\displaystyle {Y_{n}}\Rightarrow Y\hspace{2.5pt}\text{if and only if}\hspace{2.5pt}\mathbb{E}[\phi ({Y_{n}})]\to \mathbb{E}[\phi (Y)]\hspace{2.5pt}\\ {} \displaystyle \text{for every bounded continuous}\hspace{2.5pt}\phi :C([0,T])\to \mathbb{R}\text{.}\end{array}\]

Theorem 2.

The sequence ${Y_{n}}$ $\mathbb{P}$-weakly converges to the process Y in the space $C([0,T])$, $\bar{\mathbb{P}}$-almost surely.

By [5, Theorems 12.3 and 8.1], weak convergence of ${Y_{n}}$ to Y follows if we prove that the following two conditions hold:

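(1) convergence of the finite dimensional distributions, and

(2) the moment condition

\[ \mathbb{E}[|{Y_{n}}(t)-{Y_{n}}(s){|^{\kappa }}]\le C(\kappa ,\delta )|t-s{|^{1+\delta }},\hspace{2em}s,t\in [0,T],\tag{17}\]

for some κ, δ > 0, where $C(\kappa ,\delta )$ depends on the constants κ and δ only, compare [5, formula (12.51)].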

We shall prove each of the above conditions in the following two lemmas, which together imply the claim of the theorem.

Lemma 4.

For any $k\in \mathbb{N}$, ${t_{1}},\dots ,{t_{k}}\in [0,T]$, ${x_{1}},\dots ,{x_{k}}\in \mathbb{R}$, it holds that $\mathbb{P}({Y_{n}}({t_{1}})\le {x_{1}},\dots ,{Y_{n}}({t_{k}})\le {x_{k}})$ converges to $\mathbb{P}(Y({t_{1}})\le {x_{1}},\dots ,Y({t_{k}})\le {x_{k}})$ as $n\to \infty $.

Proof.

Both ${Y_{n}}$ and Y are Gaussian processes, so their finite dimensional distributions are fully determined by their mean and covariance. For any $k\in \mathbb{N}$ and ${t_{1}},\dots ,{t_{k}}\in [0,T]$, the mean and covariance of $({Y_{n}}({t_{1}}),\dots ,{Y_{n}}({t_{k}}))$ converge to the mean and covariance of $(Y({t_{1}}),\dots ,Y({t_{k}}))$, thanks to Theorem 1 and the pointwise (in t) convergence ${Y_{n}}(t)\to Y(t)$. □

Lemma 5.

The moment condition (17) holds, and the family of empirical mean processes ${\{{Y_{n}}\}_{n}}$ is tight.

Proof.

First, we notice that by exploiting the Gaussianity of ${Y_{n}}$, it suffices to find an estimate for $\kappa =2$. Further, we can reduce the problem to the estimate of a single ${X_{k}}$ since, by the convexity of $x\mapsto {x^{2}}$,

\[ \mathbb{E}[|{Y_{n}}(t)-{Y_{n}}(s){|^{2}}]\le \frac{1}{n}{\sum \limits_{k=1}^{n}}\mathbb{E}[|{X_{k}}(t)-{X_{k}}(s){|^{2}}].\]

Next, we write (for simplicity, we drop the index k in the following computation)

\[\begin{aligned}{}\mathbb{E}[|X(t)-& X(s){|^{2}}]\\ {} & =\mathbb{E}{\left|{\int _{0}^{s}}[{s_{\alpha }}(t-u)-{s_{\alpha }}(s-u)]\hspace{0.1667em}\mathrm{d}W(u)+{\int _{s}^{t}}{s_{\alpha }}(t-u)\hspace{0.1667em}\mathrm{d}W(u)\right|^{2}}\\ {} & \le 2{\int _{0}^{s}}{[{s_{\alpha }}(t-u)-{s_{\alpha }}(s-u)]^{2}}\hspace{0.1667em}\mathrm{d}u+2{\int _{s}^{t}}{[{s_{\alpha }}(t-u)]^{2}}\hspace{0.1667em}\mathrm{d}u\\ {} & \le 2{\int _{0}^{s}}{[{s_{\alpha }}(t-s+u)-{s_{\alpha }}(u)]^{2}}\hspace{0.1667em}\mathrm{d}u+2{\int _{0}^{t-s}}{[{s_{\alpha }}(u)]^{2}}\hspace{0.1667em}\mathrm{d}u.\end{aligned}\]

Recall that, by estimate (11), $|{s_{\alpha }}(t)|=|{E_{\rho }}(-\alpha {t^{\rho }})|\le M$; hence the second term on the right-hand side of the previous estimate is bounded by $2{M^{2}}(t-s)$. As far as the first term is concerned, we get

\[\begin{aligned}{}{\int _{0}^{s}}[{s_{\alpha }}(t-& s+u)-{s_{\alpha }}{(u)]^{2}}\hspace{0.1667em}\mathrm{d}u\\ {} & ={\int _{0}^{s}}{[{E_{\rho }}(-\alpha {(t-s+u)^{\rho }})-{E_{\rho }}(-\alpha {u^{\rho }})]^{2}}\hspace{0.1667em}\mathrm{d}u\\ {} & \stackrel{[v={\alpha ^{1/\rho }}u]}{=}\hspace{2em}{\alpha ^{-1/\rho }}{\int _{0}^{{\alpha ^{1/\rho }}s}}{[{E_{\rho }}(-{({\alpha ^{1/\rho }}(t-s)+v)^{\rho }})-{E_{\rho }}(-{v^{\rho }})]^{2}}\hspace{0.1667em}\mathrm{d}v\\ {} & ={\alpha ^{-1/\rho }}{\int _{0}^{{\alpha ^{1/\rho }}s}}{[{s_{1}}({\alpha ^{1/\rho }}(t-s)+v)-{s_{1}}(v)]^{2}}\hspace{0.1667em}\mathrm{d}v\\ {} & ={\alpha ^{-1/\rho }}{\int _{0}^{{\alpha ^{1/\rho }}s}}{\left|{\int _{v}^{v+{\alpha ^{1/\rho }}(t-s)}}{s^{\prime }_{1}}(x)\hspace{0.1667em}\mathrm{d}x\right|^{2}}\hspace{0.1667em}\mathrm{d}v.\end{aligned}\]

The derivative of the scalar resolvent kernel can be written in terms of the two-parametric Mittag-Leffler function, see formula (12)

\[ {s^{\prime }_{1}}(x)=\frac{d}{dx}{E_{\rho }}(-{x^{\rho }})=-{x^{\rho -1}}{E_{\rho ,\rho }}(-{x^{\rho }}).\]

Recalling the bound (13) we see that

\[ \left|{s^{\prime }_{1}}(x)\right|\le {x^{\rho -1}}\frac{{M_{2}}}{1+{x^{\rho }}}\le \frac{{M_{3}}}{1+x},\]

for some constant ${M_{3}}$. In conclusion, we get

This implies

where the constant ${K_{n}}$ is given explicitly by

\[ {K_{n}}=2{M_{3}^{2}}{T^{2}}\left(\frac{1}{n}{\sum \limits_{k=1}^{n}}{({\alpha _{k}})^{2/\rho }}\right)+2{M^{2}}.\]

Notice that, $\bar{\mathbb{P}}$-almost surely,

\[ {K_{n}}{\xrightarrow[n\to \infty ]{}}\bar{K}=2{M_{3}^{2}}{T^{2}}\bar{\mathbb{E}}[{\alpha ^{2/\rho }}]+2{M^{2}}.\]

It follows that for some ${n_{0}}\in \mathbb{N}$, ${K_{n}}\le \bar{K}+1$ for any $n\ge {n_{0}}$. Set

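Since ${Y_{n}}(t)-{Y_{n}}(s)$ is a centred Gaussian random variable, and every centred Gaussian random variable Z satisfies $\mathbb{E}[|Z{|^{2m}}]=(2m-1)!!\hspace{0.1667em}{(\mathbb{E}[{Z^{2}}])^{m}}$, we conclude that, for every integer $m\ge 2$, $\mathbb{E}[|{Y_{n}}(t)-{Y_{n}}(s){|^{2m}}]\le C(2m,m-1)|t-s{|^{m}}$ with $C(2m,m-1)=(2m-1)!!\hspace{0.1667em}C{(2,0)^{m}}$; this is (17) with $\kappa =2m$ and $\delta =m-1$. □

3.3 Almost sure convergence of the empirical mean process in $C([0,T])$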

In this section, we consider the convergence of the paths of ${Y_{n}}$ to Y in $C([0,T])$ with respect to the uniform topology.

Our proof will follow from a somewhat more general result. Let ${f_{n}}$ be a sequence of smooth functions on $[0,T]$ that converges (at least pointwise) to f; we shall see later which further assumptions are required. Then we compute

\[\begin{aligned}{}({f_{n}}\ast & \dot{W})(t)-(f\ast \dot{W})(t)\\ {} & ={f_{n}}(t-s)W(s){\big|_{s=0}^{s=t}}+(W\ast {\dot{f}_{n}})(t)-f(t-s)W(s){\big|_{s=0}^{s=t}}-(W\ast \dot{f})(t)\\ {} & =[{f_{n}}(0)-f(0)]W(t)+(W\ast \dot{({f_{n}}-f)})(t).\end{aligned}\]

It follows that there exists a constant C finite $\mathbb{P}$-a.s. such that

Therefore, the following conditions are sufficient for the required convergence:

Then an application of Lebesgue’s dominated convergence theorem shows that the following lemma holds.

Lemma 6.

Under conditions 1. to 3., the stochastic convolution processes ${X_{n}}=({f_{n}}\ast \dot{W})$ converge in $C([0,T])$ (endowed with the uniform topology) to the process $X=(f\ast \dot{W})$.
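The integration by parts identity behind Lemma 6, $(f\ast \dot{W})(t)=f(0)W(t)+(W\ast \dot{f})(t)$, can be checked on a simulated Brownian path. In the Python sketch below, the kernel $f(u)={e^{-2u}}\cos (3u)$ is a hypothetical smooth choice made only for illustration; the left-hand side is approximated by a left-point (Itô) Riemann sum and the right-hand side by a Riemann sum for the Lebesgue integral, so the two agree up to a discretization error that shrinks with the step size.

```python
import math
import random


def f(u: float) -> float:
    """Hypothetical smooth kernel, chosen only for illustration."""
    return math.exp(-2.0 * u) * math.cos(3.0 * u)


def f_dot(u: float) -> float:
    """Derivative of the kernel f above."""
    return math.exp(-2.0 * u) * (-2.0 * math.cos(3.0 * u) - 3.0 * math.sin(3.0 * u))


def compare_forms(t: float = 1.0, steps: int = 20_000, seed: int = 1):
    """Return (Ito sum for int_0^t f(t-s) dW(s), Riemann sum for f(0) W(t) + int_0^t W(s) f'(t-s) ds)."""
    rng = random.Random(seed)
    h = t / steps
    w = [0.0]  # Brownian path on the grid s_j = j*h, with W(0) = 0
    for _ in range(steps):
        w.append(w[-1] + rng.gauss(0.0, math.sqrt(h)))
    ito = math.fsum(f(t - j * h) * (w[j + 1] - w[j]) for j in range(steps))
    by_parts = f(0.0) * w[-1] + h * math.fsum(w[j] * f_dot(t - j * h) for j in range(steps))
    return ito, by_parts


if __name__ == "__main__":
    for steps in (200, 2_000, 20_000):
        print(steps, compare_forms(steps=steps))
```

The right-hand side involves W only through $W(t)$ and a Lebesgue integral, which is what makes a pathwise, uniform-in-time estimate of the kind used in Lemma 6 possible.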

We are now ready to prove the main result of this section.

Proof of Theorem 3.

With the notation

\[ {f_{n}}(t)=\frac{1}{n}{\sum \limits_{k=1}^{n}}{s_{{\alpha _{k}}}}(t),\hspace{2em}f(t)={G_{\rho }^{\mu }}(-{t^{\rho }}/\lambda ),\]

introduced above, we see that

\[ \dot{{f_{n}}}(t)=\frac{1}{n}{\sum \limits_{k=1}^{n}}\frac{\mathrm{d}}{\mathrm{d}t}{s_{{\alpha _{k}}}}(t),\]

and by using the identity ${s_{\alpha }}(t)={E_{\rho }}(-\alpha {t^{\rho }})$ and formula (12) we compute

\[ \frac{d}{dt}{E_{\rho }}(-\alpha {t^{\rho }})=-\alpha {t^{\rho -1}}{E_{\rho ,\rho }}(-\alpha {t^{\rho }}),\]

where ${E_{\rho ,\rho }}(x)$ is the two-parametric Mittag-Leffler function; recalling the bound (13), we get

\[ \left|\frac{d}{dt}{E_{\rho }}(-\alpha {t^{\rho }})\right|\le \alpha {t^{\rho -1}}\frac{{M_{2}}}{1+\alpha {t^{\rho }}},\]

which implies the bound

\[ \left|\frac{d}{dt}{E_{\rho }}(-\alpha {t^{\rho }})\right|\le {M_{2}}{\alpha ^{1/\rho }}\underset{\le 1}{\underbrace{\underset{x>0}{\sup }\frac{{x^{\rho -1}}}{1+{x^{\rho }}}}}.\]

Since α has a Gamma distribution of parameters μ and λ, we compute

\[\begin{aligned}{}\bar{\mathbb{E}}[{\alpha ^{1/\rho }}]& =\frac{1}{\Gamma (\mu )}{\int _{0}^{\infty }}{x^{1/\rho }}\lambda {e^{-\lambda x}}{\left(\lambda x\right)^{\mu -1}}\hspace{0.1667em}\mathrm{d}x\\ {} & =\frac{1}{{\lambda ^{1/\rho }}\Gamma (\mu )}{\int _{0}^{\infty }}\lambda {e^{-\lambda x}}{\left(\lambda x\right)^{\mu +1/\rho -1}}\hspace{0.1667em}\mathrm{d}x=\frac{\Gamma (\mu +1/\rho )}{{\lambda ^{1/\rho }}\Gamma (\mu )}.\end{aligned}\]

Therefore, we get

\[ \underset{t\in [0,T]}{\sup }\left|\dot{{f_{n}}}(t)\right|\le {M_{2}}\hspace{0.1667em}\frac{1}{n}{\sum \limits_{k=1}^{n}}{\left({\alpha _{k}}\right)^{1/\rho }};\]

by the law of large numbers in the space $(\bar{\Omega },\bar{\mathbb{P}})$ we obtain that

\[ \frac{1}{n}{\sum \limits_{k=1}^{n}}{\left({\alpha _{k}}\right)^{1/\rho }}\to \bar{\mathbb{E}}[{\alpha ^{1/\rho }}]<\infty ,\]

hence there exists a constant $C=C(\bar{\omega })$, finite $\bar{\mathbb{P}}$-almost surely, such that

By another application of the law of large numbers we get that the sequence

\[ \dot{{f_{n}}}(t)=\frac{1}{n}{\sum \limits_{k=1}^{n}}\frac{\mathrm{d}}{\mathrm{d}t}{s_{{\alpha _{k}}}}(t)\]

converges to ${G_{\rho ,\rho }^{\mu }}(t)=\bar{\mathbb{E}}\left[-\alpha {t^{\rho -1}}{E_{\rho ,\rho }}(-\alpha {t^{\rho }})\right]$. Since ${G_{\rho }^{\mu }}(-{t^{\rho }}/\lambda )=\bar{\mathbb{E}}\left[{E_{\rho }}(-\alpha {t^{\rho }})\right]$, taking the derivative inside the expectation (which is allowed since the derivative is dominated by ${M_{2}}{\alpha ^{1/\rho }}$ and $\bar{\mathbb{E}}[{\alpha ^{1/\rho }}]<\infty $) we obtain that

that is, the pointwise convergence required in condition 2. □

4 Asymptotic behaviour and stationary solutions

In this section we discuss the asymptotic behaviour as $t\to \infty $ of the stochastic process $Y(t)$ defined in (7). We already know that $Y(t)$ is a Gaussian process with zero mean and variance given by

\[ {\sigma _{t}^{2}}={\int _{0}^{t}}{\left|{G_{\rho }^{\mu }}(-{u^{\rho }}/\lambda )\right|^{2}}\hspace{0.1667em}\mathrm{d}u={\int _{0}^{t}}{\left(\bar{\mathbb{E}}[{s_{\alpha }}(u)]\right)^{2}}\hspace{0.1667em}\mathrm{d}u.\]

We are interested in the convergence of the laws $\mathcal{L}(Y(t))$ as $t\to \infty $.

Remark 2.

By a direct computation, we see that the random variable ${\alpha ^{-1/\rho }}$ belongs to ${L^{2}}(\bar{\Omega },\bar{\mathbb{P}})$ if and only if $\mu -2\rho >0$, i.e. condition (18) holds.

Theorem 4.

Assume that α has a Gamma distribution with parameters $\mu >\frac{1}{2\rho }$ and $\lambda >0$. There exists a Gaussian random variable η such that $Y(t)$ converges in law to η as $t\to \infty $.

We first extend the Wiener process $W(t)$ to a process with time $t\in \mathbb{R}$. Let $\tilde{W}(t)$ be a standard Brownian motion on the space $(\Omega ,\mathcal{F},\mathbb{P})$, independent of $W(t)$, and set $W(-t)=\tilde{W}(t)$ for $t\ge 0$.

Now, for any $s>0$, define the stochastic convolution process

\[ {Y_{-s}}(t)={\int _{-s}^{t}}\bar{\mathbb{E}}[{s_{\alpha }}(t-u)]\hspace{0.1667em}\mathrm{d}W(u).\]

Since the increments of W are stationary, it is easily seen that $\mathcal{L}({Y_{-s}}(0))=\mathcal{L}(Y(s))$ for $s\ge 0$. In the next lemma we prove that $\{{Y_{-s}}(0)\}$ is a Cauchy family in the space ${L^{2}}(\Omega ,\mathbb{P})$ as $s\to \infty $.

Lemma 7.

Under our assumptions, for any $\varepsilon >0$ there exists $T>0$ such that, for any $t>s>T$, $\mathbb{E}{\left|{Y_{-t}}(0)-{Y_{-s}}(0)\right|^{2}}\le \varepsilon $.

Proof.

Recall that

is a centred Gaussian process, where ${s_{\alpha }}(t)={E_{\rho }}(-\alpha {t^{\rho }})$ satisfies the bound

In order to prove the mean square convergence of ${Y_{-t}}(0)$ as $t\to \infty $, we need to prove that the quantity $\mathbb{E}[{\left|{Y_{-t}}(0)-{Y_{-s}}(0)\right|^{2}}]$ is bounded by a quantity that goes to 0 as $T\to \infty $, for $t>s>T$.

We estimate

\[\begin{array}{c}\displaystyle \mathbb{E}[{\left|{Y_{-t}}(0)-{Y_{-s}}(0)\right|^{2}}]\\ {} \displaystyle ={\int _{-t}^{-s}}{\left|\bar{\mathbb{E}}[{s_{\alpha }}(-u)]\right|^{2}}\hspace{0.1667em}\mathrm{d}u\le {\int _{s}^{t}}{\left(\bar{\mathbb{E}}\left|{s_{\alpha }}(u)\right|\right)^{2}}\hspace{0.1667em}\mathrm{d}u\le {\int _{s}^{t}}{\left(\bar{\mathbb{E}}\left|\frac{M}{1+\alpha {u^{\rho }}}\right|\right)^{2}}\hspace{0.1667em}\mathrm{d}u.\end{array}\]

Since α has a Gamma distribution with parameters μ and λ, we have

\[\begin{aligned}{}\mathbb{E}[|& {Y_{-t}}(0)-{Y_{-s}}(0){|^{2}}]\\ {} & \le {\int _{s}^{t}}{\left(\frac{1}{\Gamma (\mu )}{\int _{0}^{\infty }}\frac{M}{1+y{u^{\rho }}}\lambda {(\lambda y)^{\mu -1}}{e^{-\lambda y}}\hspace{0.1667em}\mathrm{d}y\right)^{2}}\hspace{0.1667em}\mathrm{d}u\\ {} & \stackrel{[z=\lambda y]}{=}\hspace{2.5pt}{\int _{s}^{t}}{\left(\frac{1}{\Gamma (\mu )}{\int _{0}^{\infty }}\frac{M}{1+z\frac{{u^{\rho }}}{\lambda }}{z^{\mu -1}}{e^{-z}}\hspace{0.1667em}\mathrm{d}z\right)^{2}}\hspace{0.1667em}\mathrm{d}u\\ {} & \le \frac{{M^{2}}}{\Gamma {(\mu )^{2}}}{\int _{s}^{t}}{\left({\int _{0}^{1}}\frac{1}{1+z\frac{{u^{\rho }}}{\lambda }}{z^{\mu -1}}{e^{-z}}\hspace{0.1667em}\mathrm{d}z+{\int _{1}^{\infty }}\frac{1}{1+z\frac{{u^{\rho }}}{\lambda }}{z^{\mu -1}}{e^{-z}}\hspace{0.1667em}\mathrm{d}z\right)^{2}}\hspace{0.1667em}\mathrm{d}u\\ {} & \le \frac{{M^{2}}}{\Gamma {(\mu )^{2}}}{\int _{s}^{t}}{\left({\int _{0}^{1}}\frac{1}{1+z\frac{{u^{\rho }}}{\lambda }}{z^{\mu -1}}\hspace{0.1667em}\mathrm{d}z+{\int _{1}^{\infty }}\frac{1}{\frac{{u^{\rho }}}{\lambda }}{z^{\mu -1}}{e^{-z}}\hspace{0.1667em}\mathrm{d}z\right)^{2}}\hspace{0.1667em}\mathrm{d}u\\ {} & \le \frac{{M^{2}}}{\Gamma {(\mu )^{2}}}{\int _{s}^{t}}{\left(\frac{1}{\mu }{F_{2,1}}(1,\mu ,1+\mu ,-\frac{{u^{\rho }}}{\lambda })+\Gamma (\mu )\frac{\lambda }{{u^{\rho }}}\right)^{2}}\hspace{0.1667em}\mathrm{d}u,\end{aligned}\]

where ${F_{2,1}}$ is the Gauss hypergeometric function; notice that, for large u, we have

\[ {F_{2,1}}(1,\mu ,1+\mu ,-\frac{{u^{\rho }}}{\lambda })\sim \left\{\begin{array}{l@{\hskip10.0pt}l}{c_{\lambda ,\mu }}{u^{-\mu \rho }},\hspace{2em}\hspace{1em}& 0<\mu <1,\\ {} {c_{\lambda ,1}}\frac{\log (u)}{{u^{\rho }}},\hspace{2em}\hspace{1em}& \mu =1,\\ {} {c_{\lambda ,\mu }}{u^{-\rho }},\hspace{2em}\hspace{1em}& \mu >1,\end{array}\right.\]

hence

\[\begin{array}{c}\displaystyle \mathbb{E}[{\left|{Y_{-t}}(0)-{Y_{-s}}(0)\right|^{2}}]\\ {} \displaystyle \le {C_{M,\mu ,\lambda ,\rho }}\left({\int _{s}^{t}}\left\{{u^{-2\rho }}{\mathbb{1}_{\mu >1}}+\frac{\log {(u)^{2}}}{{u^{2\rho }}}{\mathbb{1}_{\mu =1}}+{u^{-2\mu \rho }}{\mathbb{1}_{0<\mu <1}}\right\}\hspace{0.1667em}\mathrm{d}u\right)\end{array}\]

and the right-hand side is bounded, for every $\mu >\frac{1}{2\rho }$ and every $t>s>T$, by $C\hspace{0.1667em}{T^{1-2\rho (\mu \wedge 1)}}$ (for $\mu =1$, by $C\hspace{0.1667em}{(1+\log (T))^{2}}{T^{1-2\rho }}$); under the assumption $\mu >\frac{1}{2\rho }$, the exponent is always negative, hence the quantity $\mathbb{E}[{\left|{Y_{-t}}(0)-{Y_{-s}}(0)\right|^{2}}]$ can be made arbitrarily small by choosing T large enough. □

Proof of Theorem 4.

The result follows from the previous lemma. We have seen that $\{{Y_{-t}}(0)\}$ is a Cauchy sequence, hence it converges in ${L^{2}}(\Omega ,\mathbb{P})$ to some random variable η.

Now, a simple modification of the computations leading to Lemma 7 implies that

and the stability of the Gaussian distribution with respect to the convergence in law implies that η has a Gaussian distribution as well, with variance

\[ {\sigma ^{2}}=\underset{t>0}{\sup }\mathbb{E}\left[|{Y_{-t}}(0){|^{2}}\right]=\underset{t\to \infty }{\lim }\mathbb{E}\left[|{Y_{-t}}(0){|^{2}}\right].\]

But, as already stated above, $Y(t)$ has the same law as ${Y_{-t}}(0)$, and the claim follows. □

Let us consider the following modification of our setting. Let $W(t)$ be a two-sided Wiener process, and define

where, as opposed to (5), we are taking the integral over the infinite time interval $(-\infty ,t)$.

Proof.

The only point requiring some care is the finiteness of the variance. This follows from the computation in [6] (see in particular Remark 3.2 therein), where it is proved that

□

Theorem 5.

Assume that condition (18) holds. Then the process

\[ \eta (t)={\int _{-\infty }^{t}}{G_{\rho }^{\mu }}(-{(t-s)^{\rho }}/\lambda )\hspace{0.1667em}\mathrm{d}W(s)\tag{19}\]

is a stationary Gaussian process with $\mathcal{L}(\eta (t))=\mathcal{L}(\eta )\sim \mathcal{N}(0,{\sigma ^{2}})$, where η is the random variable defined in Theorem 4.

Theorem 6.

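Consider the empirical mean processes ${\eta _{n}}(t)$, defined by

\[ {\eta _{n}}(t)={\int _{-\infty }^{t}}\frac{1}{n}{\sum \limits_{k=1}^{n}}{s_{{\alpha _{k}}}}(t-s)\hspace{0.1667em}\mathrm{d}W(s).\]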

Then, for every $t\in \mathbb{R}$, the sequence ${\eta _{n}}(t)$ converges in ${L^{2}}(\Omega ,\mathbb{P})$ as $n\to \infty $ to the random variable $\eta (t)$ defined in (19), $\bar{\mathbb{P}}$-almost surely.

Before we provide the proof, let us recall a few known results about convergence of a sequence of Gaussian random variables (see, e.g., [1]).

Lemma 8.

Assume that $\{{X_{n}}\}$ is a sequence of Gaussian random variables converging in law to a random variable X. Then X is also a Gaussian random variable.

Assume that ${X_{n}}\sim \mathcal{N}({m_{n}},{\sigma _{n}^{2}})$ for every n and that ${m_{n}}\to m$ and ${\sigma _{n}^{2}}\to {\sigma ^{2}}$ as $n\to \infty $. Then ${X_{n}}$ converges in law to $X\sim \mathcal{N}(m,{\sigma ^{2}})$.

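Assume further that ${\sigma ^{2}}=0$, so that the limit $X=m$ is deterministic. Then the convergence also holds in probability and in ${L^{p}}$ for every $p>0$ (in particular in ${L^{2}}$), since ${X_{n}}-m=({m_{n}}-m)+{\sigma _{n}}Z$ with $Z\sim \mathcal{N}(0,1)$ and all absolute moments of Z are finite.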
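The last statement of Lemma 8 rests on the fact that Gaussian absolute moments are explicit: writing $X_n-m=(m_n-m)+\sigma_n Z$ with $Z\sim\mathcal N(0,1)$, one has $\mathbb E|Z|^p=2^{p/2}\Gamma(\frac{p+1}{2})/\sqrt{\pi}$ and $|a+b|^p\le c_p(|a|^p+|b|^p)$ with $c_p=\max(1,2^{p-1})$. The Python sketch below (hypothetical helper names) turns this into a numerical bound on $\mathbb E|X_n-m|^p$ that vanishes when $m_n\to m$ and $\sigma_n\to0$.

```python
import math


def abs_moment_std_normal(p: float) -> float:
    """E|Z|^p for Z ~ N(0, 1) and p > -1."""
    return 2.0 ** (p / 2.0) * math.gamma((p + 1.0) / 2.0) / math.sqrt(math.pi)


def lp_bound(mean_gap: float, sigma: float, p: float) -> float:
    """Upper bound on E|X - m|^p for X ~ N(m + mean_gap, sigma^2) and p > 0.

    Uses |a + b|^p <= c_p (|a|^p + |b|^p) with c_p = max(1, 2^(p-1)):
    subadditivity for p < 1, convexity of |.|^p for p >= 1.
    """
    c_p = max(1.0, 2.0 ** (p - 1.0))
    return c_p * (abs(mean_gap) ** p + sigma ** p * abs_moment_std_normal(p))


if __name__ == "__main__":
    # X_n ~ N(m + 1/n, 1/n^2): the L^p bound vanishes for every p > 0.
    for n in (1, 10, 100, 1000):
        print(n, [lp_bound(1.0 / n, 1.0 / n, p) for p in (0.5, 2.0, 6.0)])
```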

Proof of Theorem 6.

By definition and the Itô isometry, the difference $\eta (t)-{\eta _{n}}(t)$ has a Gaussian distribution with zero mean and variance

\[\begin{array}{c}\displaystyle {\delta _{n}^{2}}={\int _{-\infty }^{t}}{\left[{G_{\rho }^{\mu }}(-{(t-u)^{\rho }}/\lambda )-\frac{1}{n}{\sum \limits_{k=1}^{n}}{s_{{\alpha _{k}}}}(t-u)\right]^{2}}\hspace{0.1667em}\mathrm{d}u\\ {} \displaystyle ={\int _{0}^{\infty }}{\left[\bar{\mathbb{E}}[{s_{\alpha }}(u)]-\frac{1}{n}{\sum \limits_{k=1}^{n}}{s_{{\alpha _{k}}}}(u)\right]^{2}}\hspace{0.1667em}\mathrm{d}u.\end{array}\]

Taking into account the results in Lemma 8, the claim of the theorem follows by proving that

\[ \underset{n\to \infty }{\lim }{\int _{0}^{\infty }}{\left|\frac{1}{n}{\sum \limits_{k=1}^{n}}{s_{{\alpha _{k}}}}(t)-\bar{\mathbb{E}}[{s_{\alpha }}(t)]\right|^{2}}\hspace{0.1667em}\mathrm{d}t=0\tag{20}\]

holds $\bar{\mathbb{P}}$-a.s.

As opposed to Theorem 1, here we deal with an infinite-horizon convergence problem. However, the uniform law of large numbers [16, Lemma 2.4] implies that for every $m\in \mathbb{N}$ there exists a negligible set ${N_{m}}\subset \bar{\Omega }$ such that the convergence

\[ \underset{t\in [m,m+1]}{\sup }\left|\frac{1}{n}{\sum \limits_{k=1}^{n}}{s_{{\alpha _{k}}}}(t)-\bar{\mathbb{E}}[{s_{\alpha }}(t)]\right|\to 0\]

holds outside ${N_{m}}$.

Setting $N={\textstyle\bigcup _{m}}{N_{m}}$, we have $\bar{\mathbb{P}}(N)=0$ (a countable union of negligible sets) and the pointwise convergence

\[ \left|\frac{1}{n}{\sum \limits_{k=1}^{n}}{s_{{\alpha _{k}}}}(t)-\bar{\mathbb{E}}[{s_{\alpha }}(t)]\right|\to 0\]

for $t\ge 0$, $\bar{\mathbb{P}}$-a.s.

It remains to show that the dominated convergence theorem can be applied $\bar{\mathbb{P}}$-a.s. For this purpose, let us define

which satisfies, by estimate (11),

For simplicity, we split the estimate into two parts as follows:

and, for some $\varepsilon >0$ to be chosen later, an explicit computation leads to

\[ |{f_{n}}(t)|\le C(M,\varepsilon ,\rho )\frac{1}{n}{\sum \limits_{k=1}^{n}}{\alpha _{k}^{-(1+\varepsilon )/2\rho }}\hspace{0.1667em}{t^{-(1+\varepsilon )/2}},\hspace{2em}t\ge 1,\]

where $C(M,\varepsilon ,\rho )$ is a constant depending only on the indicated quantities. The i.i.d. sequence $\{{\alpha _{k}^{-(1+\varepsilon )/2\rho }}\}$ satisfies the strong law of large numbers provided that its terms are integrable, i.e., under the condition

This is a nonempty interval thanks to the inequality in (8).

Therefore, outside an event of zero measure, we have, eventually in n,

\[ |{f_{n}}(t)|\le f(t):=C(M,\varepsilon ,\rho )\left(\bar{\mathbb{E}}[{\alpha ^{-(1+\varepsilon )/2\rho }}]+1\right)\hspace{0.1667em}{t^{-(1+\varepsilon )/2}},\hspace{2em}t\ge 1,\]

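and the function $f(t)$ is square integrable on ${\mathbb{R}_{+}}$ (for $t\ge 1$, $f{(t)^{2}}$ is a constant multiple of ${t^{-(1+\varepsilon )}}$, which is integrable at infinity since $\varepsilon >0$).

In order to conclude the proof, it remains to apply the dominated convergence theorem to the integrals in (20). The sequence of functions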

\[ {\left|\frac{1}{n}{\sum \limits_{k=1}^{n}}{s_{{\alpha _{k}}}}(t)-\bar{\mathbb{E}}[{s_{\alpha }}(t)]\right|^{2}}\]

converges pointwise to 0, $\bar{\mathbb{P}}$-a.s., and it is dominated by the integrable function

(compare with (14)). Then Lebesgue’s dominated convergence theorem implies that the limit in (20) holds. □

The next and final step is to prove that the sequence of processes ${\eta _{n}}$ converges weakly to η in the space $C([0,T])$ of continuous functions on $[0,T]$, for fixed $T>0$.

Theorem 7.

The sequence ${\eta _{n}}$ $\mathbb{P}$-weakly converges to the process η in the space $C([0,T])$, $\bar{\mathbb{P}}$-almost surely.

The proof is similar to that of Theorem 2: weak convergence is a consequence of the convergence of the finite-dimensional distributions together with the tightness of the laws. In what follows we only emphasise the differences in the computations.

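The convergence of the finite-dimensional distributions follows, as in Lemma 4, from the fact that all the processes involved are Gaussian, together with the ${L^{2}}$ convergence of ${\eta _{n}}(t)$ to $\eta (t)$ for every t established in Theorem 6: the ${L^{2}}$ convergence of each component implies the ${L^{2}}$ convergence, hence the convergence in law, of every vector $({\eta _{n}}({t_{1}}),\dots ,{\eta _{n}}({t_{m}}))$ to $(\eta ({t_{1}}),\dots ,\eta ({t_{m}}))$.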
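For centred Gaussian processes the finite-dimensional laws are determined by the covariance function, so this convergence can also be observed at the level of covariances. As an illustration only (exponential kernel $s_\alpha(u)=e^{-\alpha u}$, $\alpha\sim\Gamma(\mu,\lambda)$ with shape μ and scale λ, $\eta_n$ driven by the kernel $\frac1n\sum_k s_{\alpha_k}$ as in the expression for $\delta_n^2$; hypothetical function names), the sketch computes $\mathrm{Cov}(\eta_n(t+h),\eta_n(t))=\frac1{n^2}\sum_{j,k}e^{-\alpha_j h}/(\alpha_j+\alpha_k)$ in closed form, the limit $\int_0^\infty K(u+h)K(u)\,\mathrm{d}u$ with $K(u)=(1+\lambda u)^{-\mu}$ numerically, and the covariance matrix at finitely many times.

```python
import math


def emp_cov(alphas, h: float) -> float:
    """Cov(eta_n(t + h), eta_n(t)) for the kernel K_n(u) = (1/n) sum_k exp(-alpha_k u).

    Closed form: (1/n^2) sum_{j,k} exp(-alpha_j |h|) / (alpha_j + alpha_k).
    """
    n = len(alphas)
    return sum(math.exp(-aj * abs(h)) / (aj + ak) for aj in alphas for ak in alphas) / n**2


def limit_cov(h: float, mu: float, lam: float, n_grid: int = 20_000) -> float:
    """int_0^inf K(u + |h|) K(u) du with K(u) = (1 + lam*u)^(-mu); u = x/(1-x), midpoint rule."""
    dx = 1.0 / n_grid
    total = 0.0
    for i in range(n_grid):
        x = (i + 0.5) * dx
        u = x / (1.0 - x)
        total += ((1.0 + lam * (u + abs(h))) * (1.0 + lam * u)) ** (-mu) / (1.0 - x) ** 2
    return total * dx


def cov_matrix(cov, times):
    """Covariance matrix of (eta(t_1), ..., eta(t_m)) for a stationary covariance function."""
    return [[cov(abs(ti - tj)) for tj in times] for ti in times]


if __name__ == "__main__":
    lam, h = 1.0, 0.5
    exact = math.log(1.0 + lam * h) / (lam**2 * h)  # closed form of limit_cov for mu = 1
    for n in (5, 50, 500):
        # deterministic stand-in for a Gamma(1, lam) sample: midpoint quantiles
        alphas = [-lam * math.log(1.0 - (k + 0.5) / n) for k in range(n)]
        print(n, emp_cov(alphas, h), limit_cov(h, 1.0, lam), exact)
```

For $\mu=1$ the limit covariance at lag h has the closed form $\log(1+\lambda h)/(\lambda^2 h)$, which the usage example prints next to the two computed values.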

The next result is the analogue of Lemma 5 and deals with the tightness estimate corresponding to (17).

Proof.

Going through the proof of Lemma 5, we see that the key point is to estimate the quantity

\[\begin{aligned}{}\mathbb{E}[|\xi (t)-& \xi (s){|^{2}}]\\ {} & =\mathbb{E}{\left|{\int _{-\infty }^{s}}[{s_{\alpha }}(t-u)-{s_{\alpha }}(s-u)]\hspace{0.1667em}\mathrm{d}W(u)+{\int _{s}^{t}}{s_{\alpha }}(t-u)\hspace{0.1667em}\mathrm{d}W(u)\right|^{2}}\\ {} & \le 2{\int _{0}^{\infty }}{[{s_{\alpha }}(t-s+u)-{s_{\alpha }}(u)]^{2}}\hspace{0.1667em}\mathrm{d}u+2{\int _{0}^{t-s}}{[{s_{\alpha }}(u)]^{2}}\hspace{0.1667em}\mathrm{d}u\end{aligned}\]

and, in particular, the first term, whose integration interval is now ${\mathbb{R}_{+}}$. For this term we get

\[ {\int _{0}^{\infty }}{[{s_{\alpha }}(t-s+u)-{s_{\alpha }}(u)]^{2}}\hspace{0.1667em}\mathrm{d}u={\alpha ^{-1/\rho }}{\int _{0}^{\infty }}{\left|{\int _{v}^{v+{\alpha ^{1/\rho }}(t-s)}}{s^{\prime }_{1}}(x)\hspace{0.1667em}\mathrm{d}x\right|^{2}}\hspace{0.1667em}\mathrm{d}v.\]
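As a sanity check of this change of variables, take the exponential kernel $s_\alpha(u)=e^{-\alpha u}$, used here only as an illustration; it satisfies $s_\alpha(u)=s_1(\alpha u)$, i.e. the scaling behind the identity above with $\rho=1$. Writing $h=t-s$, both sides reduce to the same closed form:

\[ \int_0^\infty \big[e^{-\alpha(h+u)}-e^{-\alpha u}\big]^2\,\mathrm{d}u=(1-e^{-\alpha h})^2\int_0^\infty e^{-2\alpha u}\,\mathrm{d}u=\frac{(1-e^{-\alpha h})^2}{2\alpha},\]

\[ \alpha^{-1}\int_0^\infty\Big|\int_v^{v+\alpha h}(-e^{-x})\,\mathrm{d}x\Big|^2\,\mathrm{d}v=\alpha^{-1}(1-e^{-\alpha h})^2\int_0^\infty e^{-2v}\,\mathrm{d}v=\frac{(1-e^{-\alpha h})^2}{2\alpha}.\]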

We recall the bound

\[ \left|{s^{\prime }_{1}}(x)\right|\le {x^{\rho -1}}\frac{{M_{2}}}{1+{x^{\rho }}}\le \frac{{M_{3}}}{1+x},\]

and split the integral into two parts, for small v (say $v\in [0,1]$) and for large v (say $v\ge 1$). We thus bound the right-hand side of the previous identity by

\[\begin{array}{c}\displaystyle R={\alpha ^{-1/\rho }}{\int _{0}^{1}}{\left|{M_{3}}{\alpha ^{1/\rho }}(t-s)\right|^{2}}\hspace{0.1667em}\mathrm{d}v\\ {} \displaystyle +{M_{3}^{2}}{\alpha ^{-1/\rho }}{\int _{1}^{\infty }}{\left|\log (v+{\alpha ^{1/\rho }}(t-s))-\log (v)\right|^{2}}\hspace{0.1667em}\mathrm{d}v.\end{array}\]

Recalling that $\log (1+x)\le x$ for all $x>0$,

\[ R\le {M_{3}^{2}}{\alpha ^{1/\rho }}{(t-s)^{2}}+{M_{3}^{2}}{\alpha ^{-1/\rho }}{\int _{1}^{\infty }}{\left|\frac{{\alpha ^{1/\rho }}(t-s)}{v}\right|^{2}}\hspace{0.1667em}\mathrm{d}v\le 2{M_{3}^{2}}{\alpha ^{1/\rho }}{(t-s)^{2}}.\]
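The two-part estimate can be checked numerically. The sketch below (hypothetical names, with $M_3=1$ by default) evaluates R exactly as split above, computing $\int_1^\infty\log(1+H/v)^2\,\mathrm{d}v$ through the substitution $v=1/w$, where $H=\alpha^{1/\rho}(t-s)$, and compares R with $2M_3^2\alpha^{1/\rho}(t-s)^2$. The inequality $\log(1+x)\le x$ is what keeps the tail integral below $H^2$.

```python
import math


def midpoint(f, a: float, b: float, n: int = 20_000) -> float:
    """Composite midpoint rule for the integral of f over [a, b]."""
    h = (b - a) / n
    return h * sum(f(a + (i + 0.5) * h) for i in range(n))


def log_tail(H: float) -> float:
    """int_1^inf log(1 + H/v)^2 dv, rewritten with v = 1/w as int_0^1 (log(1 + H w)/w)^2 dw."""
    return midpoint(lambda w: (math.log1p(H * w) / w) ** 2, 0.0, 1.0)


def r_and_bound(alpha: float, rho: float, h: float, m3: float = 1.0):
    """Return (R, 2 m3^2 alpha^(1/rho) h^2), where h = t - s and R is the split estimate."""
    scale = alpha ** (1.0 / rho)
    H = scale * h                                 # alpha^(1/rho) (t - s)
    small_v = (m3 * H) ** 2 / scale               # v in [0, 1]: |s_1'| <= m3
    large_v = m3 ** 2 * log_tail(H) / scale       # v >= 1: |s_1'(x)| <= m3 / x
    return small_v + large_v, 2.0 * m3 ** 2 * scale * h ** 2


if __name__ == "__main__":
    for alpha, rho, h in ((0.5, 0.6, 0.2), (2.0, 0.8, 0.3), (5.0, 1.0, 1.5)):
        print(alpha, rho, h, r_and_bound(alpha, rho, h))
```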

In conclusion, since $t-s\le T$, we get $\mathbb{E}[|\xi (t)-\xi (s){|^{2}}]\le [4{M_{3}^{2}}{\alpha ^{1/\rho }}T+2{M^{2}}](t-s)$, which further implies $\mathbb{E}[|{\eta _{n}}(t)-{\eta _{n}}(s){|^{2}}]\le {K_{n}}(t-s)$ and, finally, we obtain

□
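Two steps above rest on the strong law of large numbers for powers of the $\alpha_k$: the dominating function in the proof of Theorem 6 needs $\bar{\mathbb E}[\alpha^{-(1+\varepsilon)/2\rho}]<\infty$, and the bound on $\mathbb E|\xi(t)-\xi(s)|^2$ involves a positive power of α, which enters $K_n$. If, as in the Introduction, $\alpha\sim\Gamma(\mu,\lambda)$ with shape μ and scale λ (an assumption of this sketch), then $\bar{\mathbb E}[\alpha^{q}]=\lambda^{q}\Gamma(\mu+q)/\Gamma(\mu)$ for $q>-\mu$ and $+\infty$ otherwise, so the admissible ε are $0<\varepsilon<2\rho\mu-1$, a nonempty range exactly when $\mu>\frac1{2\rho}$, consistent with the condition used earlier in this section. The Python sketch (hypothetical names) encodes these moments and compares them with empirical means.

```python
import math
import random


def gamma_moment(q: float, mu: float, lam: float) -> float:
    """E[alpha^q] for alpha ~ Gamma(shape=mu, scale=lam); infinite when q <= -mu."""
    if q <= -mu:
        return math.inf
    return lam ** q * math.gamma(mu + q) / math.gamma(mu)


def eps_upper(mu: float, rho: float) -> float:
    """E[alpha^(-(1+eps)/(2 rho))] < inf iff (1+eps)/(2 rho) < mu, i.e. eps < 2 rho mu - 1."""
    return 2.0 * rho * mu - 1.0


def empirical_moment(q: float, mu: float, lam: float, n: int, seed: int = 0) -> float:
    """(1/n) sum_k alpha_k^q for i.i.d. alpha_k ~ Gamma(shape=mu, scale=lam)."""
    rng = random.Random(seed)
    # random.Random.gammavariate(alpha, beta) uses shape alpha and scale beta
    return sum(rng.gammavariate(mu, lam) ** q for _ in range(n)) / n


if __name__ == "__main__":
    mu, lam, rho = 2.0, 0.5, 0.8
    print("admissible eps < ", eps_upper(mu, rho))
    for q in (-0.5, 1.25):
        print(q, empirical_moment(q, mu, lam, 100_000), gamma_moment(q, mu, lam))
```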