1 Introduction

We consider a functional linear error-in-variables model. Let $\{{a_{i}^{0}},\hspace{0.2778em}i\ge 1\}$ be a sequence of unobserved nonrandom n-dimensional vectors. The elements of the vectors are true explanatory variables or (in other terminology) true regressors. We observe m n-dimensional random vectors ${a_{1}},\dots ,{a_{m}}$ and m d-dimensional random vectors ${b_{1}},\dots ,{b_{m}}$. They are thought to be true vectors ${a_{i}^{0}}$ and ${X_{0}^{\top }}{a_{i}^{0}}$, respectively, plus additive errors:

(1)

\[ \left\{\begin{array}{l}{b_{i}}={X_{0}^{\top }}{a_{i}^{0}}+{\tilde{b}_{i}},\hspace{1em}\\{} {a_{i}}={a_{i}^{0}}+{\tilde{a}_{i}},\hspace{1em}\end{array}\right.\]

where ${\tilde{a}_{i}}$ and ${\tilde{b}_{i}}$ are random measurement errors in the regressor and in the response. A nonrandom matrix ${X_{0}}$ is estimated based on observations ${a_{i}}$, ${b_{i}}$, $i=1,\dots ,m$.

This problem is related to finding an approximate solution to an incompatible system of linear equations (an “overdetermined” system, because the number of equations exceeds the number of variables)

\[ AX\approx B,\]

where $A={[{a_{1}},\dots ,{a_{m}}]}^{\top }$ is an $m\times n$ matrix and $B={[{b_{1}},\dots ,{b_{m}}]}^{\top }$ is an $m\times d$ matrix. Here X is an unknown $n\times d$ matrix.

In the linear error-in-variables regression model (1), the Total Least Squares (TLS) estimator is widely used. It is a multivariate equivalent to the orthogonal regression estimator. We are looking for conditions that provide consistency or strong consistency of the estimator. It is assumed that the measurement errors ${\tilde{c}_{i}}=(\begin{array}{c}{\tilde{a}_{i}}\\{} {\tilde{b}_{i}}\end{array})$, $i=1,2,\dots $, are independent and have the same covariance matrix Σ, which may be singular. In particular, some of the regressors may be observed without errors. (If the matrix Σ is nonsingular, the proofs can be simplified.) An intercept can be introduced into (1) by augmenting the model and inserting a constant error-free regressor.

Sufficient conditions for consistency of the estimator are presented in Gleser [5], Gallo [4], Kukush and Van Huffel [10]. In [18], the consistency results are obtained under less restrictive conditions than in [10]. In particular, there is no requirement that

\[ \frac{{\lambda _{\min }^{2}}({A_{0}^{\top }}{A_{0}})}{{\lambda _{\max }}({A_{0}^{\top }}{A_{0}})}\to \infty \hspace{1em}\text{as}\hspace{1em}m\to \infty ,\]

where ${A_{0}}={[{a_{1}^{0}},\dots ,{a_{m}^{0}}]}^{\top }$ is the matrix A without measurement errors. Hereafter, ${\lambda _{\min }}$ and ${\lambda _{\max }}$ denote the minimum and maximum eigenvalues of a matrix if all the eigenvalues are real numbers. The matrix ${A_{0}^{\top }}{A_{0}^{}}$ is symmetric (and positive semidefinite). Hence, its eigenvalues are real (and nonnegative).

The model where some variables are explanatory and the others are responses is called explicit. The alternative is the implicit model, where all the variables are treated equally. In the implicit model, an n-dimensional linear subspace in ${\mathbb{R}}^{n+d}$ is fitted to an observed set of points. Some n-dimensional subspaces can be represented in the form $\{(a,b)\in {\mathbb{R}}^{n+d}:b={X}^{\top }a\}$ for some $n\times d$ matrix X; such subspaces are called generic. The other subspaces are called non-generic. The true points lie on a generic subspace $\{(a,b):b={X_{0}^{\top }}a\}$. A consistently estimated subspace must be generic with high probability. We state our results for the explicit model, but use the ideas of the implicit model in the definition of the estimator, as well as in proofs.

We allow errors in different variables to correlate. Our problem is a minor generalization of the mixed LS-TLS problem, which is studied in [20, Section 3.5]. In the latter problem, some explanatory variables are observed without errors; the other explanatory variables and all the response variables are observed with errors. The errors have the same variance and are uncorrelated. The basic LS model (where the explanatory variables are error-free, and the response variables are error-ridden) and the basic TLS model (where all the variables are observed with error, and the errors are uncorrelated) are marginal cases of the mixed LS-TLS problem. By a linear transformation of variables our model can be transformed into either a mixed LS-TLS or basic LS or basic TLS problem. (We do not handle the case where there are more error-free variables than explanatory variables.) Such a transformation does not always preserve the sets of generic and non-generic subspaces. The mixed LS-TLS problem can be transformed into the basic TLS problem as it is shown in [6].

The Weighted TLS and Structured TLS estimators are generalizations of the TLS estimator for the cases where the error covariance matrices do not coincide for different observations or where the errors for different observations are dependent; more precisely, the independence condition is replaced with a condition on the “structure of the errors”. The consistency of these estimators is proved in Kukush and Van Huffel [10] and Kukush et al. [9]. Relaxing the conditions for consistency of the Weighted TLS and Structured TLS estimators is an interesting topic for future research. For generalizations of the TLS problem, see the monograph [13] and the review [12].

In the present paper, for a multivariate regression model with multiple response variables we consider two versions of the TLS estimator. In these estimators, different norms of the weighted residual matrix are minimized. (These estimators coincide for the univariate regression model.) The common way to construct the estimator is to minimize the Frobenius norm. The estimator that minimizes the Frobenius norm also minimizes the spectral norm. Any estimator that minimizes the spectral norm is consistent under conditions of our consistency theorems (see Theorems 3.5–3.7 in Section 3). We also provide a sufficient condition for uniqueness of the estimator that minimizes the Frobenius norm.

In this paper, we provide complete proofs of the results on consistency of the TLS estimator stated in [18], and present all necessary auxiliary and complementary results. For the convenience of the reader we first present a sketch of the proof. Detailed proofs are postponed to the appendix. Moreover, the paper contains new results on the relation between the TLS estimator and the generalized eigenvalue problem.

The structure of the paper is as follows. In Section 2 we introduce the model and define the TLS estimator. The consistency theorems for different moment conditions on the errors and for different senses of consistency are stated in Section 3, and their proofs are sketched in Section 5. Section 4 states the existence and uniqueness of the TLS estimator. Auxiliary theoretical constructions and theorems are presented in Section 6. Section 7 explains the relationship between the TLS estimator and the generalized eigenvalue problem. The results in Section 7 are used in construction of the TLS estimator and in the proof of its uniqueness. Detailed proofs are moved to the appendix (Section 8).

Notations

First, we list the general notation. For $v\hspace{0.1667em}=\hspace{0.1667em}{({x_{k}})_{k=1}^{n}}$ being a vector, $\| v\| \hspace{0.1667em}=\hspace{0.1667em}\sqrt{{\sum _{k=1}^{n}}{x_{k}^{2}}}$ is the 2-norm of v.

For $M={({x_{i,j}})_{i=1,\hspace{0.1667em}\hspace{0.1667em}j=1}^{m\hspace{0.1667em}\hspace{0.1667em}n}}$ being an $m\times n$ matrix, $\| M\| ={\max _{v\ne 0}}\frac{\| Mv\| }{\| v\| }={\sigma _{\max }}(M)$ is the spectral norm of M; $\| M{\| _{F}}=\sqrt{{\sum _{i=1}^{m}}{\sum _{j=1}^{n}}{x_{i,j}^{2}}}$ is the Frobenius norm of M; ${\sigma _{\max }}(M)={\sigma _{1}}(M)\ge {\sigma _{2}}(M)\ge \cdots \ge {\sigma _{\min (m,n)}}(M)\ge 0$ are the singular values of M, arranged in descending order; $\operatorname{span}\langle M\rangle $ is the column space of M; $\operatorname{rk}M$ is the rank of M. For a square $n\times n$ matrix M, $\operatorname{def}M=n-\operatorname{rk}M$ is the rank deficiency of M; $\operatorname{tr}M={\sum _{i=1}^{n}}{x_{i,i}}$ is the trace of M; ${\chi _{M}}(\lambda )=\det (M-\lambda I)$ is the characteristic polynomial of M. If M is an $n\times n$ matrix with real eigenvalues (e.g., if M is Hermitian or if M admits a decomposition $M=AB$, where A and B are Hermitian matrices, and either A or B is positive semidefinite), ${\lambda _{\min }}(M)={\lambda _{1}}(M)\le {\lambda _{2}}(M)\le \cdots \le {\lambda _{n}}(M)={\lambda _{\max }}(M)$ are the eigenvalues of M arranged in ascending order.
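As a small numerical illustration of the spectral and Frobenius norms, here is a sketch in plain Python. It is restricted to $2\times 2$ matrices so that the largest singular value has a closed form; the function names are ours and are not part of the paper.

```python
import math

def frobenius_norm(M):
    """Frobenius norm: square root of the sum of squared entries."""
    return math.sqrt(sum(x * x for row in M for x in row))

def spectral_norm_2x2(M):
    """Spectral norm of a real 2x2 matrix, i.e. its largest singular value.

    The squared singular values are the eigenvalues of M^T M.  For a symmetric
    2x2 matrix [[p, q], [q, r]] the largest eigenvalue is
    (p + r)/2 + sqrt(((p - r)/2)^2 + q^2).
    """
    (a, b), (c, d) = M
    p = a * a + c * c          # (M^T M)[0][0]
    q = a * b + c * d          # (M^T M)[0][1]
    r = b * b + d * d          # (M^T M)[1][1]
    lam_max = (p + r) / 2 + math.sqrt(((p - r) / 2) ** 2 + q * q)
    return math.sqrt(lam_max)

M = [[3.0, 0.0], [0.0, 4.0]]
print(spectral_norm_2x2(M), frobenius_norm(M))   # 4.0 5.0
```

For every matrix the spectral norm does not exceed the Frobenius norm, which the diagonal example above makes visible.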

For ${V_{1}}$ and ${V_{2}}$ being linear subspaces of ${\mathbb{R}}^{n}$ of equal dimension $\dim {V_{1}}=\dim {V_{2}}$, $\| \sin \angle ({V_{1}},{V_{2}})\| =\| {P_{{V_{1}}}}-{P_{{V_{2}}}}\| =\| {P_{{V_{1}}}}(I-{P_{{V_{2}}}})\| $ is the greatest sine of the canonical angles between ${V_{1}}$ and ${V_{2}}$. See Section 6.2 for more general definitions.
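For one-dimensional subspaces the quantity $\| \sin \angle ({V_{1}},{V_{2}})\| $ is just the sine of the angle between two lines. The following plain-Python sketch computes it for lines spanned by nonzero vectors u and v; the function name is a hypothetical helper of ours.

```python
import math

def sin_angle_between_lines(u, v):
    """Sine of the angle between span<u> and span<v> for nonzero vectors u, v."""
    dot = sum(x * y for x, y in zip(u, v))
    nu2 = sum(x * x for x in u)
    nv2 = sum(y * y for y in v)
    cos2 = dot * dot / (nu2 * nv2)          # squared cosine of the angle
    return math.sqrt(max(0.0, 1.0 - cos2))  # clip tiny negative rounding errors

print(sin_angle_between_lines([1.0, 0.0], [0.0, 1.0]))   # orthogonal lines
print(sin_angle_between_lines([1.0, 0.0], [-2.0, 0.0]))  # the same line
```

The value does not change when u or v is rescaled or changes sign, which is why it suits objects, such as the columns of ${\widehat{X}_{\mathrm{ext}}}$, that are defined only up to a nonsingular transformation.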

Now we list the model-specific notation. The notation (except for the matrix Σ) comes from [9]. It is listed here only for reference; it is introduced elsewhere in this paper, in Sections 1 and 2.

n is the number of regressors, i.e., the number of explanatory variables for each observation; d is the number of response variables for each observation; m is the number of observations, i.e., the sample size.

While in consistency theorems m tends to ∞, all matrices in this list except Σ, ${X_{0}}$ and ${X_{\mathrm{ext}}^{0}}$ silently depend on m. For example, in equations “${\lim _{m\to \infty }}{\lambda _{\min }}({A_{0}^{\top }}{A_{0}})=+\infty $” and “$\widehat{X}\to {X_{0}}$ almost surely” the matrices ${A_{0}}$ and $\widehat{X}$ depend on m.

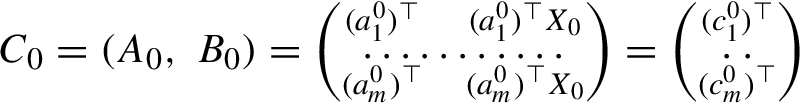

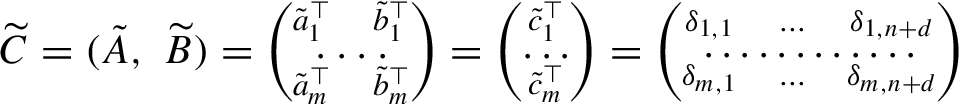

is the matrix of true variables. It is an $m\times (n+d)$ nonrandom matrix. The left-hand block ${A_{0}}$ of size $m\times n$ consists of true explanatory variables, and the right-hand block ${B_{0}}$ of size $m\times d$ consists of true response variables.

is the matrix of errors. It is an $m\times (n+d)$ random matrix.

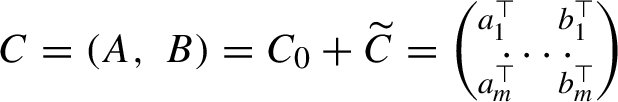

is the matrix of observations. It is an $m\times (n+d)$ random matrix.

Σ

is a covariance matrix of errors for one observation. For every i, it is assumed that $\mathbb{E}{\tilde{c}_{i}}=0$ and $\mathbb{E}{\tilde{c}_{i}}{\tilde{c}_{i}^{\top }}=\varSigma $. The matrix Σ is symmetric, positive semidefinite, nonrandom, and of size $(n+d)\times (n+d)$. It is assumed known when we construct the TLS estimator.

${X_{0}}$

is the matrix of true regression parameters. It is a nonrandom $n\times d$ matrix and is a parameter of interest.

${X_{\mathrm{ext}}^{0}}=\left(\genfrac{}{}{0.0pt}{}{{X_{0}}}{-I}\right)$

is an augmented matrix of regression coefficients. It is a nonrandom $(n+d)\times d$ matrix.

$\widehat{X}$

is the TLS estimator of the matrix ${X_{0}}$.

${\widehat{X}_{\mathrm{ext}}}$

is a matrix whose column space $\operatorname{span}\langle {\widehat{X}_{\mathrm{ext}}}\rangle $ is considered an estimator of the subspace $\operatorname{span}\langle {X_{\mathrm{ext}}^{0}}\rangle $. The matrix ${\widehat{X}_{\mathrm{ext}}}$ is of size $(n+d)\times d$. For fixed m and Σ, ${\widehat{X}_{\mathrm{ext}}}$ is a Borel measurable function of the matrix C.

2 The model and the estimator

2.1 Statistical model

It is assumed that the matrices ${A_{0}}$ and ${B_{0}}$ satisfy the relation

(2)

\[ \underset{m\times n}{{A_{0}}}\cdot \underset{n\times d}{{X_{0}}}=\underset{m\times d}{{B_{0}}}.\]

They are observed with measurement errors $\tilde{A}$ and $\widetilde{B}$, that is

\[ A={A_{0}}+\tilde{A},\hspace{2em}B={B_{0}}+\widetilde{B}.\]

The matrix ${X_{0}}$ is a parameter of interest.

We rewrite the relation in an implicit form. Let the $m\times (n+d)$ block matrices ${C_{0}},\widetilde{C},C\in {\mathbb{R}}^{m\times (n+d)}$ be constructed by binding “respective versions” of matrices A and B:

\[ {C_{0}}=[{A_{0}}\hspace{2.5pt}{B_{0}}],\hspace{2em}\widetilde{C}=[\tilde{A}\hspace{2.5pt}\widetilde{B}],\hspace{2em}C=[A\hspace{2.5pt}B].\]

Denote ${X_{\mathrm{ext}}^{0}}=(\begin{array}{c}{X_{0}}\\{} -{I_{d}}\end{array})$. Then

\[ {C_{0}}{X_{\mathrm{ext}}^{0}}=0.\]

The entries of the matrix $\widetilde{C}$ are denoted ${\delta _{ij}}$; the rows are ${\tilde{c}_{i}}$:

Throughout the paper the following three conditions are assumed to be true:

(4)

\[\begin{aligned}{}& \text{The rows}\hspace{2.5pt}{\tilde{c}_{i}}\hspace{2.5pt}\text{of the matrix}\hspace{2.5pt}\widetilde{C}\hspace{2.5pt}\text{are mutually independent random vectors.}\end{aligned}\]

(5)

\[\begin{aligned}{}& \mathbb{E}\widetilde{C}=0\text{, and}\hspace{2.5pt}\mathbb{E}{\tilde{c}_{i}^{}}{\tilde{c}_{i}^{\top }}:={(\mathbb{E}{\delta _{ij}}{\delta _{ik}})_{j=1,\hspace{0.1667em}\hspace{0.1667em}k=1}^{n+d\hspace{0.1667em}\hspace{0.1667em}n+d}}=\varSigma \hspace{2.5pt}\text{for all}\hspace{2.5pt}i=1,\dots ,m\text{.}\end{aligned}\]

Example 2.1 (simple univariate linear regression with intercept).

For $i=1,\dots ,m$

\[ \left\{\begin{array}{l}{x_{i}}={\xi _{i}}+{\delta _{i}};\hspace{1em}\\{} {y_{i}}={\beta _{0}}+{\beta _{1}}{\xi _{i}}+{\varepsilon _{i}},\hspace{1em}\end{array}\right.\]

where the measurement errors ${\delta _{i}}$, ${\varepsilon _{i}}$, $i=1,\dots ,m$ (all the $2m$ variables) are uncorrelated, $\mathbb{E}{\delta _{i}}=0$, $\mathbb{E}{\delta _{i}^{2}}={\sigma _{\delta }^{2}}$, $\mathbb{E}{\varepsilon _{i}}=0$, and $\mathbb{E}{\varepsilon _{i}^{2}}={\sigma _{\varepsilon }^{2}}$. A sequence $\{({x_{i}},{y_{i}}),\hspace{2.5pt}i=1,\dots ,m\}$ is observed. The parameters ${\beta _{0}}$ and ${\beta _{1}}$ are to be estimated.

This example is taken from [1, Section 1.1]. However, the notation in Example 2.1 differs from the notation used elsewhere in the paper. In our notation, ${a_{i}^{0}}={(1,{\xi _{i}})}^{\top }$, ${b_{i}^{0}}={\eta _{i}}$, ${a_{i}}={(1,{x_{i}})}^{\top }$, ${b_{i}}={y_{i}}$, ${\delta _{i,1}}=0$, ${\delta _{i,2}}={\delta _{i}}$, ${\delta _{i,3}}={\varepsilon _{i}}$, $\varSigma =\operatorname{diag}(0,{\sigma _{\delta }^{2}},{\sigma _{\varepsilon }^{2}})$, and ${X_{0}}={({\beta _{0}},{\beta _{1}})}^{\top }$.
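Example 2.1 can be tried numerically. The sketch below is ours and rests on an assumption not stated in this paper: that for $\varSigma =\operatorname{diag}(0,{\sigma _{\delta }^{2}},{\sigma _{\varepsilon }^{2}})$ the estimator reduces to the classical closed-form Deming regression (orthogonal regression when the two variances are equal). The function names, the design ${\xi _{i}}=10i/m$ and the parameter values are hypothetical.

```python
import math
import random

def tls_line_fit(xs, ys, var_ratio=1.0):
    """Fit y = b0 + b1 * x when both x and y carry measurement error.

    var_ratio is sigma_eps^2 / sigma_delta^2 (assumed known); var_ratio = 1
    gives orthogonal regression.  Uses the closed-form Deming slope.
    """
    m = len(xs)
    x_bar = sum(xs) / m
    y_bar = sum(ys) / m
    s_xx = sum((x - x_bar) ** 2 for x in xs) / m
    s_yy = sum((y - y_bar) ** 2 for y in ys) / m
    s_xy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / m
    if s_xy == 0:
        raise ValueError("sample covariance is zero; slope is not identified")
    diff = s_yy - var_ratio * s_xx
    b1 = (diff + math.sqrt(diff * diff + 4 * var_ratio * s_xy * s_xy)) / (2 * s_xy)
    b0 = y_bar - b1 * x_bar   # intercept from the error-free constant regressor
    return b0, b1

def simulate(m, beta0=1.0, beta1=2.0, sigma=1.0, seed=0):
    """Draw m observations from the model of Example 2.1, equal error variances."""
    rng = random.Random(seed)
    xi = [10.0 * i / m for i in range(m)]             # nonrandom true regressors
    xs = [t + rng.gauss(0.0, sigma) for t in xi]      # x_i = xi_i + delta_i
    ys = [beta0 + beta1 * t + rng.gauss(0.0, sigma) for t in xi]
    return xs, ys

xs, ys = simulate(5000)
print(tls_line_fit(xs, ys))   # should be close to (1.0, 2.0)
```

On data lying exactly on a line the fit recovers that line for any value of `var_ratio`. On noisy data the ordinary least squares slope would instead be attenuated towards zero, because the regressor is observed with error.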

Remark 2.1.

For some matrices Σ, (6) is satisfied for any $n\times d$ matrix ${X_{0}}$. If the matrix Σ is nonsingular, then condition (6) is satisfied. If the errors in the explanatory variables and in the response are uncorrelated, i.e., if the matrix Σ has a block-diagonal form

\[ \varSigma =\left(\begin{array}{c@{\hskip10.0pt}c}{\varSigma _{aa}}& 0\\{} 0& {\varSigma _{bb}}\end{array}\right)\]

(where ${\varSigma _{aa}}=\mathbb{E}{\tilde{a}_{i}}{\tilde{a}_{i}^{\top }}$ and ${\varSigma _{bb}}=\mathbb{E}{\tilde{b}_{i}}{\tilde{b}_{i}^{\top }}$) with nonsingular matrix ${\varSigma _{bb}}$, then condition (6) is satisfied. For example, in the basic mixed LS-TLS problem Σ is diagonal, ${\varSigma _{bb}}$ is nonsingular, and so (6) holds true. If the null-space of the matrix Σ (which equals $\operatorname{span}{\langle \varSigma \rangle }^{\perp }$ because Σ is symmetric) lies inside the subspace spanned by the first n (of $n+d$) standard basis vectors, then condition (6) is also satisfied. On the other hand, if $\operatorname{rk}\varSigma <d$, then condition (6) is not satisfied.

2.2 Total least squares (TLS) estimator

First, find the $m\times (n+d)$ matrix Δ for which the constrained minimum is attained:

(7)

\[ \left\{\begin{array}{l}\| \Delta \hspace{0.1667em}{({\varSigma }^{1/2})}^{\dagger }{\| _{F}}\to \min ;\hspace{1em}\\{} \Delta \hspace{0.1667em}(I-{P_{\varSigma }})=0;\hspace{1em}\\{} \operatorname{rk}(C-\Delta )\le n.\hspace{1em}\end{array}\right.\]

Hereafter ${\varSigma }^{\dagger }$ is the Moore–Penrose pseudoinverse matrix of the matrix Σ, ${P_{\varSigma }}$ is an orthogonal projector onto the column space of Σ, ${P_{\varSigma }}=\varSigma {\varSigma }^{\dagger }$.

Now we show that the minimum in (7) is attained. The constraint $\operatorname{rk}(C-\Delta )\le n$ is satisfied if and only if all the minors of $C-\Delta $ of order $n+1$ vanish. Thus the set of all Δ that satisfy the constraints (the constraint set) is defined by $\frac{m!(n+d)!}{(n+1){!}^{2}(m-n-1)!(d-1)!}+1$ algebraic equations; and so it is closed. The constraint set is nonempty almost surely because it contains $\widetilde{C}$. The functional $\| \Delta \hspace{0.1667em}{({\varSigma }^{1/2})}^{\dagger }{\| _{F}}$ is a pseudonorm on ${\mathbb{R}}^{m\times (n+d)}$, but it is a norm on the linear subspace $\{\Delta :\Delta \hspace{0.1667em}(I-{P_{\varSigma }})=0\}$, where it induces a natural subspace topology. The constraint set is closed on the subspace (with the norm), and whenever it is nonempty (i.e., almost surely), it has a minimal-norm element.
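The count of algebraic equations quoted above can be checked with a few lines of plain Python. The sketch assumes $m\ge n+1$ and $d\ge 1$; the function names are ours. It compares the number of minors of order $n+1$ of an $m\times (n+d)$ matrix, written with binomial coefficients, against the factorial expression from the text.

```python
from math import comb, factorial

def constraint_equation_count(m, n, d):
    """Number of minors of order n+1 of an m x (n+d) matrix, plus one."""
    # Choose n+1 rows out of m and n+1 columns out of n+d.
    return comb(m, n + 1) * comb(n + d, n + 1) + 1

def count_by_factorials(m, n, d):
    """The same count written with factorials, as in the text."""
    num = factorial(m) * factorial(n + d)
    den = factorial(n + 1) ** 2 * factorial(m - n - 1) * factorial(d - 1)
    return num // den + 1

print(constraint_equation_count(5, 2, 1), count_by_factorials(5, 2, 1))   # 11 11
```

The count grows quickly with m, but only its finiteness matters for the closedness argument.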

Notice that under condition (6) the constraint set is always nonempty, and not just almost surely. This follows from Proposition 7.9.

For the matrix Δ that is a solution to minimization problem (7), consider the row space $\operatorname{span}\langle {(C-\Delta )}^{\top }\rangle $ of the matrix $C-\Delta $. Its dimension does not exceed n. Its orthogonal basis can be completed to an orthogonal basis in ${\mathbb{R}}^{n+d}$, and the complement consists of $n+d-\operatorname{rk}(C-\Delta )\ge d$ vectors. Choose d linearly independent vectors from the complement, and bind them (as column-vectors) into an $(n+d)\times d$ matrix ${\widehat{X}_{\mathrm{ext}}}$. The matrix ${\widehat{X}_{\mathrm{ext}}}$ satisfies the equation

\[ (C-\Delta )\hspace{0.1667em}{\widehat{X}_{\mathrm{ext}}}=0.\]

If the lower $d\times d$ block of the matrix ${\widehat{X}_{\mathrm{ext}}}$ is a nonsingular matrix, by linear transformation of columns (i.e., by right-multiplying by some nonsingular matrix) the matrix ${\widehat{X}_{\mathrm{ext}}}$ can be transformed to the form

\[ \left(\begin{array}{c}\widehat{X}\\{} -I\end{array}\right),\]

where I is the $d\times d$ identity matrix. The matrix $\widehat{X}$ satisfies the equation

(9)

\[ (C-\Delta )\left(\begin{array}{c}\widehat{X}\\{} -I\end{array}\right)=0.\]

(Otherwise, if the lower block of the matrix ${\widehat{X}_{\mathrm{ext}}}$ is singular, then our estimation fails. Note that whether the lower block of the matrix ${\widehat{X}_{\mathrm{ext}}}$ is singular might depend not only on the observations C, but also on the choice of the matrix Δ where the minimum in (7) is attained and of the d vectors that make up the matrix ${\widehat{X}_{\mathrm{ext}}}$. We will show that the lower block of the matrix ${\widehat{X}_{\mathrm{ext}}}$ is nonsingular with high probability regardless of the choice of Δ and ${\widehat{X}_{\mathrm{ext}}}$.)
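In the univariate case $d=1$ the matrix ${\widehat{X}_{\mathrm{ext}}}$ is a single column, its lower block is its last component, and the normalization step amounts to one division. A minimal sketch in plain Python follows; the function name and the numerical tolerance `tol` are our assumptions.

```python
def normalize_extended_vector(x_ext, tol=1e-12):
    """For d = 1, rescale x_ext = (u_1, ..., u_n, w) to the form (X_hat, -1).

    Returns X_hat as a list of length n, or None when the last component is
    (numerically) zero, i.e. when the fitted subspace is non-generic.
    """
    *upper, lower = x_ext
    if abs(lower) <= tol:
        return None          # the lower 1x1 block is singular: estimation fails
    return [-u / lower for u in upper]

print(normalize_extended_vector([2.0, 4.0, -2.0]))   # [1.0, 2.0]
print(normalize_extended_vector([1.0, 0.0, 0.0]))    # None
```

Multiplying `x_ext` by any nonzero scalar leaves the result unchanged, in line with the fact that only the column space of ${\widehat{X}_{\mathrm{ext}}}$ matters.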

Columns of the matrix ${\widehat{X}_{\mathrm{ext}}}$ should span the eigenspace (generalized invariant space) of the matrix pencil $\langle {C}^{\top }C,\varSigma \rangle $ which corresponds to the d smallest generalized eigenvalues. That the columns of the matrix ${\widehat{X}_{\mathrm{ext}}}$ span the generalized invariant space corresponding to finite generalized eigenvalues is written in the matrix notation as follows:

Possible problems that may arise in the course of solving the minimization problem (7) are discussed in [18]. We should mention that our two-step definition $\text{(7)}$ & $\text{(9)}$ of the TLS estimator is slightly different from the conventional definition in [20, Sections 2.3.2 and 3.2] or in [10]. In these papers, the problem from which the estimator $\widehat{X}$ is found is equivalent to the following:

(10)

\[ \left\{\begin{array}{l}\| \Delta \hspace{0.1667em}{({\varSigma }^{1/2})}^{\dagger }{\| _{F}}\to \min ;\hspace{1em}\\{} \Delta \hspace{0.1667em}(I-{P_{\varSigma }})=0;\hspace{1em}\\{} (C-\Delta )\left(\begin{array}{c}\widehat{X}\\{} -I\end{array}\right)=0,\hspace{1em}\end{array}\right.\]

where the optimization is performed for Δ and $\widehat{X}$ that satisfy the constraints in (10). If our estimation defined with (7) and (9) succeeds, then the minimum values in (7) and (10) coincide, and the minimum in (10) is attained for $(\Delta ,\widehat{X})$ that is the solution to (7) & (9). Conversely, if our estimation succeeds for at least one choice of Δ and ${\widehat{X}_{\mathrm{ext}}}$, then all the solutions to (10) can be obtained with different choices of Δ and ${\widehat{X}_{\mathrm{ext}}}$. However, strange things may happen if our estimation always fails.

Besides (7), consider the optimization problem

(11)

\[ \left\{\begin{array}{l}{\lambda _{\max }}(\Delta {\varSigma }^{\dagger }{\Delta }^{\top })\to \min ;\hspace{1em}\\{} \Delta \hspace{0.1667em}(I-{P_{\varSigma }})=0;\hspace{1em}\\{} \operatorname{rk}(C-\Delta )\le n.\hspace{1em}\end{array}\right.\]

It will be shown that every Δ that minimizes (7) also minimizes (11).

We can construct the optimization problem that generalizes both (7) and (11). Let $\| M{\| _{\mathrm{U}}}$ be a unitarily invariant norm on $m\times (n+d)$ matrices. Consider the optimization problem

(12)

\[ \left\{\begin{array}{l}\| \Delta \hspace{0.1667em}{({\varSigma }^{1/2})}^{\dagger }{\| _{\mathrm{U}}}\to \min ;\hspace{1em}\\{} \Delta \hspace{0.1667em}(I-{P_{\varSigma }})=0;\hspace{1em}\\{} \operatorname{rk}(C-\Delta )\le n.\hspace{1em}\end{array}\right.\]

Then every Δ that minimizes (7) also minimizes (12), and every Δ that minimizes (12) also minimizes (11). If $\| M{\| _{\mathrm{U}}}$ is the Frobenius norm, then optimization problems (7) and (12) coincide, and if $\| M{\| _{\mathrm{U}}}$ is the spectral norm, then optimization problems (11) and (12) coincide.

3 Known consistency results

In this section we briefly review known consistency results. One of the conditions for the consistency of the TLS estimator is the convergence of $\frac{1}{m}{A_{0}^{\top }}{A_{0}}$ to a nonsingular matrix. It is required, for example, in [5]. The condition is relaxed in the paper by Gallo [4].

Theorem 3.1 (Gallo [4], Theorem 2).

Let $d=1$,

\[\begin{aligned}{}{m}^{-1/2}{\lambda _{\min }}\big({A_{0}^{\top }}{A_{0}}\big)& \to \infty \hspace{1em}\textit{as}\hspace{1em}m\to \infty ,\\{} \frac{{\lambda _{\min }^{2}}({A_{0}^{\top }}{A_{0}})}{{\lambda _{\max }}({A_{0}^{\top }}{A_{0}})}& \to \infty \hspace{1em}\textit{as}\hspace{1em}m\to \infty ,\end{aligned}\]

and the measurement errors ${\tilde{c}_{i}}$ are identically distributed, with finite fourth moment $\mathbb{E}\| {\tilde{c}_{i}}{\| }^{4}<\infty $. Then $\widehat{X}\stackrel{\mathrm{P}}{\longrightarrow }{X_{0}}$, $m\to \infty $.

The theorem can be generalized to multivariate regression. The condition that the errors in different observations have the same distribution can be dropped. Instead, Kukush and Van Huffel [10] assume that the fourth moments of the error distributions are bounded.

Theorem 3.2 (Kukush and Van Huffel [10], Theorem 4a).

Let

\[\begin{aligned}{}\underset{\begin{array}{c}i\ge 1\\{} j=1,\dots ,n+d\end{array}}{\sup }\mathbb{E}|{\delta _{ij}}{|}^{4}& <\infty ,\\{} {m}^{-1/2}{\lambda _{\min }}\big({A_{0}^{\top }}{A_{0}}\big)& \to \infty \hspace{1em}\textit{as}\hspace{1em}m\to \infty ,\\{} \frac{{\lambda _{\min }^{2}}({A_{0}^{\top }}{A_{0}})}{{\lambda _{\max }}({A_{0}^{\top }}{A_{0}})}& \to \infty \hspace{1em}\textit{as}\hspace{1em}m\to \infty .\end{aligned}\]

Then $\widehat{X}\stackrel{\mathrm{P}}{\longrightarrow }{X_{0}}$ as $m\to \infty $.

Here is the strong consistency theorem:

Theorem 3.3 (Kukush and Van Huffel [10], Theorem 4b).

Let for some $r\ge 2$ and ${m_{0}}\ge 1$,

\[\begin{aligned}{}\underset{\begin{array}{c}i\ge 1\\{} j=1,\dots ,n+d\end{array}}{\sup }\mathbb{E}|{\delta _{ij}}{|}^{2r}& <\infty ,\\{} {\sum \limits_{m={m_{0}}}^{\infty }}{\bigg(\frac{\sqrt{m}}{{\lambda _{\min }}({A_{0}^{\top }}{A_{0}})}\bigg)}^{r}& <\infty ,\\{} {\sum \limits_{m={m_{0}}}^{\infty }}{\bigg(\frac{{\lambda _{\max }}({A_{0}^{\top }}{A_{0}})}{{\lambda _{\min }^{2}}({A_{0}^{\top }}{A_{0}})}\bigg)}^{r}& <\infty .\end{aligned}\]

Then $\widehat{X}\to {X_{0}}$ as $m\to \infty $, almost surely.

In the following consistency theorem the moment condition imposed on the errors is relaxed.

Theorem 3.4 (Kukush and Van Huffel [10], Theorem 5b).

Let for some r, $1\le r<2$,

\[\begin{aligned}{}\underset{\begin{array}{c}i\ge 1\\{} j=1,\dots ,n+d\end{array}}{\sup }\mathbb{E}|{\delta _{ij}}{|}^{2r}& <\infty ,\\{} {m}^{-1/r}{\lambda _{\min }}\big({A_{0}^{\top }}{A_{0}}\big)& \to \infty \hspace{1em}\textit{as}\hspace{1em}m\to \infty ,\\{} \frac{{\lambda _{\min }^{2}}({A_{0}^{\top }}{A_{0}})}{{\lambda _{\max }}({A_{0}^{\top }}{A_{0}})}& \to \infty \hspace{1em}\textit{as}\hspace{1em}m\to \infty .\end{aligned}\]

Then $\widehat{X}\stackrel{\mathrm{P}}{\longrightarrow }{X_{0}}$ as $m\to \infty $.

Generalizations of Theorems 3.2, 3.3, and 3.4 are obtained in [18]. An essential improvement is achieved. Namely, it is not required that ${\lambda _{\min }^{-2}}({A_{0}^{\top }}{A_{0}}){\lambda _{\max }}({A_{0}^{\top }}{A_{0}})$ converge to 0.

Theorem 3.5 (Shklyar [18], Theorem 4.1, generalization of Theorems 3.2 and 3.4).

Let for some r, $1\le r\le 2$,

\[\begin{aligned}{}\underset{\begin{array}{c}i\ge 1\\{} j=1,\dots ,n+d\end{array}}{\sup }\mathbb{E}|{\delta _{ij}}{|}^{2r}& <\infty ,\\{} {m}^{-1/r}{\lambda _{\min }}\big({A_{0}^{\top }}{A_{0}}\big)& \to \infty \hspace{1em}\textit{as}\hspace{1em}m\to \infty .\end{aligned}\]

Then $\widehat{X}\stackrel{\mathrm{P}}{\longrightarrow }{X_{0}}$ as $m\to \infty $.
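To see what is gained, suppose (purely for illustration; this design is our assumption and is not taken from [18]) that $n=2$ and that the true regressors are such that ${\lambda _{\min }}({A_{0}^{\top }}{A_{0}})={m}^{3/4}$ and ${\lambda _{\max }}({A_{0}^{\top }}{A_{0}})={m}^{2}$. Then

\[ {m}^{-1/2}{\lambda _{\min }}\big({A_{0}^{\top }}{A_{0}}\big)={m}^{1/4}\to \infty ,\hspace{2em}\frac{{\lambda _{\min }^{2}}({A_{0}^{\top }}{A_{0}})}{{\lambda _{\max }}({A_{0}^{\top }}{A_{0}})}=\frac{{m}^{3/2}}{{m}^{2}}={m}^{-1/2}\to 0\hspace{1em}\text{as}\hspace{1em}m\to \infty .\]

Hence, if the fourth moments of the errors are bounded, the conditions of Theorem 3.5 hold with $r=2$, whereas the last condition of Theorem 3.2 fails.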

Theorem 3.6 (Shklyar [18], Theorem 4.2, generalization of Theorem 3.3).

Let for some $r\ge 2$ and ${m_{0}}\ge 1$,

\[\begin{aligned}{}\underset{\begin{array}{c}i\ge 1\\{} j=1,\dots ,n+d\end{array}}{\sup }\mathbb{E}|{\delta _{ij}}{|}^{2r}& <\infty ,\\{} {\sum \limits_{m={m_{0}}}^{\infty }}{\bigg(\frac{\sqrt{m}}{{\lambda _{\min }}({A_{0}^{\top }}{A_{0}})}\bigg)}^{r}& <\infty .\end{aligned}\]

Then $\widehat{X}\to {X_{0}}$ as $m\to \infty $, almost surely.

In the next theorem strong consistency is obtained for $r<2$.

Theorem 3.7 (Shklyar [18], Theorem 4.3).

Let for some r ($1\le r\le 2$) and ${m_{0}}\ge 1$,

\[ \underset{\begin{array}{c}i\ge 1\\{} j=1,\dots ,n+d\end{array}}{\sup }\mathbb{E}|{\delta _{ij}}{|}^{2r}<\infty ,\hspace{2em}{\sum \limits_{m={m_{0}}}^{\infty }}\frac{1}{{\lambda _{\min }^{r}}({A_{0}^{\top }}{A_{0}})}<\infty .\]

Then $\widehat{X}\to {X_{0}}$ as $m\to \infty $, almost surely.

4 Existence and uniqueness of the estimator

When we speak of a sequence $\{{A_{m}},\hspace{0.2778em}m\ge 1\}$ of random events parametrized by the sample size m, we say that a random event occurs with high probability if the probability of the event tends to 1 as $m\to \infty $, and we say that a random event occurs eventually if almost surely there exists ${m_{0}}$ such that the random event occurs whenever $m>{m_{0}}$, that is $\mathbb{P}(\underset{m\to \infty }{\liminf }{A_{m}})=1$. (In this definition, ${A_{m}}$ are random events. Elsewhere in this paper, ${A_{m}}$ are matrices.)

Theorem 4.1.

Theorem 4.2.

-

2. Under the conditions of Theorem 3.5, the following random event occurs with high probability: for any Δ that is a solution to (11), equation (9) has a solution $\widehat{X}$. (Equation (9) might have multiple solutions.) The solution is a consistent estimator of ${X_{0}}$, i.e., $\widehat{X}\to {X_{0}}$ in probability.

5 Sketch of the proof of Theorems 3.5–3.7

Denote

Under the conditions of any of the consistency theorems in Section 3 there is a convergence ${\lambda _{\min }}({A_{0}^{\top }}{A_{0}^{}})\to \infty $. Hence the matrix N is nonsingular for m large enough. The matrix N is used as the denominator in the law of large numbers. Also, it is used for rescaling the problem: the condition number of ${N}^{-1/2}{C_{0}^{\top }}{C_{0}^{}}{N}^{-1/2}$ equals 2 at most.

The proofs of consistency theorems differ one from another, but they have the same structure and common parts. First, the law of large numbers

holds either in probability or almost surely, which depends on the theorem being proved. The proof varies for different theorems.

The inequalities (54) and (57) imply that whenever convergence (13) occurs, the sine of the angle between the vectors ${\widehat{X}_{\mathrm{ext}}}$ and ${X_{\mathrm{ext}}^{0}}$ (in the univariate regression) or the largest of the sines of the canonical angles between the column spans of the matrices ${\widehat{X}_{\mathrm{ext}}}$ and ${X_{\mathrm{ext}}^{0}}$ tends to 0 as the sample size m increases:

(14)

\[ \big\| \sin \angle ({\widehat{X}_{\mathrm{ext}}},{X_{\mathrm{ext}}^{0}})\big\| \le \big\| \sin \angle \big({N}^{1/2}{\widehat{X}_{\mathrm{ext}}},{N}^{1/2}{X_{\mathrm{ext}}^{0}}\big)\big\| \to 0.\]

To prove (14), we use some algebra, the fact that ${X_{\mathrm{ext}}^{0}}$ (in the univariate model) or the columns of ${X_{\mathrm{ext}}^{0}}$ (in the multivariate model) are the minimum-eigenvalue eigenvectors of matrix N (see ineq. (52)), and eigenvector perturbation theorems (Lemma 6.5 or Lemma 6.6).

Then, by Theorem 8.3 we conclude that

6 Relevant classical results

We use some classical results. However, we state them in a form convenient for our study and provide the proof for some of them.

6.1 Generalized eigenvectors and eigenvalues

In this paper we deal with real matrices. Most theorems in this section can be generalized for matrices with complex entries by requiring that matrices be Hermitian rather than symmetric, and by complex conjugating where it is necessary.

Theorem 6.1 (Simultaneous diagonalization of a definite matrix pair).

Let A and B be $n\times n$ symmetric matrices such that for some α and β the matrix $\alpha A+\beta B$ is positive definite. Then there exist a nonsingular matrix T and diagonal matrices Λ and M such that

If in the decomposition $T=[{u_{1}},{u_{2}},\dots ,{u_{n}}]$, $\varLambda =\operatorname{diag}({\lambda _{1}},\dots ,{\lambda _{n}})$, $\mathrm{M}=\operatorname{diag}({\mu _{1}},\dots ,{\mu _{n}})$, then the numbers ${\lambda _{i}}/{\mu _{i}}\in \mathbb{R}\cup \{\infty \}$ are called generalized eigenvalues, and the columns ${u_{i}}$ of the matrix T are called the right generalized eigenvectors of the matrix pencil $\langle A,B\rangle $ because the following relation holds true:

Theorem 6.1 is well known; see Theorem IV.3.5 in [19, page 318]. The conditions of Theorem 6.1 can be changed as follows:

In Theorem 6.1 ${\lambda _{i}}$ and ${\mu _{i}}$ cannot be equal to 0 for the same i, while in Theorem 6.2 they can. On the other hand, in Theorem 6.1 ${\lambda _{i}}$ and ${\mu _{i}}$ can be any real numbers, while in Theorem 6.2 ${\lambda _{i}}\ge 0$ and ${\mu _{i}}\ge 0$. Theorem 6.2 is proved in [15].

Remark 6.2-1.

If the matrices A and B are symmetric and positive semidefinite, then

where

is the determinantal rank of the matrix pencil $\langle A,B\rangle $. (For square $n\times n$ matrices A and B, the determinantal rank characterizes if the matrix pencil is regular or singular. The matrix pencil $\langle A,B\rangle $ is regular if $\operatorname{rk}\langle A,B\rangle =n$, and singular if $\operatorname{rk}\langle A,B\rangle <n$.)
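For instance (a toy example of ours), read the determinantal rank as ${\max _{k}}\operatorname{rk}(A+kB)$, the form used in the argument that follows, and let $n=3$, $A=\operatorname{diag}(1,0,0)$ and $B=\operatorname{diag}(0,1,0)$. Both matrices are symmetric and positive semidefinite, and

\[ \operatorname{rk}(A+kB)=\operatorname{rk}\operatorname{diag}(1,k,0)=\left\{\begin{array}{ll}2\hspace{1em}& \text{if}\hspace{2.5pt}k\ne 0,\\{} 1\hspace{1em}& \text{if}\hspace{2.5pt}k=0,\end{array}\right.\]

so that ${\max _{k}}\operatorname{rk}(A+kB)=2=\operatorname{rk}(A+B)<n$, and the pencil $\langle A,B\rangle $ is singular.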

The inequality $\operatorname{rk}\langle A,B\rangle \ge \operatorname{rk}(A+B)$ follows from the definition of the determinantal rank. For all $k\in \mathbb{R}$ and for all vectors x such that $(A+B)x=0$ we have ${x}^{\top }Ax+{x}^{\top }Bx=0$, and because of positive semidefiniteness of matrices A and B, ${x}^{\top }Ax\ge 0$ and ${x}^{\top }Bx\ge 0$. Thus, ${x}^{\top }Ax={x}^{\top }Bx=0$. Again, due to positive semidefiniteness of A and B, $Ax=Bx=0$ and $(A+kB)x=0$. Thus, for all $k\in \mathbb{R}$

\[\begin{aligned}{}\big\{x:(A+B)x=0\big\}& \subset \big\{x:(A+kB)x=0\big\},\\{} \operatorname{rk}(A+B)& \ge \operatorname{rk}(A+kB),\\{} \operatorname{rk}\langle A,B\rangle =\underset{k}{\max }\operatorname{rk}(A+kB)& \le \operatorname{rk}(A+B),\end{aligned}\]

and (17) is proved.

Remark 6.2-2.

Let A and B be positive semidefinite matrices of the same size such that $\operatorname{rk}(A+B)=\operatorname{rk}(B)$. The representation (16) might not be unique. But there exists a representation (16) such that

\[\begin{aligned}{}{\lambda _{i}}& ={\mu _{i}}=0\hspace{1em}\text{if}\hspace{1em}i=1,\dots ,\operatorname{def}(B),\\{} {\mu _{i}}& >0\hspace{1em}\text{if}\hspace{1em}i=\operatorname{def}(B)+1,\dots ,n,\\{} T& =\big[\hspace{-0.1667em}\underset{n\times \operatorname{def}(B)}{{T_{1}}}\hspace{0.1667em}\hspace{0.1667em}\underset{n\times \operatorname{rk}(B)}{{T_{2}}}\hspace{-0.1667em}\big],\\{} {T_{1}^{\top }}{T_{2}^{}}& =0.\end{aligned}\]

(Here if the matrix B is nonsingular, then ${T_{1}}$ is an empty $n\times 0$ matrix; if $B=0$, then ${T_{2}}$ is an $n\times 0$ matrix. In these marginal cases, ${T_{1}^{\top }}{T_{2}}$ is an empty matrix and is considered to be a zero matrix.) The desired representation can be obtained from [2] for $S=0$ (in de Leeuw’s notation). This representation is constructed as follows. Let the columns of the matrix ${T_{1}}$ form an orthonormal basis of $\operatorname{Ker}(B)=\{v:Bv=0\}$. There exists an $n\times \operatorname{rk}(B)$ matrix F such that $B=F{F}^{\top }$. Let the columns of the matrix L be orthonormal eigenvectors of the matrix ${F}^{\dagger }A{({F}^{\dagger })}^{\top }$. Then set ${T_{2}}={({F}^{\dagger })}^{\top }L$. Note that the notation S, F and L is borrowed from [2], and is used only once. Elsewhere in the paper, the matrix F will have a different meaning.

Proposition 6.3.

Proof.

Let us verify the Moore–Penrose conditions:

and the fact that the matrices ${({T}^{-1})}^{\top }\text{M}{T}^{-1}\hspace{0.1667em}T{\text{M}}^{\dagger }{T}^{\top }$ and $T{\text{M}}^{\dagger }{T}^{\top }\times {({T}^{-1})}^{\top }\text{M}{T}^{-1}$ are symmetric. The equalities $\text{(18)}$ and $\text{(19)}$ can be verified directly; and the symmetry properties can be reduced to the equality

with ${P_{\mathrm{M}}}=\mathrm{M}{\mathrm{M}}^{\dagger }=\operatorname{diag}(\underset{\operatorname{def}(B)}{\underbrace{0,\dots ,0}},\underset{\operatorname{rk}(B)}{\underbrace{1,\dots ,1}})$.

Since ${T_{1}^{\top }}{T_{2}^{}}=0$, ${T}^{\top }{T}^{}$ is a block diagonal matrix. Hence ${P_{\mathrm{M}}}{T}^{\top }T={T}^{\top }{T}^{}{P_{\mathrm{M}}}$, whence (20) follows. □

6.2 Angle between two linear subspaces

Let ${V_{1}}$ and ${V_{2}}$ be linear subspaces of ${\mathbb{R}}^{n}$, with $\dim {V_{1}}={k_{1}}\le \dim {V_{2}}={k_{2}}$. Then there exists an orthogonal $n\times n$ matrix U such that

(21)

\[\begin{aligned}{}{V_{1}}& =\operatorname{span}\left\langle U\left(\begin{array}{c}{\operatorname{diag}_{{k_{2}}\times {k_{1}}}}(\cos {\theta _{i}},\hspace{0.2778em}i=1,\dots ,{k_{1}})\\{} {\operatorname{diag}_{(n-{k_{2}})\times {k_{1}}}}(\sin {\theta _{i}},\hspace{0.2778em}i=1,\dots ,\min (n-{k_{2}},\hspace{0.2222em}{k_{1}}))\end{array}\right)\right\rangle ,\end{aligned}\]

Here rectangular diagonal matrices are allowed. If in (21) there are more cosines than sines (i.e., if ${k_{2}}+{k_{1}}>n$), then the excessive cosines should be equal to 1, so the columns of the bidiagonal matrix in (21) are unit vectors (which are orthogonal to each other). Here the columns of U are the vectors of some convenient “new” basis in ${\mathbb{R}}^{n}$, so U is a transitional matrix from the standard basis to the “new” basis; the columns of matrix products in $\operatorname{span}\langle \cdots \hspace{0.1667em}\rangle $ in (21) and (22) are the vectors of the bases of subspaces ${V_{1}}$ and ${V_{2}}$; the bidiagonal matrix in (21) and the diagonal matrix in (22) are the transitional matrices from the “new” basis in ${\mathbb{R}}^{n}$ to the bases in ${V_{1}}$ and ${V_{2}}$, respectively.

The angles ${\theta _{k}}$ are called the canonical angles between ${V_{1}}$ and ${V_{2}}$. They can be selected so that $0\le {\theta _{k}}\le \frac{1}{2}\pi $ (to achieve this, we might have to reverse some vectors of the bases).

Denote ${P_{{V_{1}}}}$ the matrix of the orthogonal projector onto ${V_{1}}$. The singular values of the matrix ${P_{{V_{1}}}}(I-{P_{{V_{2}}}})$ are equal to $\sin {\theta _{k}}$ ($k=1,\dots ,{k_{1}}$); besides them, there is a singular value 0 of multiplicity $n-{k_{1}}$.

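Denote the greatest of the sines of the canonical angles by

(23)

\[ \big\| \sin \angle ({V_{1}},{V_{2}})\big\| =\underset{k=1,\dots ,{k_{1}}}{\max }\sin {\theta _{k}}=\big\| {P_{{V_{1}}}}(I-{P_{{V_{2}}}})\big\| .\]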

If $\dim {V_{1}}=1$, ${V_{1}}=\operatorname{span}\langle v\rangle $, then

\[ \sin \angle (v,{V_{2}})=\bigg\| (I-{P_{{V_{2}}}})\frac{v}{\| v\| }\bigg\| =\operatorname{dist}\bigg(\frac{1}{\| v\| }v,{V_{2}}\bigg).\]

This can be generalized for $\dim {V_{1}}\ge 1$:

\[ \big\| \sin \angle ({V_{1}},{V_{2}})\big\| =\underset{v\in {V_{1}}\setminus \{0\}}{\max }\bigg\| (I-{P_{{V_{2}}}})\frac{v}{\| v\| }\bigg\| ,\]

whence

(24)

\[\begin{aligned}{}{\big\| \sin \angle ({V_{1}},{V_{2}})\big\| }^{2}& =\underset{v\in {V_{1}}\setminus \{0\}}{\max }\frac{{v}^{\top }(I-{P_{{V_{2}}}})v}{\| v{\| }^{2}},\\{} 1-{\big\| \sin \angle ({V_{1}},{V_{2}})\big\| }^{2}& =\underset{v\in {V_{1}}\setminus \{0\}}{\min }\frac{{v}^{\top }{P_{{V_{2}}}}v}{\| v{\| }^{2}}.\end{aligned}\]

If $\dim {V_{1}}=\dim {V_{2}}$, then $\| \sin \angle ({V_{1}},{V_{2}})\| =\| {P_{{V_{1}}}}-{P_{{V_{2}}}}\| $, and therefore $\| \sin \angle ({V_{1}},{V_{2}})\| =\| \sin \angle ({V_{2}},{V_{1}})\| $. Otherwise the right-hand side of (23) may change if ${V_{1}}$ and ${V_{2}}$ are swapped (in particular, if $\dim {V_{1}}<\dim {V_{2}}$, then $\| {P_{{V_{1}}}}(I-{P_{{V_{2}}}})\| $ may or may not be equal to 1, but always $\| {P_{{V_{2}}}}(I-{P_{{V_{1}}}})\| =1$; see the proof of Lemma 8.2 in the appendix).

We will often omit “span” in arguments of sine. Thus, for n-row matrices ${X_{1}}$ and ${X_{2}}$, $\| \sin \angle ({X_{1}},{V_{2}})\| =\| \sin \angle (\operatorname{span}\langle {X_{1}}\rangle ,{V_{2}})\| $ and $\| \sin \angle ({X_{1}},{X_{2}})\| =\| \sin \angle (\operatorname{span}\langle {X_{1}}\rangle ,\operatorname{span}\langle {X_{2}}\rangle )\| $.
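The one-dimensional formula $\sin \angle (v,{V_{2}})=\| (I-{P_{{V_{2}}}})v/\| v\| \| $ can be illustrated with a short sketch. It assumes that ${V_{2}}$ is given by a list of spanning vectors and builds the projector implicitly through Gram–Schmidt orthonormalization; the function names and the sample vectors are hypothetical and serve only as an illustration.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def orthonormalize(vectors):
    """Gram-Schmidt: an orthonormal basis of the span of the given vectors."""
    basis = []
    for v in vectors:
        w = list(v)
        for q in basis:
            c = dot(w, q)
            w = [wi - c * qi for wi, qi in zip(w, q)]
        norm = math.sqrt(dot(w, w))
        if norm > 1e-12:  # skip vectors that are (numerically) dependent
            basis.append([wi / norm for wi in w])
    return basis


def sin_angle(v, spanning_vectors):
    """Norm of (I - P) applied to v/||v||, P the projector onto the span."""
    q_basis = orthonormalize(spanning_vectors)
    norm_v = math.sqrt(dot(v, v))
    u = [vi / norm_v for vi in v]
    residual = list(u)
    for q in q_basis:
        c = dot(u, q)
        residual = [ri - c * qi for ri, qi in zip(residual, q)]
    return math.sqrt(dot(residual, residual))


# Hypothetical example in R^3: V2 is the coordinate plane spanned by
# (1, 1, 0) and (1, -1, 0), i.e. the plane x3 = 0.
V2 = [[1.0, 1.0, 0.0], [1.0, -1.0, 0.0]]
s_diag = sin_angle([1.0, 0.0, 1.0], V2)   # vector at 45 degrees to the plane
s_orth = sin_angle([0.0, 0.0, 2.0], V2)   # vector orthogonal to the plane
s_in = sin_angle([3.0, 4.0, 0.0], V2)     # vector lying in the plane
```

The three sample vectors cover the intermediate case and the two extremes: a vector in ${V_{2}}$ gives sine 0, and a vector in ${V_{2}^{\perp }}$ gives sine 1, which is the situation exploited in the proof of Lemma 6.4.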

Lemma 6.4.

Let ${V_{11}}$, ${V_{2}}$ and ${V_{13}}$ be three linear subspaces in ${\mathbb{R}}^{n}$, with $\dim {V_{11}}={d_{1}}<\dim {V_{2}}={d_{2}}<\dim {V_{13}}={d_{3}}$ and ${V_{11}}\subset {V_{13}}$. Then there exists such a linear subspace ${V_{12}}\subset {\mathbb{R}}^{n}$ that ${V_{11}}\subset {V_{12}}\subset {V_{13}}$, $\dim {V_{12}}={d_{2}}$, and $\| \sin \angle ({V_{12}},{V_{2}})\| =1$.

Proof.

Since $\dim {V_{13}}+\dim {V_{2}^{\perp }}={d_{3}}+n-{d_{2}}>n$, there exists a vector $v\ne 0$, $v\in {V_{13}}\cap {V_{2}^{\perp }}$. Since $\max ({d_{1}},1)\le \dim \operatorname{span}\langle {V_{11}},v\rangle \le {d_{1}}+1$, it holds that

Therefore, there exists a ${d_{2}}$-dimensional subspace ${V_{12}}$ such that $\operatorname{span}\langle {V_{11}},v\rangle \hspace{0.1667em}\subset \hspace{0.1667em}{V_{12}}\subset {V_{13}}$. Then ${V_{11}}\subset {V_{12}}\subset {V_{13}}$ and $v\in {V_{12}}\cap {V_{2}^{\perp }}$. Hence ${P_{{V_{12}}}}(I-{P_{{V_{2}}}})v=v$, $\| {P_{{V_{12}}}}(I-{P_{{V_{2}}}})\| \ge 1$, and due to equation (23), $\| \sin \angle ({V_{12}},\hspace{0.1667em}{V_{2}})\| =1$. Thus, the subspace ${V_{12}}$ has the desired properties. □

6.3 Perturbation of eigenvectors and invariant spaces

Lemma 6.5.

Let A, B, $\tilde{A}$ be symmetric matrices, ${\lambda _{\min }}(A)=0$, ${\lambda _{2}}(A)>0$ and ${\lambda _{\min }}(B)\ge 0$. Let $A{x_{0}}=0$ and $B{x_{0}}\ne 0$ (so ${x_{0}}$ is an eigenvector of the matrix A that corresponds to the minimum eigenvalue). Let the minimum of the function

be attained at the point ${x_{\ast }}$. Then

Remark 6.5-1.

The function $f(x)$ may or may not attain its minimum. Thus the condition $f({x_{\ast }})={\min _{{x}^{\top }Bx>0}}f(x)$ sometimes cannot be satisfied. But the statement of the lemma still holds if

(25)

\[ \underset{x\to {x_{\ast }}}{\liminf }f(x)=\underset{x:\hspace{0.2778em}{x}^{\top }\hspace{-0.1667em}Bx>0}{\inf }f(x)\]

and ${x_{\ast }}\ne 0$.

We now state the multivariate generalization of Lemma 6.5. We will not generalize Remark 6.5-1. Instead, we will check that the minimum is attained when we use Lemma 6.6 (see Proposition 7.10).

Lemma 6.6.

Let A, B, $\tilde{A}$ be $n\times n$ symmetric matrices, ${\lambda _{i}}(A)=0$ for all $i=1,\dots ,d$, ${\lambda _{d+1}}(A)>0$, ${\lambda _{\min }}(B)\ge 0$. Let ${X_{0}}$ be an $n\times d$ matrix such that $A{X_{0}}=0$ and the matrix ${X_{0}^{\top }}B{X_{0}^{}}$ is nonsingular. Let the functional

(26)

\[\begin{aligned}{}f(X)& ={\lambda _{\max }}\big({\big({X}^{\top }BX\big)}^{-1}{X}^{\top }(A+\tilde{A})X\big)\hspace{1em}\textit{if}\hspace{2.5pt}X\in {\mathbb{R}}^{n\times d}\hspace{2.5pt}\textit{and}\hspace{2.5pt}{X}^{\top }BX>0\textit{,}\\{} f(X)& \hspace{0.2778em}\textit{is not defined otherwise,}\end{aligned}\]

attain its minimum. Then for any point X where the minimum is attained,

6.4 Rosenthal inequality

In the following theorems, a random variable ξ is called centered if $\mathbb{E}\xi =0$.

Theorem 6.7.

Let $\nu \ge 2$ be a nonrandom real number. Then there exist $\alpha \ge 0$ and $\beta \ge 0$ such that for any set of centered mutually independent random variables $\{{\xi _{i}},i=1,\dots ,m\}$, $m\ge 1$, the following inequality holds true:

Theorem 6.7 is well known; see [16, Theorem 2.9, page 59].

Theorem 6.8.

Let ν be a nonrandom real number, $1\le \nu \le 2$. Then there exists $\alpha \ge 0$ such that for any set of centered mutually independent random variables $\{{\xi _{i}},i=1,\dots ,m\}$, $m\ge 1$, the inequality holds true:

Proof.

The desired inequality is trivial for $\nu =1$. For all $1<\nu \le 2$ it is a consequence of the Marcinkiewicz–Zygmund inequality

\[ \mathbb{E}\Bigg[{\Bigg|{\sum \limits_{i=1}^{m}}{\xi _{i}}\Bigg|}^{\nu }\Bigg]\le \alpha \mathbb{E}\Bigg[{\Bigg({\sum \limits_{i=1}^{m}}{\xi _{i}^{2}}\Bigg)}^{\nu /2}\Bigg]\le \alpha \mathbb{E}{\sum \limits_{i=1}^{m}}|{\xi _{i}}{|}^{\nu }=\alpha {\sum \limits_{i=1}^{m}}\mathbb{E}|{\xi _{i}}{|}^{\nu }.\]

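Here the first inequality is due to Marcinkiewicz and Zygmund [11, Theorem 13]. The second inequality follows from the fact that for $\nu \le 2$,

\[ {\Bigg({\sum \limits_{i=1}^{m}}{\xi _{i}^{2}}\Bigg)}^{\nu /2}\le {\sum \limits_{i=1}^{m}}|{\xi _{i}}{|}^{\nu }.\]

□

7 Generalized eigenvalue problem for positive semidefinite matrices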

In this section we explain the relationship between the TLS estimator and the generalized eigenvalue problem. The results of this section are important for constructing the TLS estimator. Proposition 7.9 is used to state the uniqueness of the TLS estimator.

Lemma 7.1.

Let A and B be $n\times n$ symmetric positive semidefinite matrices, with simultaneous diagonalization

\[ A={\big({T}^{-1}\big)}^{\top }\varLambda {T}^{-1},\hspace{2em}B={\big({T}^{-1}\big)}^{\top }\mathrm{M}{T}^{-1},\]

with

\[ \varLambda =\operatorname{diag}({\lambda _{1}},\dots ,{\lambda _{n}}),\hspace{2em}\mathrm{M}=\operatorname{diag}({\mu _{1}},\dots ,{\mu _{n}})\]

(see Theorem 6.2 for its existence). For $i=1,\dots ,n$ denote

\[ {\nu _{i}}=\left\{\begin{array}{l@{\hskip10.0pt}l}{\lambda _{i}}/{\mu _{i}}\hspace{1em}& \textit{if}\hspace{2.5pt}{\mu _{i}}>0\textit{,}\\{} 0\hspace{1em}& \textit{if}\hspace{2.5pt}{\lambda _{i}}=0\textit{,}\\{} +\infty \hspace{1em}& \textit{if}\hspace{2.5pt}{\lambda _{i}}>0\textit{,}\hspace{2.5pt}{\mu _{i}}=0\textit{.}\end{array}\right.\]

Assume that ${\nu _{1}}\le {\nu _{2}}\le \cdots \le {\nu _{n}}$. Then

(27)

\[ {\nu _{i}}=\min \big\{\lambda \ge 0|\textit{``}\exists V,\hspace{2.5pt}\dim V=i:(A-\lambda B){|_{V}}\le 0\textit{''}\big\},\]

i.e., ${\nu _{i}}$ is the smallest number $\lambda \ge 0$, such that there exists an i-dimensional subspace $V\subset {\mathbb{R}}^{n}$, such that the quadratic form $A-\lambda B$ is negative semidefinite on V.

Remark 7.1-2.

Let ${\nu _{i}}<\infty $. The minimum in (27) is attained for V being the linear span of first i columns of the matrix T (i.e., the linear span of the eigenvectors of the matrix pencil $\langle A,B\rangle $ that correspond to the i smallest generalized eigenvalues). That is

In Propositions 7.2–7.5 the following optimization problem is considered. For a fixed $(n+d)\times d$ matrix X find an $m\times (n+d)$ matrix Δ where the constrained minimum is attained:

Here the matrix X is assumed to be of full rank:

Proposition 7.2.

1. The constraints in (28) are compatible if and only if

(30)

\[ \operatorname{span}\big\langle {X}^{\top }{C}^{\top }\big\rangle \subset \operatorname{span}\big\langle {X}^{\top }\varSigma \big\rangle .\]

Here $\operatorname{span}\langle M\rangle $ is the column space of the matrix M.

2. Let the constraints in (28) be compatible. Then the least element of the partially ordered set (in the Loewner order) $\{\Delta {\varSigma }^{\dagger }{\Delta }^{\top }:\Delta \hspace{0.1667em}(I-{P_{\varSigma }})=0\hspace{0.2778em}\textit{and}\hspace{0.2778em}(C-\Delta )X=0\}$ is attained for $\Delta =CX{({X}^{\top }\varSigma X)}^{\dagger }{X}^{\top }\varSigma $ and is equal to $CX{({X}^{\top }\varSigma X)}^{\dagger }{X}^{\top }{C}^{\top }$. This means the following:

2a. For $\Delta =CX{({X}^{\top }\varSigma X)}^{\dagger }{X}^{\top }\varSigma $, it holds that

Remark 7.2-1.

If the constraints are compatible, the least element (and the unique minimum) is attained at a single point. Namely, the equalities

\[\begin{aligned}{}\Delta \hspace{0.1667em}(I-{P_{\varSigma }})& =0,\hspace{2em}(C-\Delta )X=0,\\{} \Delta {\varSigma }^{\dagger }{\Delta }^{\top }& =CX{\big({X}^{\top }\varSigma X\big)}^{\dagger }{X}^{\top }{C}^{\top }\end{aligned}\]

imply $\Delta =CX{({X}^{\top }\varSigma X)}^{\dagger }{X}^{\top }\varSigma $.

Proposition 7.3.

Let the matrix pencil $\langle {C}^{\top }C,\varSigma \rangle $ be definite and (29) hold. The constraints in (28) are compatible if and only if the matrix ${X}^{\top }\varSigma X$ is nonsingular. Then Proposition 7.2 still holds true if ${({X}^{\top }\varSigma X)}^{-1}$ is substituted for ${({X}^{\top }\varSigma X)}^{\dagger }$.

Proposition 7.4.

Let X be an $(n+d)\times d$ matrix which satisfies (29) and makes the constraints in (28) compatible. Then for $k=1,2,\dots ,d$,

(34)

\[\begin{aligned}{}& \underset{\begin{array}{c}\Delta (I-{P_{\varSigma }})=0\\{} (C-\Delta )X=0\end{array}}{\min }{\lambda _{k+m-d}}\big(\Delta {\varSigma }^{\dagger }{\Delta }^{\top }\big)\\{} & \hspace{1em}=\min \big\{\lambda \ge 0:\textit{``}\exists V\subset \operatorname{span}\langle X\rangle ,\hspace{0.2778em}\dim V=k:\big({C}^{\top }C-\lambda \varSigma \big){|_{V}}\le 0\textit{''}\big\}.\end{aligned}\]

Remark 7.4-1.

In the left-hand side of (34) the minima are attained for the same $\Delta =CX{({X}^{\top }\varSigma X)}^{\dagger }{X}^{\top }\varSigma $ for all k (the k sets where the minima are attained have a non-empty intersection; we will show that the intersection consists of a single element).

One can choose a stack of subspaces

such that ${V_{k}}$ is the element where the minimum in the right-hand side of (34) is attained, i.e., for all $k=1,\dots ,d$,

\[ \dim {V_{k}}=k,\hspace{2em}{V_{k}}\subset \operatorname{span}\langle X\rangle ,\hspace{2em}\big({C}^{\top }C-{\nu _{k}}\varSigma \big){|_{{V_{k}}}}\le 0,\]

with ${\nu _{k}}={\min _{\begin{array}{c}\Delta (I-{P_{\varSigma }})=0\\{} (C-\Delta )X=0\end{array}}}{\lambda _{k+m-d}}(\Delta {\varSigma }^{\dagger }{\Delta }^{\top })$.

In Propositions 7.5 to 7.9, we will use notation from the simultaneous diagonalization of the matrices ${C}^{\top }C$ and Σ:

(35)

\[ {C}^{\top }C={\big({T}^{-1}\big)}^{\top }\varLambda {T}^{-1},\hspace{2em}\varSigma ={\big({T}^{-1}\big)}^{\top }\mathrm{M}{T}^{-1},\]

where

\[\begin{aligned}{}\varLambda & =\operatorname{diag}({\lambda _{1}},\dots ,{\lambda _{n+d}}),\hspace{2em}\mathrm{M}=\operatorname{diag}({\mu _{1}},\dots ,{\mu _{n+d}}),\\{} T& =[{u_{1}},{u_{2}},\dots ,{u_{d}},\dots ,{u_{n+d}}].\end{aligned}\]

If Remark 6.2-2 is applicable, let the simultaneous diagonalization be constructed accordingly. For $k=1,\dots ,n+d$ denote

\[ {\nu _{k}}=\left\{\begin{array}{l@{\hskip10.0pt}l}{\lambda _{k}}/{\mu _{k}}\hspace{1em}& \text{if}\hspace{2.5pt}{\mu _{k}}>0\text{,}\\{} 0\hspace{1em}& \text{if}\hspace{2.5pt}{\lambda _{k}}=0\text{,}\\{} +\infty \hspace{1em}& \text{if}\hspace{2.5pt}{\lambda _{k}}>0\text{,}\hspace{2.5pt}{\mu _{k}}=0\text{.}\end{array}\right.\]

Let ${\nu _{k}}$ be arranged in ascending order.

Proposition 7.5.

Let X be an $(n+d)\times d$ matrix which satisfies (29) and makes constraints in (28) compatible. Then

If ${\nu _{d}}<\infty $, then for $X=[{u_{1}},{u_{2}},\dots ,{u_{d}}]$ the inequality in (36) becomes an equality.

Proposition 7.7.

Let $\| M{\| _{\mathrm{U}}}$ be an arbitrary unitarily invariant norm on $m\times n$ matrices. Singular values of the matrix M are arranged in descending order and denoted ${\sigma _{i}}(M)$:

Let ${M_{1}}$ and ${M_{2}}$ be $m\times n$ matrices. Then

1. If ${\sigma _{i}}({M_{1}})\le {\sigma _{i}}({M_{2}})$ for all $i=1,\dots ,\min (m,n)$, then $\| {M_{1}}{\| _{\mathrm{U}}}\le \| {M_{2}}{\| _{\mathrm{U}}}$.

2. If ${\sigma _{1}}({M_{1}})<{\sigma _{1}}({M_{2}})$ and ${\sigma _{i}}({M_{1}})\le {\sigma _{i}}({M_{2}})$ for all $i=2,\dots ,\min (m,n)$, then $\| {M_{1}}{\| _{\mathrm{U}}}<\| {M_{2}}{\| _{\mathrm{U}}}$.

Proposition 7.9.

As a consequence, if ${\nu _{d}}<{\nu _{d+1}}$, then (7) and (8) unambiguously determine $\operatorname{span}\langle {\widehat{X}_{\mathrm{ext}}}\rangle $ of rank d.

Proposition 7.10.

Let $\langle {C}^{\top }C,\varSigma \rangle $ be a definite matrix pencil. Then for any Δ where the minimum in (11) is attained, the corresponding solution ${\widehat{X}_{\mathrm{ext}}}$ of the linear equations (8) (such that $\operatorname{rk}{\widehat{X}_{\mathrm{ext}}}=d$) is a point where the minimum of the functional

is attained. It is also a point where the minimum of

is attained.

8 Appendix: Proofs

8.1 Bounds for eigenvalues of some matrices used in the proof

8.1.1 Eigenvalues of the matrix ${C_{0}^{\top }}{C_{0}^{}}$

The $(n+d)\times (n+d)$ matrix ${C_{0}^{\top }}{C_{0}^{}}$ is symmetric and positive semidefinite. Since ${C_{0}}{X_{\mathrm{ext}}^{0}}={A_{0}}{X_{0}}-{B_{0}}=0$, the matrix ${C_{0}^{\top }}{C_{0}^{}}$ is rank deficient with eigenvalue 0 of multiplicity at least d. As ${A_{0}^{\top }}{A_{0}^{}}$ is an $n\times n$ principal submatrix of ${C_{0}^{\top }}{C_{0}^{}}$,

(40)

\[ {\lambda _{d+1}}\big({C_{0}^{\top }}{C_{0}}\big)\ge {\lambda _{\min }}\big({A_{0}^{\top }}{A_{0}}\big)\]

by the Cauchy interlacing theorem (Theorem IV.4.2 from [19] used d times).

Due to inequality (40), if the matrix ${A_{0}^{\top }}{A_{0}}$ is nonsingular, then ${\lambda _{d+1}}({C_{0}^{\top }}{C_{0}})>0$, whence $\operatorname{rk}({C_{0}^{\top }}{C_{0}})=n$. If the conditions of Theorem 3.5, 3.6 or 3.7 hold true, then ${\lambda _{\min }}({A_{0}^{\top }}{A_{0}})\to \infty $, and thus

\[ {\lambda _{d+1}}\big({C_{0}^{\top }}{C_{0}}\big)\ge {\lambda _{\min }}\big({A_{0}^{\top }}{A_{0}}\big)>0\]

for m large enough.

Proposition 8.1.

Proof.

1. If the matrix Σ is nonsingular, then Proposition 8.1 is obvious. Due to condition (6), $\operatorname{rk}\varSigma \ge d$ (see Remark 2.1), whence $\varSigma \ne 0$. In what follows, assume that Σ is a singular but non-zero matrix. Let $F=(\begin{array}{c}{F_{1}}\\{} {F_{2}}\end{array})$ be a $(n+d)\times (n+d-\operatorname{rk}(\varSigma ))$ matrix whose columns make the basis of the null-space $\operatorname{Ker}(\varSigma )=\{x:\varSigma x=0\}$ of the matrix Σ.

2. Now prove that columns of the matrix $[{I_{n}}\hspace{0.2778em}{X_{0}}]\hspace{0.2222em}F$ are linearly independent. Assume the contrary. Then for some $v\in {\mathbb{R}}^{n+d-\operatorname{rk}(\varSigma )}\setminus \{0\}$,

Furthermore, $Fv\ne 0$ because $v\ne 0$ and the columns of F are linearly independent. Hence, by (41), ${F_{2}}v\ne 0$.

Equality (42) implies that the columns of the matrix $\varSigma {X_{\mathrm{ext}}^{0}}$ are linearly dependent, and this contradicts condition (6). The contradiction means that the columns of the matrix $[{I_{n}}\hspace{0.2778em}{X_{0}}]\hspace{0.2222em}F$ are linearly independent.

3. If the conditions of either Theorem 3.5, 3.6, or 3.7 hold true, then the matrix ${A_{0}^{\top }}{A_{0}}$ is positive definite for m large enough.

4. Under conditions (4) and (5), $\tilde{C}F=0$ almost surely. Indeed, $\mathbb{E}{\tilde{c}_{i}}=0$ and $\operatorname{var}[{\tilde{c}_{i}}F]={F}^{\top }\varSigma F=0$, $i=1,2,\dots ,m$.

5. It remains to prove the implication:

\[ \text{if}\hspace{1em}{A_{0}^{\top }}{A_{0}^{}}>0\hspace{1em}\text{and}\hspace{1em}\tilde{C}F=0,\hspace{1em}\text{then}\hspace{1em}{C}^{\top }C+\varSigma >0.\]

The matrices ${C}^{\top }C$ and Σ are positive semidefinite. Suppose that ${x}^{\top }({C}^{\top }C+\varSigma )x=0$ and prove that $x=0$. Since ${x}^{\top }({C}^{\top }C+\varSigma )x=0$, $Cx=0$ and $\varSigma x=0$. The vector x belongs to the null-space of the matrix Σ. Therefore, $x=Fv$ for some vector $v\in {\mathbb{R}}^{n+d-\operatorname{rk}\varSigma }$. Then

(43)

\[\begin{aligned}{}0={A_{0}^{\top }}Cx& ={A_{0}^{\top }}({C_{0}}+\tilde{C})x\\{} & ={A_{0}^{\top }}{C_{0}}Fv+{A_{0}^{\top }}\tilde{C}Fv\\{} & ={A_{0}^{\top }}{A_{0}^{}}\hspace{0.2222em}[{I_{n}}\hspace{1em}{X_{0}}]\hspace{0.2222em}Fv+0.\end{aligned}\]

As the matrix ${A_{0}^{\top }}{A_{0}^{}}$ is nonsingular and the columns of the matrix $[{I_{n}}\hspace{0.2778em}{X_{0}}]\hspace{0.2222em}F$ are linearly independent, the columns of the matrix ${A_{0}^{\top }}{A_{0}^{}}\hspace{0.2222em}[{I_{n}}\hspace{0.2778em}{X_{0}}]\hspace{0.2222em}F$ are linearly independent as well. Hence, (43) implies $v=0$, and so $x=Fv=0$.

We have proved that the equality ${x}^{\top }({C}^{\top }C+\varSigma )x=0$ implies $x=0$. Thus, the positive semidefinite matrix ${C}^{\top }C+\varSigma $ is nonsingular, and so positive definite. □

8.1.2 Eigenvalues and common eigenvectors of N and ${N}^{-\frac{1}{2}}{C_{0}^{\top }}{C_{0}^{}}{N}^{-\frac{1}{2}}$

The rank-deficient positive semidefinite symmetric matrix ${C_{0}^{\top }}{C_{0}}$ can be factorized as:

\[\begin{aligned}{}{C_{0}^{\top }}{C_{0}^{}}& =U\operatorname{diag}\big({\lambda _{\min }}\big({C_{0}^{\top }}{C_{0}}\big),{\lambda _{2}}\big({C_{0}^{\top }}{C_{0}}\big),\dots ,{\lambda _{n+d}}\big({C_{0}^{\top }}{C_{0}}\big)\big){U}^{\top }\\{} & =U\operatorname{diag}\big({\lambda _{j}}\big({C_{0}^{\top }}{C_{0}}\big);\hspace{0.2778em}j=1,\dots ,n+d\big){U}^{\top },\end{aligned}\]

with an orthogonal matrix U and

Then the eigendecomposition of the matrix $N={C_{0}^{\top }}{C_{0}}+{\lambda _{\min }}({A_{0}^{\top }}{A_{0}})I$ is

\[ N=U\operatorname{diag}\big({\lambda _{j}}\big({C_{0}^{\top }}{C_{0}}\big)+{\lambda _{\min }}\big({A_{0}^{\top }}{A_{0}}\big);\hspace{0.2778em}j=1,\dots ,n+d\big){U}^{\top }.\]

The matrix N is nonsingular as soon as ${A_{0}^{\top }}{A_{0}}$ is nonsingular. Hence, under the conditions of Theorem 3.5, 3.6, or 3.7, the matrix N is nonsingular for m large enough.

Notice that

(44)

\[ {\lambda _{\min }}(N)=\cdots ={\lambda _{d}}(N)={\lambda _{\min }}\big({A_{0}^{\top }}{A_{0}}\big).\]

Since ${C_{0}}{X_{\mathrm{ext}}^{0}}=0$, it holds that

As soon as N is nonsingular, the matrices ${N}^{-1/2}$ and ${N}^{-1/2}{C_{0}^{\top }}{C_{0}}{N}^{-1/2}$ have the eigendecomposition

\[\begin{aligned}{}{N}^{-1/2}& =U\operatorname{diag}\bigg(\frac{1}{\sqrt{{\lambda _{j}}({C_{0}^{\top }}{C_{0}})\hspace{0.1667em}+\hspace{0.1667em}{\lambda _{\min }}({A_{0}^{\top }}{A_{0}})}};\hspace{0.2778em}j\hspace{0.1667em}=\hspace{0.1667em}1,\dots ,n\hspace{0.1667em}+\hspace{0.1667em}d\bigg){U}^{\top },\\{} {N}^{-1/2}{C_{0}^{\top }}{C_{0}}{N}^{-1/2}& =U\operatorname{diag}\bigg(\frac{{\lambda _{j}}({C_{0}^{\top }}{C_{0}})}{{\lambda _{j}}({C_{0}^{\top }}{C_{0}})+{\lambda _{\min }}({A_{0}^{\top }}{A_{0}})};\hspace{0.2778em}j=1,\dots ,n+d\bigg){U}^{\top }.\end{aligned}\]
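Because all three matrices share the eigenvector matrix U, their spectra can be read off from the eigenvalues of ${C_{0}^{\top }}{C_{0}}$ alone. The sketch below does this for a made-up spectrum; the numbers, the dimensions n = 3, d = 2, and the helper name `scaled_spectrum` are assumptions chosen for illustration (the spectrum is only required to have d zeros and its remaining entries bounded below by ${\lambda _{\min }}({A_{0}^{\top }}{A_{0}})$, in line with (40)).

```python
def scaled_spectrum(eigs_c0, lam_min_a0):
    """Eigenvalues of N^{-1/2} C0^T C0 N^{-1/2} for N = C0^T C0 + lam_min_a0 * I,
    computed from the eigenvalues of C0^T C0 (same eigenvectors throughout)."""
    return [lam / (lam + lam_min_a0) for lam in eigs_c0]


# Hypothetical setting: n = 3 regressors, d = 2 responses.
n, d = 3, 2
lam_min_a0 = 4.0                          # stands in for lambda_min(A0^T A0)
eigs_c0 = [0.0, 0.0, 4.0, 10.0, 250.0]    # d zeros, the rest >= lam_min_a0

spec = scaled_spectrum(eigs_c0, lam_min_a0)

# Spectral norm of N^{-1/2}: the largest of 1/sqrt(lambda_j + lam_min_a0),
# attained at the zero eigenvalues of C0^T C0.
norm_n_inv_sqrt = max((lam + lam_min_a0) ** -0.5 for lam in eigs_c0)

# Trace of N^{-1/2} C0^T C0 N^{-1/2}: d zeros plus n terms in [1/2, 1).
trace = sum(spec)
```

In this example the d smallest scaled eigenvalues are 0, the remaining n lie in $[\frac{1}{2},1)$, and the trace therefore falls between $\frac{1}{2}n$ and n, which is the shape of the bounds collected in this subsection.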

Thus, the eigenvalues of ${N}^{-1/2}$ and ${N}^{-1/2}{C_{0}^{\top }}{C_{0}}{N}^{-1/2}$ satisfy the following:

(46)

\[\begin{aligned}{}\big\| {N}^{-1/2}\big\| ={\lambda _{\max }}\big({N}^{-1/2}\big)& =\frac{1}{\sqrt{{\lambda _{\min }}({A_{0}^{\top }}{A_{0}})}};\end{aligned}\]

As a result,

Because $\operatorname{tr}({C_{0}^{}}{N}^{-1}{C_{0}^{\top }})=\operatorname{tr}({C_{0}^{}}{N}^{-1/2}{N}^{-1/2}{C_{0}^{\top }})=\operatorname{tr}({N}^{-1/2}{C_{0}^{\top }}{C_{0}^{}}{N}^{-1/2})$,

(49)

\[ \frac{1}{2}n\le \operatorname{tr}\big({N}^{-1/2}{C_{0}^{\top }}{C_{0}^{}}{N}^{-1/2}\big)\le n.\]

These properties will be used in Sections 8.2 and 8.3.

8.2 Use of eigenvector perturbation theorems

8.2.1 Univariate regression ($d=1$)

Recall relations (44) (whence (51) follows) and (45):

(51)

\[\begin{array}{l}\displaystyle {\widehat{X}_{\mathrm{ext}}^{\top }}N{\widehat{X}_{\mathrm{ext}}}\ge {\lambda _{\min }}\big({A_{0}^{\top }}{A_{0}}\big){\widehat{X}_{\mathrm{ext}}^{\top }}{\widehat{X}_{\mathrm{ext}}};\\{} \displaystyle N{X_{\mathrm{ext}}^{0}}={\lambda _{\min }}\big({A_{0}^{\top }}{A_{0}}\big){X_{\mathrm{ext}}^{0}}.\end{array}\]

Then

(52)

\[\begin{aligned}{}\frac{{({\widehat{X}_{\mathrm{ext}}^{\top }}{X_{\mathrm{ext}}^{0}})}^{2}}{{\widehat{X}_{\mathrm{ext}}^{\top }}{\widehat{X}_{\mathrm{ext}}}\cdot {X_{\mathrm{ext}}^{0\hspace{0.1667em}\top }}{X_{\mathrm{ext}}^{0}}}& \ge \frac{{({\widehat{X}_{\mathrm{ext}}^{\top }}N{X_{\mathrm{ext}}^{0}})}^{2}}{{\widehat{X}_{\mathrm{ext}}^{\top }}N{\widehat{X}_{\mathrm{ext}}}\cdot {X_{\mathrm{ext}}^{0\hspace{0.1667em}\top }}N{X_{\mathrm{ext}}^{0}}},\\{} {\cos }^{2}\angle \big({\widehat{X}_{\mathrm{ext}}},{X_{\mathrm{ext}}^{0}}\big)& \ge {\cos }^{2}\angle \big({N}^{1/2}{\widehat{X}_{\mathrm{ext}}},{N}^{1/2}{X_{\mathrm{ext}}^{0}}\big),\\{} {\sin }^{2}\angle \big({\widehat{X}_{\mathrm{ext}}},{X_{\mathrm{ext}}^{0}}\big)& \le {\sin }^{2}\angle \big({N}^{1/2}{\widehat{X}_{\mathrm{ext}}},{N}^{1/2}{X_{\mathrm{ext}}^{0}}\big).\end{aligned}\]

Now, apply Lemma 6.5 on the perturbation bound for the minimum-eigenvalue eigenvector. The unperturbed symmetric matrix is ${N}^{-1/2}{C_{0}^{\top }}{C_{0}}{N}^{-1/2}$, satisfying

\[\begin{aligned}{}{\lambda _{\min }}\big({N}^{-1/2}{C_{0}^{\top }}{C_{0}}{N}^{-1/2}\big)& =0,\\{} {N}^{-1/2}{C_{0}^{\top }}{C_{0}}{N}^{-1/2}{N}^{1/2}{X_{\mathrm{ext}}^{0}}& =0,\\{} {\lambda _{2}}\big({N}^{-1/2}{C_{0}^{\top }}{C_{0}}{N}^{-1/2}\big)& \ge \frac{1}{2}.\end{aligned}\]

The null-vector of the unperturbed matrix is ${N}^{1/2}{X_{\mathrm{ext}}^{0}}$.

The column vector ${\widehat{X}_{\mathrm{ext}}}$ is a generalized eigenvector of the matrix pencil $\langle {C}^{\top }C,\varSigma \rangle $. Denote the corresponding eigenvalue by ${\lambda _{\min }}$. Thus,

\[ {C}^{\top }C{\widehat{X}_{\mathrm{ext}}}={\lambda _{\min }}\cdot \varSigma {\widehat{X}_{\mathrm{ext}}}.\]

The perturbed matrix is ${N}^{-1/2}({C}^{\top }C-m\varSigma ){N}^{-1/2}$; the minimum eigenvalue of the matrix pencil $\langle {N}^{-1/2}({C}^{\top }C-m\varSigma ){N}^{-1/2},\hspace{0.2778em}{N}^{-1/2}\varSigma {N}^{-1/2}\rangle $ is equal to ${\lambda _{\min }}-m$, and the eigenvector is ${N}^{1/2}{\widehat{X}_{\mathrm{ext}}}$:

We have to verify that ${N}^{-1/2}\varSigma {N}^{-1/2}{N}^{1/2}{X_{\mathrm{ext}}^{0}}\ne 0$; this follows from condition (6). Obviously, the matrix ${N}^{-1/2}\varSigma {N}^{-1/2}$ is positive semidefinite:

Denote

By Lemma 6.5

\[ {\sin }^{2}\angle \big({N}^{1/2}{\widehat{X}_{\mathrm{ext}}},{N}^{1/2}{X_{\mathrm{ext}}^{0}}\big)\le \frac{\epsilon }{0.5}\bigg(1+\frac{{X_{\mathrm{ext}}^{0\hspace{0.1667em}\top }}N{X_{\mathrm{ext}}^{0}}}{{X_{\mathrm{ext}}^{0\hspace{0.1667em}\top }}\varSigma {X_{\mathrm{ext}}^{0}}}\cdot \frac{{\widehat{X}_{\mathrm{ext}}^{\top }}\varSigma {\widehat{X}_{\mathrm{ext}}}}{{\widehat{X}_{\mathrm{ext}}^{\top }}N{\widehat{X}_{\mathrm{ext}}}}\bigg).\]

Use (45) and (51) again, and also use (52):

(54)

\[\begin{aligned}{}{\sin }^{2}\angle \big({\widehat{X}_{\mathrm{ext}}},{X_{\mathrm{ext}}^{0}}\big)& \le {\sin }^{2}\angle \big({N}^{1/2}{\widehat{X}_{\mathrm{ext}}},{N}^{1/2}{X_{\mathrm{ext}}^{0}}\big)\\{} & \le 2\epsilon \bigg(1+\frac{{X_{\mathrm{ext}}^{0\hspace{0.1667em}\top }}{X_{\mathrm{ext}}^{0}}}{{X_{\mathrm{ext}}^{0\hspace{0.1667em}\top }}\varSigma {X_{\mathrm{ext}}^{0}}}\cdot \frac{{\widehat{X}_{\mathrm{ext}}^{\top }}\varSigma {\widehat{X}_{\mathrm{ext}}}}{{\widehat{X}_{\mathrm{ext}}^{\top }}{\widehat{X}_{\mathrm{ext}}}}\bigg)\\{} & \le 2\epsilon \bigg(1+\frac{{X_{\mathrm{ext}}^{0\hspace{0.1667em}\top }}{X_{\mathrm{ext}}^{0}}\cdot \| \varSigma \| }{{X_{\mathrm{ext}}^{0\hspace{0.1667em}\top }}\varSigma {X_{\mathrm{ext}}^{0}}}\bigg).\end{aligned}\]

8.2.2 Multivariate regression ($d\ge 1$)

What follows is valid for both univariate ($d=1$) and multivariate ($d>1$) regression.

Due to (44), $N\ge {\lambda _{\min }}({A_{0}^{\top }}{A_{0}})I$ in the Loewner order; thus inequality (51) holds in the Loewner order. Hence

\[\begin{aligned}{}\forall v\in {\mathbb{R}}^{d}\setminus \{0\}:\hspace{0.1667em}& \frac{{v}^{\top }{\widehat{X}_{\mathrm{ext}}^{\top }}{X_{\mathrm{ext}}^{0}}{({X_{\mathrm{ext}}^{0\hspace{0.1667em}\top }}{X_{\mathrm{ext}}^{0}})}^{-1}{X_{\mathrm{ext}}^{0\hspace{0.1667em}\top }}{\widehat{X}_{\mathrm{ext}}}v}{{v}^{\top }{\widehat{X}_{\mathrm{ext}}^{\top }}{\widehat{X}_{\mathrm{ext}}}v}\\{} & \hspace{1em}\ge {\lambda _{\min }}\big({A_{0}^{\top }}{A_{0}}\big)\frac{{v}^{\top }{\widehat{X}_{\mathrm{ext}}^{\top }}{X_{\mathrm{ext}}^{0}}{({X_{\mathrm{ext}}^{0\hspace{0.1667em}\top }}{X_{\mathrm{ext}}^{0}})}^{-1}{X_{\mathrm{ext}}^{0\hspace{0.1667em}\top }}{\widehat{X}_{\mathrm{ext}}}v}{{v}^{\top }{\widehat{X}_{\mathrm{ext}}^{\top }}N{\widehat{X}_{\mathrm{ext}}}v}.\end{aligned}\]

With equality (45), we get

\[\begin{aligned}{}& \frac{{v}^{\top }{\widehat{X}_{\mathrm{ext}}^{\top }}{X_{\mathrm{ext}}^{0}}{({X_{\mathrm{ext}}^{0\hspace{0.1667em}\top }}{X_{\mathrm{ext}}^{0}})}^{-1}{X_{\mathrm{ext}}^{0\hspace{0.1667em}\top }}{\widehat{X}_{\mathrm{ext}}}v}{{v}^{\top }{\widehat{X}_{\mathrm{ext}}^{\top }}{\widehat{X}_{\mathrm{ext}}}v}\\{} & \hspace{1em}\ge \frac{{v}^{\top }{\widehat{X}_{\mathrm{ext}}^{\top }}N{X_{\mathrm{ext}}^{0}}{({X_{\mathrm{ext}}^{0\hspace{0.1667em}\top }}N{X_{\mathrm{ext}}^{0}})}^{-1}{X_{\mathrm{ext}}^{0\hspace{0.1667em}\top }}N{\widehat{X}_{\mathrm{ext}}}v}{{v}^{\top }{\widehat{X}_{\mathrm{ext}}^{\top }}N{\widehat{X}_{\mathrm{ext}}}v}.\end{aligned}\]

Using equation (24) to determine the sine and noticing that

\[\begin{aligned}{}{P_{{X_{\mathrm{ext}}^{0}}}}& ={X_{\mathrm{ext}}^{0}}{\big({X_{\mathrm{ext}}^{0\hspace{0.1667em}\top }}{X_{\mathrm{ext}}^{0}}\big)}^{-1}{X_{\mathrm{ext}}^{0\hspace{0.1667em}\top }},\\{} {P_{{N}^{1/2}{X_{\mathrm{ext}}^{0}}}}& ={N}^{1/2}{X_{\mathrm{ext}}^{0}}{\big({X_{\mathrm{ext}}^{0\hspace{0.1667em}\top }}N{X_{\mathrm{ext}}^{0}}\big)}^{-1}{X_{\mathrm{ext}}^{0\hspace{0.1667em}\top }}{N}^{1/2},\end{aligned}\]

we get

(55)

\[\begin{array}{l}\displaystyle 1-{\big\| \sin \angle \big({\widehat{X}_{\mathrm{ext}}},{X_{\mathrm{ext}}^{0}}\big)\big\| }^{2}\ge 1-{\big\| \sin \angle \big({N}^{1/2}{\widehat{X}_{\mathrm{ext}}},{N}^{1/2}{X_{\mathrm{ext}}^{0}}\big)\big\| }^{2},\\{} \displaystyle \big\| \sin \angle \big({\widehat{X}_{\mathrm{ext}}},{X_{\mathrm{ext}}^{0}}\big)\big\| \le \big\| \sin \angle \big({N}^{1/2}{\widehat{X}_{\mathrm{ext}}},{N}^{1/2}{X_{\mathrm{ext}}^{0}}\big)\big\| .\end{array}\]

The TLS estimator ${\widehat{X}_{\mathrm{ext}}}$ is defined as a solution to the linear equations (8) for Δ that brings the minimum to (7). By Proposition 7.6, the same Δ brings the minimum to (11). By Proposition 7.10, the functions (38) and (39) attain their minima at the point ${\widehat{X}_{\mathrm{ext}}}$. Therefore, the minimum of the function

(56)

\[ M\mapsto {\lambda _{\max }}\big({\big({M}^{\top }{N}^{-1/2}\varSigma {N}^{-1/2}M\big)}^{-1}{M}^{\top }{N}^{-1/2}\big({C}^{\top }C-m\varSigma \big){N}^{-1/2}M\big)\]

is attained for $M={N}^{1/2}{\widehat{X}_{\mathrm{ext}}}$.

Now, apply Lemma 6.6 on perturbation bounds for a generalized invariant subspace. The unperturbed matrix (denoted A in Lemma 6.6) is ${N}^{-1/2}{C_{0}^{\top }}{C_{0}}{N}^{-1/2}$; its nullspace is the column space of the matrix ${N}^{1/2}{X_{\mathrm{ext}}^{0}}$ (which is denoted ${X_{0}}$ in Lemma 6.6). The perturbed matrix ($A+\tilde{A}$ in Lemma 6.6) is ${N}^{-1/2}({C}^{\top }C-m\varSigma ){N}^{-1/2}$. The matrix B in Lemma 6.6 equals ${N}^{-1/2}\varSigma {N}^{-1/2}$. The norm of the perturbation is denoted ϵ (it is $\| \tilde{A}\| $ in Lemma 6.6). The $(n+d)\times d$ matrix which brings the minimum to (56) is ${N}^{1/2}{\widehat{X}_{\mathrm{ext}}}$. The other conditions of Lemma 6.6 are (47), (48), and (53). We have

\[\begin{aligned}{}& {\big\| \sin \angle \big({N}^{1/2}{\widehat{X}_{\mathrm{ext}}},{N}^{1/2}{X_{\mathrm{ext}}^{0}}\big)\big\| }^{2}\\{} & \hspace{1em}\le \frac{\epsilon }{0.5}\big(1+\big\| {N}^{-1/2}\varSigma {N}^{-1/2}\big\| \hspace{0.1667em}{\lambda _{\max }}\big({\big({X_{\mathrm{ext}}^{0\hspace{0.1667em}\top }}\varSigma {X_{\mathrm{ext}}^{0}}\big)}^{-1}{X_{\mathrm{ext}}^{0\hspace{0.1667em}\top }}N{X_{\mathrm{ext}}^{0}}\big)\big).\end{aligned}\]

Again, with (55), (45) and (46), we have

(57)

\[\begin{aligned}{}& {\big\| \sin \angle \big({\widehat{X}_{\mathrm{ext}}},{X_{\mathrm{ext}}^{0}}\big)\big\| }^{2}\\{} & \hspace{1em}\le {\big\| \sin \angle \big({N}^{1/2}{\widehat{X}_{\mathrm{ext}}},{N}^{1/2}{X_{\mathrm{ext}}^{0}}\big)\big\| }^{2}\\{} & \hspace{1em}\le 2\epsilon \bigg(1+\frac{\| \varSigma \| }{{\lambda _{\min }}({A_{0}^{\top }}{A_{0}})}\hspace{0.1667em}{\lambda _{\max }}\big({\lambda _{\min }}\big({A_{0}^{\top }}{A_{0}}\big){\big({X_{\mathrm{ext}}^{0\hspace{0.1667em}\top }}\varSigma {X_{\mathrm{ext}}^{0}}\big)}^{-1}{X_{\mathrm{ext}}^{0\hspace{0.1667em}\top }}{X_{\mathrm{ext}}^{0}}\big)\bigg)\\{} & \hspace{1em}=2\epsilon \big(1+\| \varSigma \| \hspace{0.1667em}{\lambda _{\max }}\big({\big({X_{\mathrm{ext}}^{0\hspace{0.1667em}\top }}\varSigma {X_{\mathrm{ext}}^{0}}\big)}^{-1}{X_{\mathrm{ext}}^{0\hspace{0.1667em}\top }}{X_{\mathrm{ext}}^{0}}\big)\big).\end{aligned}\]

8.3 Proof of the convergence $\epsilon \to 0$

In this section, we prove the convergences

\[\begin{aligned}{}{M_{1}}& ={N}^{-1/2}{C_{0}^{\top }}\widetilde{C}{N}^{-1/2}\to 0,\\{} {M_{2}}& ={N}^{-1/2}\big({\widetilde{C}}^{\top }\widetilde{C}-m\varSigma \big){N}^{-1/2}\to 0\end{aligned}\]

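in probability for Theorem 3.5, and almost surely for Theorems 3.6 and 3.7. Since $C={C_{0}}+\widetilde{C}$, the perturbation $\tilde{A}$ in Lemma 6.6 equals ${M_{1}^{}}+{M_{1}^{\top }}+{M_{2}}$. Hence $\epsilon =\| {M_{1}^{}}+{M_{1}^{\top }}+{M_{2}}\| \le 2\| {M_{1}}\| +\| {M_{2}}\| $, and the convergences ${M_{1}}\to 0$ and ${M_{2}}\to 0$ imply $\epsilon \to 0$.

End of the proof of Theorem 3.5.

It holds that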

\[\begin{aligned}{}\| {M_{1}}{\| _{F}^{2}}& =\big\| {N}^{-1/2}{C_{0}^{\top }}\tilde{C}{N}^{-1/2}{\big\| _{F}^{2}}=\operatorname{tr}\big({N}^{-1/2}{C_{0}^{\top }}\tilde{C}{N}^{-1}{\tilde{C}}^{\top }{C_{0}^{}}{N}^{-1/2}\big)\\{} & =\operatorname{tr}\big({C_{0}^{}}{N}^{-1}{C_{0}^{\top }}\tilde{C}{N}^{-1}{\tilde{C}}^{\top }\big)={\sum \limits_{i=1}^{m}}{\sum \limits_{j=1}^{m}}{c_{i}^{0}}{N}^{-1}{\big({c_{j}^{0}}\big)}^{\top }{\tilde{c}_{j}}{N}^{-1}{\tilde{c}_{i}^{\top }}.\end{aligned}\]

The right-hand side can be simplified since $\mathbb{E}{\tilde{c}_{j}}{N}^{-1}{\tilde{c}_{i}^{\top }}=0$ for $i\ne j$ and $\mathbb{E}{\tilde{c}_{i}}{N}^{-1}{\tilde{c}_{i}^{\top }}=\operatorname{tr}(\varSigma {N}^{-1})$:

\[ \mathbb{E}\| {M_{1}}{\| _{F}^{2}}={\sum \limits_{i=1}^{m}}{c_{0i}}{N}^{-1}{c_{0i}^{\top }}\operatorname{tr}\big(\varSigma {N}^{-1}\big)=\operatorname{tr}\big({C_{0}}{N}^{-1}{C_{0}^{\top }}\big)\operatorname{tr}\big(\varSigma {N}^{-1}\big).\]

The first multiplier in the right-hand side is bounded due to (50) as $\operatorname{tr}({C_{0}}{N}^{-1}{C_{0}^{\top }})\le n$, for m large enough. Now, construct an upper bound for the second multiplier:

\[\begin{aligned}{}\operatorname{tr}\big(\varSigma {N}^{-1}\big)& =\big\| {N}^{-1/2}{\varSigma }^{1/2}{\big\| _{F}^{2}}\le {\big\| {N}^{-1/2}\big\| }^{2}\big\| {\varSigma }^{1/2}{\big\| _{F}^{2}}={\lambda _{\max }}\big({N}^{-1}\big)\operatorname{tr}\varSigma \\{} & =\frac{\operatorname{tr}\varSigma }{{\lambda _{\min }}(N)}=\frac{\operatorname{tr}\varSigma }{{\lambda _{\min }}({A_{0}^{\top }}{A_{0}^{}})}.\end{aligned}\]
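The two steps behind this bound can be spelled out. The following is only a sketch, assuming (as the notation suggests) that ${\varSigma }^{1/2}$ and ${N}^{-1/2}$ are symmetric square roots. The first equality is cyclicity of the trace, and the inequality is the standard estimate $\| PQ{\| _{F}}\le \| P\| \hspace{0.1667em}\| Q{\| _{F}}$ with the spectral norm on the first factor (P and Q are generic matrices introduced here for illustration):

\[\begin{aligned}{}\operatorname{tr}\big(\varSigma {N}^{-1}\big)& =\operatorname{tr}\big({N}^{-1/2}{\varSigma }^{1/2}{\varSigma }^{1/2}{N}^{-1/2}\big)=\big\| {N}^{-1/2}{\varSigma }^{1/2}{\big\| _{F}^{2}},\\{} \big\| {\varSigma }^{1/2}{\big\| _{F}^{2}}& =\operatorname{tr}\varSigma ,\hspace{2em}{\big\| {N}^{-1/2}\big\| }^{2}={\lambda _{\max }}\big({N}^{-1}\big)=\frac{1}{{\lambda _{\min }}(N)}.\end{aligned}\]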

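Finally,

\[ \mathbb{E}\| {M_{1}}{\| _{F}^{2}}\le \frac{n\operatorname{tr}\varSigma }{{\lambda _{\min }}({A_{0}^{\top }}{A_{0}^{}})}.\]

The conditions of Theorem 3.5 imply that ${\lambda _{\min }}({A_{0}^{\top }}{A_{0}})\to \infty $; therefore, ${M_{1}}\stackrel{\mathrm{P}}{\longrightarrow }0$ as $m\to \infty $.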

Now, we prove that ${M_{2}}\stackrel{\mathrm{P}}{\longrightarrow }0$ as $m\to \infty $. We have

(58)

\[\begin{aligned}{}{M_{2}}& ={N}^{-1/2}\big({\tilde{C}}^{\top }\tilde{C}-m\varSigma \big){N}^{-1/2},\\{} \| {M_{2}}\| & \le \big\| {N}^{-1/2}\big\| \hspace{0.1667em}\big\| {\tilde{C}}^{\top }\tilde{C}-m\varSigma \big\| \hspace{0.1667em}\big\| {N}^{-1/2}\big\| =\frac{\| {\textstyle\sum _{i=1}^{m}}({\tilde{c}_{i}^{\top }}{\tilde{c}_{i}^{}}-\varSigma )\| }{{\lambda _{\min }}({A_{0}^{\top }}{A_{0}^{}})}.\end{aligned}\]

Now apply the Rosenthal inequality (case $1\le \nu \le 2$; Theorem 6.8) to construct a bound for $\mathbb{E}\| {M_{2}}{\| }^{r}$:

\[ \mathbb{E}\| {M_{2}}{\| }^{r}\le \frac{\mathrm{const}{\textstyle\sum _{i=1}^{m}}\mathbb{E}\| {\tilde{c}_{i}^{\top }}{\tilde{c}_{i}^{}}-\varSigma {\| }^{r}}{{\lambda _{\min }^{r}}({A_{0}^{\top }}{A_{0}^{}})}.\]

By the conditions of Theorem 3.5, the sequence $\{\mathbb{E}\| {\tilde{c}_{i}^{\top }}{\tilde{c}_{i}^{}}-\varSigma {\| }^{r},\hspace{2.5pt}i=1,2,\dots \}$ is bounded. Hence

\[\begin{aligned}{}\mathbb{E}\| {M_{2}}{\| }^{r}& \le \frac{O(m)}{{\lambda _{\min }^{r}}({A_{0}^{\top }}{A_{0}^{}})}\hspace{1em}\text{as}\hspace{2.5pt}m\to \infty ,\\{} \mathbb{E}\| {M_{2}}{\| }^{r}& \to 0\hspace{1em}\text{and}\hspace{1em}{M_{2}}\stackrel{\mathrm{P}}{\longrightarrow }0\hspace{1em}\text{as}\hspace{2.5pt}m\to \infty .\end{aligned}\]
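The passage from the moment bound to convergence in probability is Markov's inequality. As a brief sketch, for any fixed threshold $\delta >0$ (a symbol introduced here for illustration only),

\[ \mathrm{P}\big(\| {M_{2}}\| >\delta \big)\le \frac{\mathbb{E}\| {M_{2}}{\| }^{r}}{{\delta }^{r}}\le \frac{O(m)}{{\delta }^{r}{\lambda _{\min }^{r}}({A_{0}^{\top }}{A_{0}^{}})}\hspace{1em}\text{as}\hspace{2.5pt}m\to \infty .\]

The right-hand side tends to zero as soon as $m/{\lambda _{\min }^{r}}({A_{0}^{\top }}{A_{0}})\to 0$, which is the point where the growth condition on ${\lambda _{\min }}({A_{0}^{\top }}{A_{0}})$ from Theorem 3.5 is used.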

□

End of the proof of Theorem 3.6.

By the Rosenthal inequality (case $\nu \ge 2$; Theorem 6.7)

\[\begin{aligned}{}\mathbb{E}\| {M_{1}}{\| }^{2r}& \le \mathrm{const}{\sum \limits_{i=1}^{m}}\mathbb{E}{\big\| {N}^{-1/2}{c_{0i}^{\top }}{\tilde{c}_{i}}{N}^{-1/2}\big\| }^{2r}+\\{} & \hspace{1em}+\mathrm{const}{\Bigg({\sum \limits_{i=1}^{m}}\mathbb{E}{\big\| {N}^{-1/2}{c_{0i}^{\top }}{\tilde{c}_{i}}{N}^{-1/2}\big\| }^{2}\Bigg)}^{r}.\end{aligned}\]

Construct an upper bound for the first summand:

\[\begin{aligned}{}{\sum \limits_{i=1}^{m}}\mathbb{E}{\big\| {N}^{-1/2}{c_{0i}^{\top }}{\tilde{c}_{i}}{N}^{-1/2}\big\| }^{2r}& \le {\sum \limits_{i=1}^{m}}{\big\| {N}^{-1/2}{c_{0i}^{\top }}\big\| }^{2r}\underset{i=1,\dots ,m}{\max }\mathbb{E}\| {\tilde{c}_{i}}{\| }^{2r}{\big\| {N}^{-1/2}\big\| }^{2r},\\{} {\sum \limits_{i=1}^{m}}{\big\| {N}^{-1/2}{c_{0i}^{\top }}\big\| }^{2r}& \le {\Bigg({\sum \limits_{i=1}^{m}}{\big\| {N}^{-1/2}{c_{0i}^{\top }}\big\| }^{2}\Bigg)}^{r}\\{} & ={\Bigg({\sum \limits_{i=1}^{m}}{c_{0i}}{N}^{-1}{c_{0i}^{\top }}\Bigg)}^{r}={\big(\operatorname{tr}\big({C_{0}}{N}^{-1}{C_{0}^{\top }}\big)\big)}^{r}\le {n}^{r}\end{aligned}\]

by inequality (50). By the conditions of Theorem 3.6, the sequence $\{\underset{i=1,\dots ,m}{\max }\mathbb{E}\| {\tilde{c}_{i}}{\| }^{2r},\hspace{2.5pt}m=1,2,\dots \}$ is bounded. Recall that $\| {N}^{-1/2}\| ={\lambda _{\min }^{-1/2}}({A_{0}^{\top }}{A_{0}})$. Thus,

\[ {\sum \limits_{i=1}^{m}}\mathbb{E}{\big\| {N}^{-1/2}{c_{0i}^{\top }}{\tilde{c}_{i}}{N}^{-1/2}\big\| }^{2r}=\frac{O(1)}{{\lambda _{\min }^{r}}({A_{0}^{\top }}{A_{0}})}\hspace{1em}\text{as}\hspace{2.5pt}m\to \infty .\]
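The inequality ${\sum _{i=1}^{m}}\| {N}^{-1/2}{c_{0i}^{\top }}{\| }^{2r}\le {({\sum _{i=1}^{m}}\| {N}^{-1/2}{c_{0i}^{\top }}{\| }^{2})}^{r}$ used in the derivation above is an elementary fact about nonnegative numbers. A short sketch, with illustrative symbols ${x_{i}}$ and S that are not notation from the original, and assuming $r\ge 1$ (which the use of the Rosenthal inequality with $\nu \ge 2$ suggests): for ${x_{i}}\ge 0$ and $S={\sum _{i=1}^{m}}{x_{i}}>0$,

\[ {\sum \limits_{i=1}^{m}}{x_{i}^{r}}={S}^{r}{\sum \limits_{i=1}^{m}}{\bigg(\frac{{x_{i}}}{S}\bigg)}^{r}\le {S}^{r}{\sum \limits_{i=1}^{m}}\frac{{x_{i}}}{S}={S}^{r},\]

because $0\le {x_{i}}/S\le 1$ and hence ${({x_{i}}/S)}^{r}\le {x_{i}}/S$. It is applied with ${x_{i}}=\| {N}^{-1/2}{c_{0i}^{\top }}{\| }^{2}$.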

The asymptotic relation

\[ {\sum \limits_{i=1}^{m}}\mathbb{E}{\big\| {N}^{-1/2}{c_{0i}^{\top }}{\tilde{c}_{i}}{N}^{-1/2}\big\| }^{2}=\frac{O(1)}{{\lambda _{\min }}({A_{0}^{\top }}{A_{0}})}\]

can be proved similarly; in order to prove it, we use boundedness of the sequence $\{\underset{i=1,\dots ,m}{\max }\mathbb{E}\| {\tilde{c}_{i}}{\| }^{2},\hspace{2.5pt}m=1,2,\dots \}$. Finally,

\[ \mathbb{E}\| {M_{1}}{\| }^{2r}=\frac{O(1)}{{\lambda _{\min }^{r}}({A_{0}^{\top }}{A_{0}^{}})}\hspace{1em}\text{as}\hspace{2.5pt}m\to \infty .\]

The conditions of Theorem 3.6 imply that ${\sum _{m={m_{0}}}^{\infty }}\mathbb{E}\| {M_{1}}{\| }^{2r}<\infty $, whence ${M_{1}}\to 0$ as $m\to \infty $, almost surely.
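The step from summability of the moments to almost sure convergence is the usual combination of Markov's inequality and the Borel–Cantelli lemma. A sketch, with $\delta >0$ an arbitrary threshold introduced here for illustration:

\[ {\sum \limits_{m={m_{0}}}^{\infty }}\mathrm{P}\big(\| {M_{1}}\| >\delta \big)\le \frac{1}{{\delta }^{2r}}{\sum \limits_{m={m_{0}}}^{\infty }}\mathbb{E}\| {M_{1}}{\| }^{2r}<\infty .\]

By the Borel–Cantelli lemma, the event $\| {M_{1}}\| >\delta $ then occurs for only finitely many m, almost surely. Letting δ run over a sequence decreasing to zero gives ${M_{1}}\to 0$ almost surely.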

Now, prove that ${M_{2}}\to 0$ almost surely. In order to construct a bound for $\mathbb{E}\| {M_{2}}{\| }^{r}$, use the Rosenthal inequality (case $\nu \ge 2$; Theorem 6.7) as well as (58):

\[\begin{aligned}{}\mathbb{E}\| {M_{2}}{\| }^{r}& \le \frac{\mathbb{E}\| {\textstyle\sum _{i=1}^{m}}({\tilde{c}_{i}^{\top }}{\tilde{c}_{i}^{}}-\varSigma ){\| }^{r}}{{\lambda _{\min }^{r}}({A_{0}^{\top }}{A_{0}^{}})}\\{} & \le \frac{\mathrm{const}{\textstyle\sum _{i=1}^{m}}\mathbb{E}\| {\tilde{c}_{i}^{\top }}{\tilde{c}_{i}^{}}-\varSigma {\| }^{r}}{{\lambda _{\min }^{r}}({A_{0}^{\top }}{A_{0}^{}})}+\frac{\mathrm{const}{({\textstyle\sum _{i=1}^{m}}\mathbb{E}\| {\tilde{c}_{i}^{\top }}{\tilde{c}_{i}^{}}-\varSigma {\| }^{2})}^{r/2}}{{\lambda _{\min }^{r}}({A_{0}^{\top }}{A_{0}^{}})}.\end{aligned}\]

Under the conditions of Theorem 3.6, the sequences $\{\mathbb{E}\| {\tilde{c}_{i}^{\top }}{\tilde{c}_{i}^{}}-\varSigma {\| }^{r},\hspace{2.5pt}i=1,2,\dots \}$ and $\{\mathbb{E}\| {\tilde{c}_{i}^{\top }}{\tilde{c}_{i}^{}}-\varSigma {\| }^{2},\hspace{2.5pt}i=1,2,\dots \}$ are bounded. Thus,

\[\begin{aligned}{}\mathbb{E}\| {M_{2}}{\| }^{r}& =\frac{O({m}^{r/2})}{{\lambda _{\min }^{r}}({A_{0}^{\top }}{A_{0}^{}})}\hspace{1em}\text{as}\hspace{2.5pt}m\to \infty \text{;}\\{} {\sum \limits_{m={m_{0}}}^{\infty }}\mathbb{E}\| {M_{2}}{\| }^{r}& <\infty ,\end{aligned}\]

whence ${M_{2}}\to 0$ as $m\to \infty $, almost surely. □

End of the proof of Theorem 3.7.

The proof of the asymptotic relation

\[ \mathbb{E}\| {M_{1}}{\| }^{2r}=\frac{O(1)}{{\lambda _{\min }^{r}}({A_{0}^{\top }}{A_{0}^{}})}\hspace{1em}\text{as}\hspace{2.5pt}m\to \infty \]

from Theorem 3.6 is still valid. The almost sure convergence ${M_{1}}\to 0$ as $m\to \infty $ is proved in the same way as in Theorem 3.6.

Now, show that ${M_{2}}\to 0$ as $m\to \infty $, almost surely. Under the condition of Theorem 3.7,

\[ \mathbb{E}{\big\| {\tilde{c}_{m}^{\top }}{\tilde{c}_{m}^{}}-\varSigma \big\| }^{r}=O(1),\hspace{2em}{\sum \limits_{m={m_{0}}}^{\infty }}\frac{\mathbb{E}\| {\tilde{c}_{m}^{\top }}{\tilde{c}_{m}^{}}-\varSigma {\| }^{r}}{{\lambda _{\mathrm{min}}^{r}}({A_{0}^{\top }}{A_{0}^{}})}<\infty ,\]

and $\mathbb{E}{\tilde{c}_{i}^{\top }}{\tilde{c}_{i}^{}}-\varSigma =0$. The sequence of nonnegative numbers $\{{\lambda _{\min }}({A_{0}^{\top }}{A_{0}}),\hspace{2.5pt}m=1,2,\dots \}$ never decreases and tends to $+\infty $. Then, by the Law of large numbers in [16, Theorem 6.6, page 209]

\[ \frac{1}{{\lambda _{\mathrm{min}}}({A_{0}^{\top }}{A_{0}^{}})}{\sum \limits_{i=1}^{m}}\big({\tilde{c}_{i}^{\top }}{\tilde{c}_{i}}-\varSigma \big)\to 0\hspace{1em}\text{as}\hspace{2.5pt}m\to \infty \text{,}\hspace{1em}\text{a.s.,}\]

whence, with (58),

\[\begin{aligned}{}\| {M_{2}}\| & \le \frac{\| {\textstyle\sum _{i=1}^{m}}({\tilde{c}_{i}^{\top }}{\tilde{c}_{i}}-\varSigma )\| }{{\lambda _{\min }}({A_{0}^{\top }}{A_{0}^{}})}\to 0\hspace{1em}\text{as}\hspace{2.5pt}m\to \infty \text{, a.s.;}\\{} {M_{2}}& \to 0\hspace{1em}\text{as}\hspace{2.5pt}m\to \infty ,\hspace{1em}\text{a.s.}\end{aligned}\]

□

8.4 Proof of the uniqueness theorems

Proof of Theorem 4.1.

The random events 1, 2 and 3 are defined in the statement of this theorem. The random event 1 always occurs. This was proved in Section 2.2 where the estimator ${\widehat{X}_{\mathrm{ext}}}$ is defined. In order to prove the rest, we first construct the random event (59), which occurs either with high probability or eventually. Then we prove that, whenever (59) occurs, the existence and “more than uniqueness” in the random event 3 hold, and then prove that the random event 2 occurs.

Now, we construct a modified version ${\widehat{X}_{\mathrm{ext}}^{\mathrm{mod}}}$ of the estimator ${\widehat{X}_{\mathrm{ext}}}$ in the following way. If there exist solutions $(\Delta ,{\widehat{X}_{\mathrm{ext}}})$ to (7) & (8) such that $\| \sin \angle ({\widehat{X}_{\mathrm{ext}}},{X_{\mathrm{ext}}^{0}})\| \ge {(1+\| {X_{0}}{\| }^{2})}^{-1/2}$, let ${\widehat{X}_{\mathrm{ext}}^{\mathrm{mod}}}$ come from one of these solutions. Otherwise, if every solution $(\Delta ,{\widehat{X}_{\mathrm{ext}}})$ to (7) & (8) satisfies $\| \sin \angle ({\widehat{X}_{\mathrm{ext}}},{X_{\mathrm{ext}}^{0}})\| <{(1+\| {X_{0}}{\| }^{2})}^{-1/2}$, let ${\widehat{X}_{\mathrm{ext}}^{\mathrm{mod}}}$ come from one of these solutions. In either case, we construct ${\widehat{X}_{\mathrm{ext}}^{\mathrm{mod}}}$ in such a way that it is a random matrix; that this is possible follows from [17].

Thus we construct a matrix ${\widehat{X}_{\mathrm{ext}}^{\mathrm{mod}}}$ such that:

1. ${\widehat{X}_{\mathrm{ext}}^{\mathrm{mod}}}$ is an $(n+d)\times d$ random matrix;

3. if $\| \sin \angle ({\widehat{X}_{\mathrm{ext}}^{\mathrm{mod}}},{X_{\mathrm{ext}}^{0}})\| <{(1+\| {X_{0}}{\| }^{2})}^{-1/2}$, then $\| \sin \angle ({\widehat{X}_{\mathrm{ext}}},{X_{\mathrm{ext}}^{0}})\| <{(1+\| {X_{0}}{\| }^{2})}^{-1/2}$ for any solution $(\Delta ,{\widehat{X}_{\mathrm{ext}}})$ to (7) & (8).

From the proof of Theorem 3.5 it follows that $\| \sin \angle ({\widehat{X}_{\mathrm{ext}}^{\mathrm{mod}}},{X_{\mathrm{ext}}^{0}})\| \to 0$ in probability as $m\to \infty $. From the proof of Theorem 3.6 or 3.7 it follows that $\| \sin \angle ({\widehat{X}_{\mathrm{ext}}^{\mathrm{mod}}},{X_{\mathrm{ext}}^{0}})\| \to 0$ almost surely. Then

(59)

\[ \big\| \sin \angle \big({\widehat{X}_{\mathrm{ext}}^{\mathrm{mod}}},{X_{\mathrm{ext}}^{0}}\big)\big\| <{\big(1+\| {X_{0}}{\| }^{2}\big)}^{-1/2}\]

either with high probability or almost surely.

Whenever the random event (59) occurs, for any solution Δ to (7) and the corresponding full-rank solution ${\widehat{X}_{\mathrm{ext}}}$ to (8) (which always exists) it holds that $\| \sin \angle ({\widehat{X}_{\mathrm{ext}}},{X_{\mathrm{ext}}^{0}})\| <{(1+\| {X_{0}}{\| }^{2})}^{-1/2}$, whence, due to Theorem 8.3, the bottom $d\times d$ block of the matrix ${\widehat{X}_{\mathrm{ext}}}$ is nonsingular. Right-multiplying ${\widehat{X}_{\mathrm{ext}}}$ by a nonsingular matrix, we can transform it into a form $(\begin{array}{c}\widehat{X}\\{} -I\end{array})$. The constructed matrix $\widehat{X}$ is a solution to equation (9) for given Δ. Thus, we have just proved that if the random event (59) occurs, then for any Δ which is a solution to (7), equation (9) has a solution.
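The normalization in the last step can be written explicitly. In the sketch below, ${\widehat{X}_{1}}$ and ${\widehat{X}_{2}}$ are names introduced here for the top $n\times d$ block and the bottom $d\times d$ block of ${\widehat{X}_{\mathrm{ext}}}$; they are not notation from the original.

\[ {\widehat{X}_{\mathrm{ext}}}=\left(\begin{array}{c}{\widehat{X}_{1}}\\{} {\widehat{X}_{2}}\end{array}\right),\hspace{2em}{\widehat{X}_{\mathrm{ext}}}\big(-{\widehat{X}_{2}^{-1}}\big)=\left(\begin{array}{c}-{\widehat{X}_{1}}{\widehat{X}_{2}^{-1}}\\{} -I\end{array}\right),\hspace{2em}\widehat{X}=-{\widehat{X}_{1}}{\widehat{X}_{2}^{-1}}.\]

Right multiplication by the nonsingular matrix $-{\widehat{X}_{2}^{-1}}$ does not change the column space, so the normalized matrix spans the same subspace as ${\widehat{X}_{\mathrm{ext}}}$.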